When building a Linux-based camera product (which happens a lot at Antmicro), most of the time you’ll be adjusting various options, like exposure, gain, or contrast, before you arrive at your desired result. We have not seen a go-to tool that lets you quickly change camera settings from the command line, whether on simple systems that do not have a GUI or when working remotely over UART or SSH.

Usually, for cases where there are lots of parameters of different kinds, GUI applications are developed, as they can include components ideal for parameter manipulation, like sliders, combo boxes, or lists. Still, a GUI usually also requires a window manager, a display, a keyboard and a mouse, and sometimes the terminal will remain the only available option.

From our experience with software development (and gaming!) we know that, with the right approach, lots of parameters can be easily manipulated with just a keyboard, and there are ways to build text-based UIs that can be just as informative as a GUI. For this use case, we saw value in recreating some popular GUI widgets in the CLI.

Workflow improvements are always on Antmicro’s radar, as we work with a variety of tools that enable fewer people to do more, quicker - and an open source approach lies at the heart of our work. That is why we’re releasing pyvidctrl - a text-based UI (TUI) application for setting camera parameters that introduces the comfort of the GUI application to the CLI, while preserving the CLI’s simplicity and usability.

The pyvidctrl tool

The ‘pyvidctrl’ tool is implemented in Python 3 and is available as a pip package.

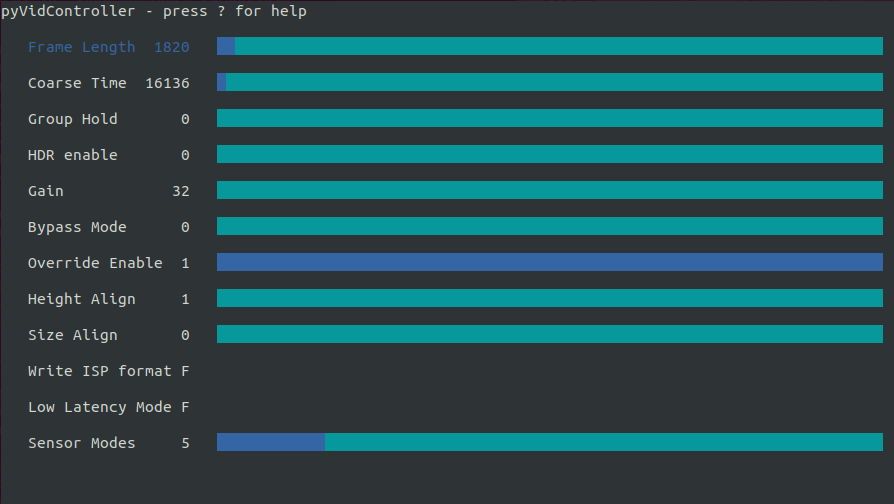

Here is a glimpse at the tool’s TUI:

The tool queries the camera via ioctl for the available V4L2 controls, and prints the settings along with their current values. You can then move between those settings and adjust them with the keyboard. Changes are sent to the camera immediately.
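To give an idea of what such a query looks like, here is a minimal sketch of enumerating V4L2 controls from Python with the `VIDIOC_QUERYCTRL` ioctl. It is not pyvidctrl’s own code: the helper names (`iowr`, `parse_queryctrl`, `list_controls`) and the default `/dev/video0` path are assumptions made for illustration, and the request encoding assumes the generic Linux ioctl layout used on x86 and Arm.

```python
import fcntl
import os
import struct
from collections import namedtuple

# Layout of struct v4l2_queryctrl from <linux/videodev2.h>:
# __u32 id, __u32 type, __u8 name[32], __s32 minimum, maximum, step,
# default_value, __u32 flags, __u32 reserved[2] (68 bytes in total).
QUERYCTRL = struct.Struct("=II32siiiiI2I")

V4L2_CTRL_FLAG_DISABLED = 0x0001
V4L2_CTRL_FLAG_NEXT_CTRL = 0x80000000


def iowr(type_char, number, size):
    """Encode a read/write ioctl request (generic Linux _IOWR layout, assumed)."""
    return (3 << 30) | (size << 16) | (ord(type_char) << 8) | number


VIDIOC_QUERYCTRL = iowr("V", 36, QUERYCTRL.size)

Control = namedtuple("Control", "id type name minimum maximum step default flags")


def parse_queryctrl(raw):
    """Turn the bytes filled in by the driver into a Control tuple."""
    cid, ctype, name, lo, hi, step, default, flags, _, _ = QUERYCTRL.unpack(raw)
    text = name.split(b"\0", 1)[0].decode(errors="replace")
    return Control(cid, ctype, text, lo, hi, step, default, flags)


def list_controls(device="/dev/video0"):
    """Enumerate controls by repeatedly asking the driver for the next control ID."""
    controls = []
    next_id = V4L2_CTRL_FLAG_NEXT_CTRL
    fd = os.open(device, os.O_RDWR)
    try:
        while True:
            request = QUERYCTRL.pack(next_id, 0, b"", 0, 0, 0, 0, 0, 0, 0)
            try:
                raw = fcntl.ioctl(fd, VIDIOC_QUERYCTRL, request)
            except OSError:
                break  # typically EINVAL once there are no further controls
            ctrl = parse_queryctrl(raw)
            if not ctrl.flags & V4L2_CTRL_FLAG_DISABLED:
                controls.append(ctrl)
            next_id = ctrl.id | V4L2_CTRL_FLAG_NEXT_CTRL
    finally:
        os.close(fd)
    return controls
```

On a machine with a camera attached, iterating over `list_controls()` and printing each control’s `name`, `minimum`, and `maximum` should give roughly the information a TUI needs to draw a slider for each setting.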

Such a UI allows changing all your camera parameters without the hassle of retyping commands. It conveniently visualizes the parameters and their ranges, so you can simply check what the possibilities are with your current settings. The tool also lets you observe how the camera feed changes as you gradually modify the selected parameters. This helps you adjust the camera without the usual effort of making, for example, multiple v4l2-ctl command calls.

Pyvidctrl supports selecting the camera, in case there are multiple cameras connected to the device. What is more, you can also store the settings for the camera in a JSON file and have them restored later. With the generated JSON storing the settings for the camera, you can easily distribute settings between multiple cameras and devices, and make your settings persistent between shutdowns by placing pyvidctrl --restore in the startup scripts.
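The store-and-restore idea can be sketched in a few lines of Python. The JSON layout used here (a flat mapping from control ID to value) and the function names are hypothetical and chosen only for illustration; they are not pyvidctrl’s actual file format or API. The `apply_control` callback stands in for whatever writes a value to the camera.

```python
import json


def store_settings(path, values):
    """Write a {control_id: value} mapping to a JSON file (hypothetical layout)."""
    with open(path, "w") as f:
        # JSON object keys must be strings, so control IDs are stringified.
        json.dump({str(cid): val for cid, val in values.items()}, f, indent=2)


def restore_settings(path, apply_control):
    """Read the mapping back and hand each entry to apply_control(id, value)."""
    with open(path) as f:
        saved = json.load(f)
    for cid, val in saved.items():
        apply_control(int(cid), val)
    return len(saved)
```

Because the file only holds IDs and values, the same file could in principle be copied to another device with the same camera model and applied there.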

Use case: controlling demos with multiple cameras in unpredictable lighting conditions

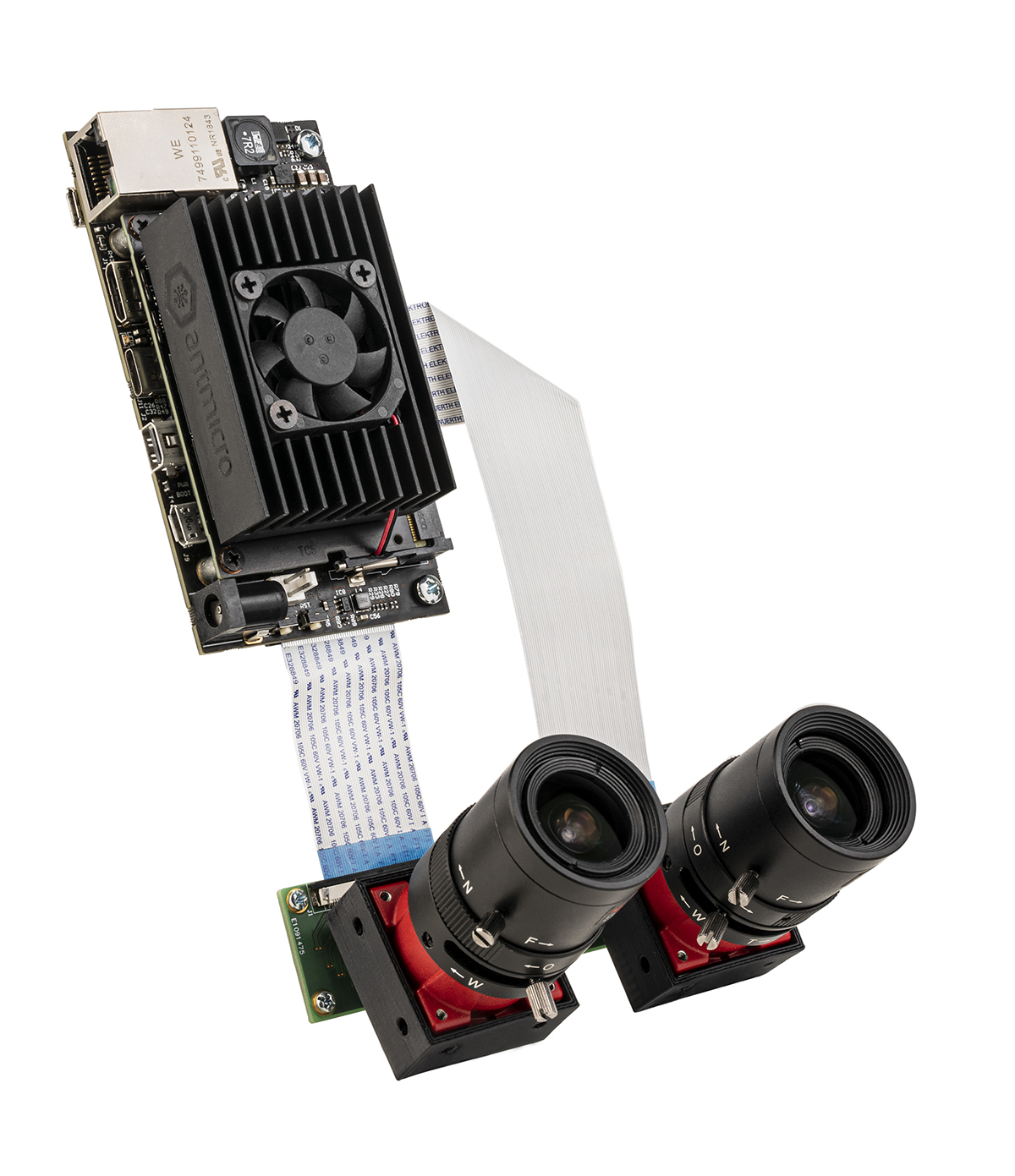

The pyvidctrl application uses the standard V4L2 APIs, which makes it universal and compatible with virtually any camera running on Linux. That means that it also works seamlessly with Antmicro’s open Jetson Nano baseboard that supports multiple Allied Vision Alvium cameras, which is a great base for real-time AI use cases such as object counting and tracking. Testing such algorithms in real-world settings can be challenging because the lighting environment may be hard to predict and may change over the course of the day. You might want to experiment with different parameters, but such changes often require adjustments in the camera settings. With pyvidctrl we are able to remotely change the camera settings to fit the lighting conditions without closing the application or connecting a keyboard or mouse. Because the settings can be stored, we are also able to preserve them between test runs.

Start using pyvidctrl

If you are interested in pyvidctrl and would like to use it in your device, you can download it from its dedicated GitHub repository. The instructions for installing and using the software are provided within the project’s README and the application itself. Let us know what you think! And if you have challenging use cases that involve developing hardware or software for new camera devices, managing or fusing many camera streams, or building FPGA or GPU-based AI applications on top of the video input, we’re always happy to help.