Speech recognition with I2S in Zephyr and TensorFlow Lite

Published:

Topics: Open FPGA, Open OS

Machine learning is still predominantly done in the cloud, which in many use cases results in unnecessary latency, excessive power consumption and dependence on a wireless connection being available. Thanks to recent developments in machine learning on microcontrollers and FPGAs (a domain that Antmicro is currently heavily involved in), small devices are becoming powerful enough to perform machine learning tasks such as image or voice recognition locally.

Machine learning and FPGAs

Our experience in machine learning involves creating sophisticated AI algorithms, building ML/AI accelerators, as well as designing dedicated drivers and providing tooling that streamlines ML development. While many of our AI jobs target higher-end platforms such as NVIDIA Xavier NX, Google Coral or Xilinx UltraScale+, customers are increasingly looking at implementing ML on much smaller devices as well.

In a recent collaboration with Google we have enabled running their TensorFlow Lite machine learning framework on an FPGA platform based on our go-to soft SoC generation framework, LiteX. The project brought TF Lite to FPGAs for the first time, meaning that a whole new set of embedded and IoT devices can now benefit from the capabilities of Google’s framework, as developers are able to deploy ML models on them for gesture and voice recognition, keyphrase detection, etc. It has also opened the door for further optimization of ML applications using dedicated hardware accelerators in the FPGA. We have described this work and demonstrated running and testing the ML framework in our open source simulator Renode in an article on the TF Lite blog.

Importantly, as part of the effort, we integrated TF Lite Micro with the Zephyr real-time operating system. The RTOS is experiencing an unprecedented period of growth, with both Google and Facebook joining as Platinum members. The integration with Zephyr enables adding new platforms and applications relatively quickly, as this note will show.

The setup and architecture overview

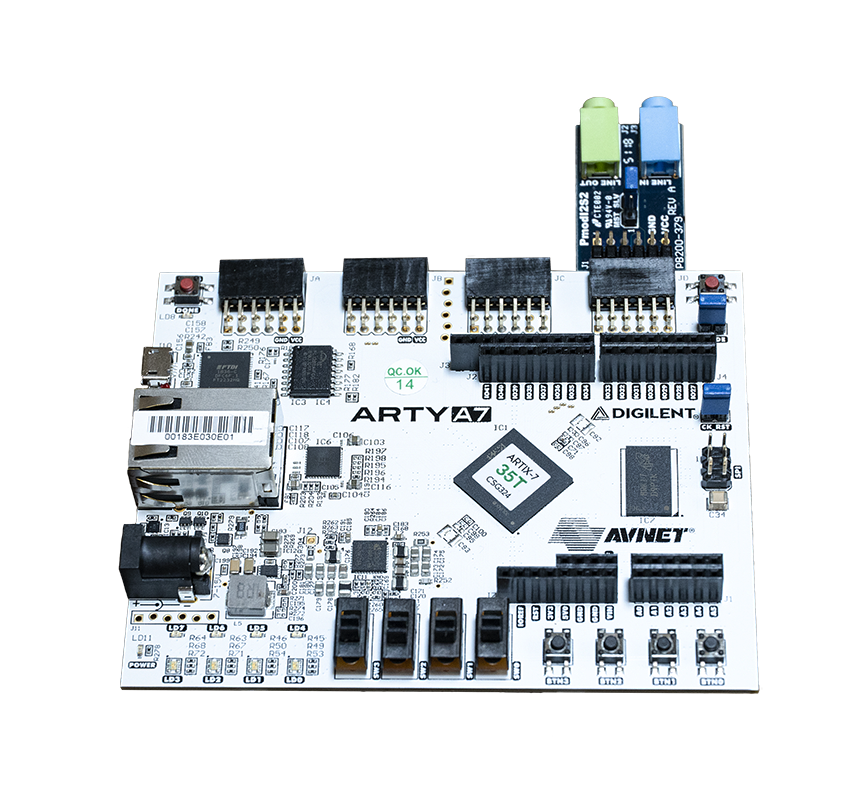

This development makes it possible to perform speech recognition in systems running LiteX-based soft SoCs with I²S, a popular serial bus interface standard used for connecting digital audio devices. The setup used as an example consisted of an Arty A7 board from Digilent and the Pmod I2S2.

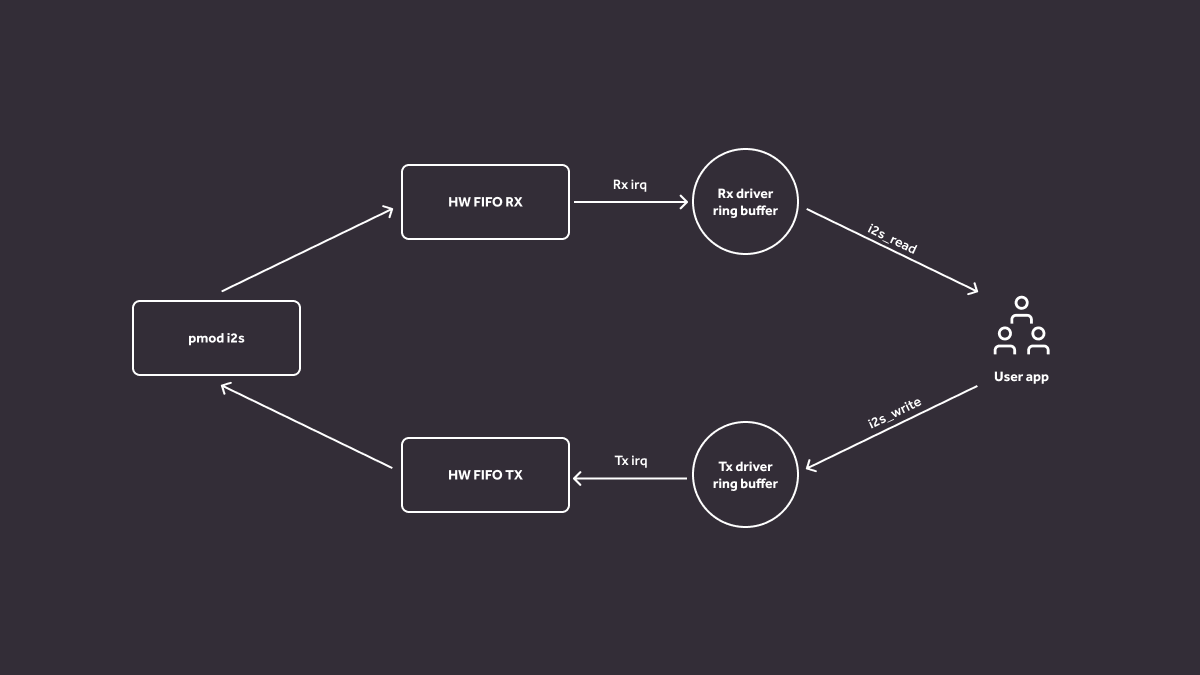

In the demo (instructions are available on our GitHub), the ADC (analog-to-digital converter) chip on the Pmod samples the analog input, converts it into a digital signal and sends it over I²S to the FPGA, where the LiteX-based IP core that we expanded receives it in PCM format. The Zephyr driver then reads the data from the FIFO buffer in real time and makes it available to the TF Lite application, where speech recognition is performed.
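The hand-off between the FIFO and the application can be illustrated with a minimal Python model. This is a sketch for reasoning about the data flow, not the actual gateware or Zephyr driver code: the names `SampleFifo`, `read_block` and `drain`, the FIFO depth and the block size are all hypothetical. It shows why the reader has to keep up with the capture side, since a full FIFO has no choice but to drop samples.

```python
from collections import deque


class SampleFifo:
    """Bounded FIFO standing in for the hardware buffer (hypothetical model)."""

    def __init__(self, depth):
        self.depth = depth
        self.samples = deque()
        self.dropped = 0  # samples lost because the reader fell behind

    def push(self, sample):
        # The capture side cannot wait: if the FIFO is full, the sample is lost.
        if len(self.samples) >= self.depth:
            self.dropped += 1
            return False
        self.samples.append(sample)
        return True

    def read_block(self, block_size):
        # The reader side only hands out complete blocks.
        if len(self.samples) < block_size:
            return None
        return [self.samples.popleft() for _ in range(block_size)]


def drain(fifo, block_size, consumer):
    """Pass every complete block currently in the FIFO to the consumer."""
    blocks = 0
    while True:
        block = fifo.read_block(block_size)
        if block is None:
            return blocks
        consumer(block)
        blocks += 1
```

In this model, `consumer` plays the role of the application-side code that receives audio blocks, and a non-zero `dropped` counter signals that the reader was called too rarely for the chosen depth.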

LiteX gets sound with Zephyr

To achieve the result described above, we first had to expand the I²S support in LiteX so that it could be configured as master, which allowed the interface not only to play sound but also to capture it. As the next step, we developed a Zephyr driver that enabled the I²S to communicate with the Pmod and allowed the CPU to process the received data. We also wrote a software interface in the TF Lite speech recognition demo for extracting sound from the Zephyr driver and passing it to the neural network.
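One way to picture such a software interface is a small history buffer that stores the blocks coming from the driver and returns the samples for a requested time window. The Python sketch below is only an illustration under stated assumptions: the class `AudioHistory`, its methods and the 16 kHz sample rate are hypothetical choices made for this example, not the interface used in the demo.

```python
SAMPLE_RATE_HZ = 16000  # assumed sample rate, chosen for illustration


class AudioHistory:
    """Ring buffer that keeps the most recent samples (hypothetical model)."""

    def __init__(self, capacity):
        self.capacity = capacity
        self.buffer = [0] * capacity
        self.total = 0  # number of samples written since capture started

    def write(self, block):
        # Newer samples overwrite the oldest ones once the buffer is full.
        for sample in block:
            self.buffer[self.total % self.capacity] = sample
            self.total += 1

    def window(self, start_ms, duration_ms):
        # Translate the requested time window into absolute sample indices.
        start = start_ms * SAMPLE_RATE_HZ // 1000
        count = duration_ms * SAMPLE_RATE_HZ // 1000
        oldest = max(0, self.total - self.capacity)
        if start < oldest or start + count > self.total:
            return None  # already overwritten, or not captured yet
        return [self.buffer[(start + i) % self.capacity] for i in range(count)]
```

Returning `None` for a window that is no longer (or not yet) available keeps the caller from silently running inference on stale or partial audio.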

Originally, the LiteX-based FPGA IP core supported only stereo data with 24 bits per sample, so as the final piece of work we extended it with the formats required by the speech recognition demo, e.g. 16-bit mono. The neural network was able to recognize the words “yes” and “no”, which is essentially the point of the demo and validates our FPGA/software design.
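The relationship between the two sample formats can be shown with a few lines of arithmetic. The Python sketch below is a software illustration only: in the demo the additional formats are provided by the IP core itself, and the way the mono signal is derived here (averaging the two channels) is an assumption, as are the function names.

```python
def sign_extend_24(raw):
    """Interpret the low 24 bits of a raw word as a signed sample."""
    raw &= 0xFFFFFF
    return raw - 0x1000000 if raw & 0x800000 else raw


def stereo24_to_mono16(frames):
    """Convert (left, right) signed 24-bit frames to signed 16-bit mono."""
    mono = []
    for left, right in frames:
        mixed = (left + right) // 2  # average the channels (assumed downmix)
        mono.append(mixed >> 8)      # drop the 8 least significant bits
    return mono
```

For example, a full-scale positive frame `(0x7FFFFF, 0x7FFFFF)` maps to `32767`, the largest signed 16-bit value, so the conversion preserves the full dynamic range while discarding the lowest 8 bits of precision.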