Antmicro’s services cover the whole system development process, from systems-on-chip, through hardware platforms with multiple I/O options, camera boards and dedicated drivers, up to customized operating systems and advanced AI algorithms. Because we build on open source technologies, which we customize and fine-tune to meet specific design requirements, our clients get full control over a future-proof product that fully satisfies their needs. We also actively contribute to various areas of the open source ecosystem, e.g. with a range of hardware platforms, boards and accessories that can be used to kick-start system prototyping, such as the recently open-sourced UltraScale+ Processing Module, which is part of our high-speed 3D camera solution for AI-enhanced real-time video analysis.

Achieving high speed to automate complex tasks in real time

This industrial stereovision camera system is one of the many 3D vision systems we have built over the years. It is a high-end, Linux-driven hybrid FPGA + GPGPU solution that delivers top performance in the EU-funded X-MINE industrial scanner, which has been deployed in mines in Sweden, Greece, Bulgaria and Cyprus to automate ore and rock sorting.

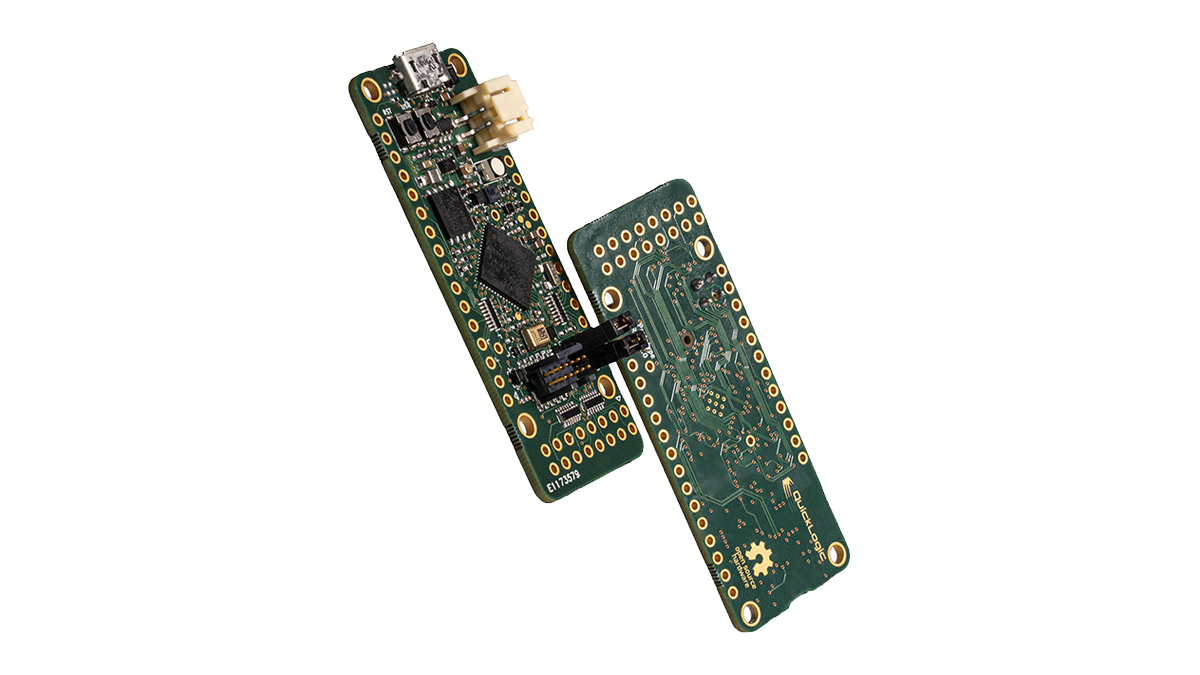

Our 3D camera system combines a Xilinx UltraScale+ FPGA SoC, which interfaces with the video sensors and handles other low-level vision tasks, with an NVIDIA Jetson TX2, whose embedded CUDA-enabled GPU handles the demanding image processing tasks such as image rectification, disparity calculation and 3D reprojection. The Jetson TX2 also runs the main ore identification/tracking application as well as the TCP network server that exposes the 3D vision data to other elements of the X-MINE system, which include 2D and 3D X-ray data sources and other sensors.
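For readers less familiar with stereo vision, the reprojection step rests on the standard relation for a rectified camera pair: a pixel with disparity d (in pixels) lies at depth Z = f·B/d, where f is the focal length in pixels and B the baseline between the cameras. The per-pixel sketch below illustrates only that geometry; the function name and the calibration values in the usage note are hypothetical, not the X-MINE calibration. A GPU pipeline would process the whole disparity map at once, for example with OpenCV's `cv2.reprojectImageTo3D`, which takes a disparity image and a 4x4 reprojection matrix Q.

```python
from typing import Optional, Tuple


def reproject_pixel(u: float, v: float, disparity: float,
                    focal_px: float, baseline_m: float,
                    cx: float, cy: float) -> Optional[Tuple[float, float, float]]:
    """Reproject one pixel of a rectified left image into 3D camera coordinates.

    Hypothetical helper illustrating the pinhole stereo model:
      Z = f * B / d, then X and Y are back-projected through the same focal length.
    (cx, cy) is the principal point in pixels. Returns None for non-positive
    disparities, which typically mark pixels without a reliable stereo match.
    """
    if disparity <= 0:
        return None
    z = focal_px * baseline_m / disparity
    x = (u - cx) * z / focal_px
    y = (v - cy) * z / focal_px
    return (x, y, z)
```

With an assumed focal length of 1400 px and a 0.1 m baseline, a disparity of 140 px corresponds to a point 1 m from the camera; halving the disparity doubles the distance, which is why depth resolution degrades with range.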

The sorting system’s architecture and the dynamic pace of its operation required low-latency, synchronized data processing. By combining the high-speed processing capabilities of the UltraScale+ FPGA with a dedicated C++ video import module on the TX2 side, which bypasses the system’s video buffer and writes frames directly to a memory area indicated by the user (in this case, the scanner application module responsible for CUDA image processing), we obtained a camera-to-data frame latency of around 50 ms, which is hardly noticeable to a human observer.
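The key idea of writing "to a memory area indicated by the user" is that the consumer owns the frame buffers and the importer fills them in place. The Python sketch below models that pattern with a hypothetical `CallerBufferReader` and a small pre-allocated ring of buffers; it is not the actual C++ module, whose mechanism the article does not describe (on Linux, V4L2's user-pointer or DMABUF streaming modes are one common way to achieve this). Python's `readinto()` still copies from the source, so the sketch only shows that there is no per-frame allocation or intermediate staging buffer.

```python
import io
from typing import BinaryIO, List, Optional


class CallerBufferReader:
    """Hypothetical frame importer that fills buffers owned by the consumer.

    The consumer allocates a small ring of frame buffers once; every call to
    read_frame() writes the next frame in place into one of them instead of
    allocating a new frame object.
    """

    def __init__(self, source: BinaryIO, frame_size: int, pool_size: int = 3):
        self._source = source
        self._frame_size = frame_size
        # Pre-allocated ring of buffers, reused round-robin for every frame.
        self._pool: List[bytearray] = [bytearray(frame_size) for _ in range(pool_size)]
        self._next = 0

    def read_frame(self) -> Optional[memoryview]:
        """Return a view of the next filled buffer, or None if no full frame is available.

        The returned view stays valid only until the same buffer is reused,
        i.e. pool_size calls later.
        """
        view = memoryview(self._pool[self._next])
        filled = 0
        # readinto() may return fewer bytes than requested, so loop until full.
        while filled < self._frame_size:
            n = self._source.readinto(view[filled:])
            if not n:  # 0 at EOF; None (no data on a non-blocking stream) is treated the same here
                return None
            filled += n
        self._next = (self._next + 1) % len(self._pool)
        return view


if __name__ == "__main__":
    # Simulated stream of three 4-byte "frames".
    reader = CallerBufferReader(io.BytesIO(bytes(range(12))), frame_size=4, pool_size=2)
    while (frame := reader.read_frame()) is not None:
        print(bytes(frame))
```

The small pool size is the design trade-off: more buffers give the processing stage more time before a frame is overwritten, at the cost of memory.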

Customized Linux, flexible FPGA design, robust application

The software used in the 3D scanner includes a custom, industrial Yocto-based Linux running on the TX2 and an even more compact Buildroot-based Linux distribution running on the UltraScale+. Both OS images are defined as a set of config files and build recipes, which makes them easy to reproduce, trace back and maintain. To prevent unpredictable system behavior, key system components are placed on a read-only partition, and transactional remote updates are done in the background using a shadow system image (i.e. an inactive copy of the system), without interrupting the scanner’s operation. We do a lot of work related to customizing Linux distributions and building over-the-air update systems and edge AI pipelines for specific use cases.
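The shadow-image approach is often called an A/B update. The toy model below is a hypothetical sketch of its transactional logic (write to the inactive slot, verify, switch in a single step, roll back on failure); the `ABUpdater` class, the slot names and the health-check callback are assumptions for illustration. On a real device the slots would be partitions and the switch would typically be a single bootloader setting, but the article does not specify the tooling used in the scanner.

```python
import hashlib
from dataclasses import dataclass, field
from typing import Callable, Dict


@dataclass
class ABUpdater:
    """Toy model of a transactional A/B (active/shadow) system update.

    Slots are simulated as in-memory byte strings; 'active' stands in for
    the bootloader setting that selects which slot is booted.
    """
    slots: Dict[str, bytes] = field(default_factory=lambda: {"A": b"", "B": b""})
    active: str = "A"

    @property
    def shadow(self) -> str:
        return "B" if self.active == "A" else "A"

    def update(self, image: bytes, expected_sha256: str,
               health_check: Callable[[str], bool]) -> bool:
        target = self.shadow
        # 1. Write the new image to the inactive slot; the running system is untouched.
        self.slots[target] = image
        # 2. Verify integrity before the slot can ever be activated.
        if hashlib.sha256(image).hexdigest() != expected_sha256:
            return False
        # 3. Switch over in one step (on hardware: one bootloader variable write).
        previous = self.active
        self.active = target
        # 4. If the new system fails its health check, roll back to the old slot.
        if not health_check(self.active):
            self.active = previous
            return False
        return True
```

Because the running slot is never modified, a failed download, a corrupted image or a bad release leaves the device booting the last known-good system.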

Coming back to the FPGA part, the programmable logic is connected to two CMV2000 industrial camera sensors and includes some of our open source IP cores, e.g. an HDMI transmitter and an AXI display controller. It performs initial image processing and passes the data from both cameras over HDMI to the TX2 for further processing. We often use FPGAs in our vision systems, leveraging their parallel computing capabilities and relying on our extensive portfolio of vendor-neutral, customizable open source FPGA IP cores, including high-speed interfaces such as PCI Express and Ethernet, memory controllers, camera interfaces, and video and audio codecs.

The 3D scanner application is written mostly in Python and C++; it uses the OpenCV library with a CUDA extension and is monitored by a watchdog process.
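A watchdog of this kind usually works on heartbeats: the monitored application signals regularly that it is alive, and the supervisor takes action, typically a restart, when the signals stop. The sketch below is a hypothetical `HeartbeatWatchdog`, not the scanner's actual code (the article does not describe its implementation); the clock is injectable so the timing logic can be exercised without real delays. On systemd-based Linux, `WatchdogSec=` together with `sd_notify("WATCHDOG=1")` provides the same mechanism at the service level.

```python
import time
from typing import Callable


class HeartbeatWatchdog:
    """Minimal heartbeat watchdog (hypothetical sketch).

    The monitored application calls kick() regularly; the supervising loop
    calls check(), which invokes on_timeout (e.g. restarting the application)
    when no heartbeat has arrived within timeout_s seconds.
    """

    def __init__(self, timeout_s: float, on_timeout: Callable[[], None],
                 clock: Callable[[], float] = time.monotonic):
        self.timeout_s = timeout_s
        self.on_timeout = on_timeout
        self.clock = clock
        self.last_kick = clock()
        self.restarts = 0

    def kick(self) -> None:
        """Record a heartbeat from the monitored application."""
        self.last_kick = self.clock()

    def check(self) -> bool:
        """Return True if healthy; otherwise fire on_timeout and reset the timer."""
        if self.clock() - self.last_kick <= self.timeout_s:
            return True
        self.restarts += 1
        self.on_timeout()
        # Give the restarted application a full timeout period to come back up.
        self.last_kick = self.clock()
        return False
```

Using a monotonic clock by default keeps the watchdog immune to wall-clock adjustments such as NTP corrections.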

Reducing cost and time

In the context of the X-MINE project, our camera system provides a three-dimensional description of the observed scene by generating a depth map, in which the brightness level represents an object’s height relative to the belt surface, and by performing object detection. This way it determines the size and position of the chunks travelling on the conveyor belt in real time. The results are combined with data from the X-ray scanner provided by our partner Orexplore, making the sorting procedure faster, more cost-effective and more sustainable.
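One simple way to turn such a depth map into chunk sizes and positions is to threshold the height above the belt and group the remaining pixels into connected components. The sketch below does this in pure Python, assuming the input holds camera-to-surface distances and the belt lies at a known, flat distance; the function `detect_chunks` and its parameters are hypothetical, and the article does not say which detection method the scanner uses. Results are in pixels; converting them to metric units would require the camera calibration. OpenCV's `cv2.connectedComponentsWithStats` performs the labelling step on whole images.

```python
from collections import deque
from typing import Dict, List


def detect_chunks(depth: List[List[float]], belt_distance: float,
                  min_height: float, min_pixels: int = 1) -> List[Dict]:
    """Find objects lying on a conveyor belt in a depth map.

    depth[r][c] is the camera-to-surface distance, so the height above the
    belt is belt_distance - depth[r][c]. Pixels higher than min_height are
    grouped into 4-connected components; components smaller than min_pixels
    are discarded as noise.
    """
    rows = len(depth)
    cols = len(depth[0]) if rows else 0
    height = [[belt_distance - d for d in row] for row in depth]
    seen = [[False] * cols for _ in range(rows)]
    chunks = []
    for r0 in range(rows):
        for c0 in range(cols):
            if seen[r0][c0] or height[r0][c0] <= min_height:
                continue
            # Breadth-first flood fill over 4-connected neighbours.
            queue = deque([(r0, c0)])
            seen[r0][c0] = True
            pixels = []
            while queue:
                r, c = queue.popleft()
                pixels.append((r, c))
                for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
                    if (0 <= nr < rows and 0 <= nc < cols and not seen[nr][nc]
                            and height[nr][nc] > min_height):
                        seen[nr][nc] = True
                        queue.append((nr, nc))
            if len(pixels) < min_pixels:
                continue
            rs = [p[0] for p in pixels]
            cs = [p[1] for p in pixels]
            chunks.append({
                "area_px": len(pixels),
                "bbox": (min(rs), min(cs), max(rs), max(cs)),  # (top, left, bottom, right)
                "centroid": (sum(rs) / len(rs), sum(cs) / len(cs)),
                "max_height": max(height[r][c] for r, c in pixels),
            })
    return chunks
```

Tracking chunks between frames could then match centroids along the belt's direction of travel, since the belt speed is approximately constant.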

Keep in mind that our advanced camera solution, originally designed for X-MINE, can be customized to meet the needs of other demanding projects that aim to automate processes in industrial areas such as robotics, manufacturing and autonomous vehicles. We develop various FPGA- and GPU-based 3D vision systems and AI pipelines using portable open source building blocks, so if you are looking to build an advanced edge AI device that you have full control over, reach out to us at contact@antmicro.com to learn more about how our commercial system development services can help you achieve better results.