ROS-based tester for tracking and detection algorithms

Topics: Edge AI, Open machine vision

For more than a decade, Antmicro has been helping customers from a range of industrial sectors adopt real-time AI applications that automate complex tasks and significantly improve work in terms of efficiency, time and cost. Since the devices we build often perform operations that influence the safety and security of critical infrastructure or people, they must rely on fast and accurate algorithms, often running on limited hardware. However, deploying robust solutions on edge AI hardware with constrained resources is a difficult and demanding task. It often calls for multiple algorithms that interoperate under the management of a decision-making node to achieve the desired result, an arrangement referred to as a policy. In such a complex system of interconnected nodes, replacing an algorithm or making other changes intended to improve performance in one set of use cases may significantly reduce quality in other scenarios. Manual analysis of the accuracy of such systems is highly error-prone, so automating the testing process and being able to retrieve relevant data is key to ensuring the highest possible quality, and that’s exactly what our ROS tracking tester does.

Distributed system with traceable results

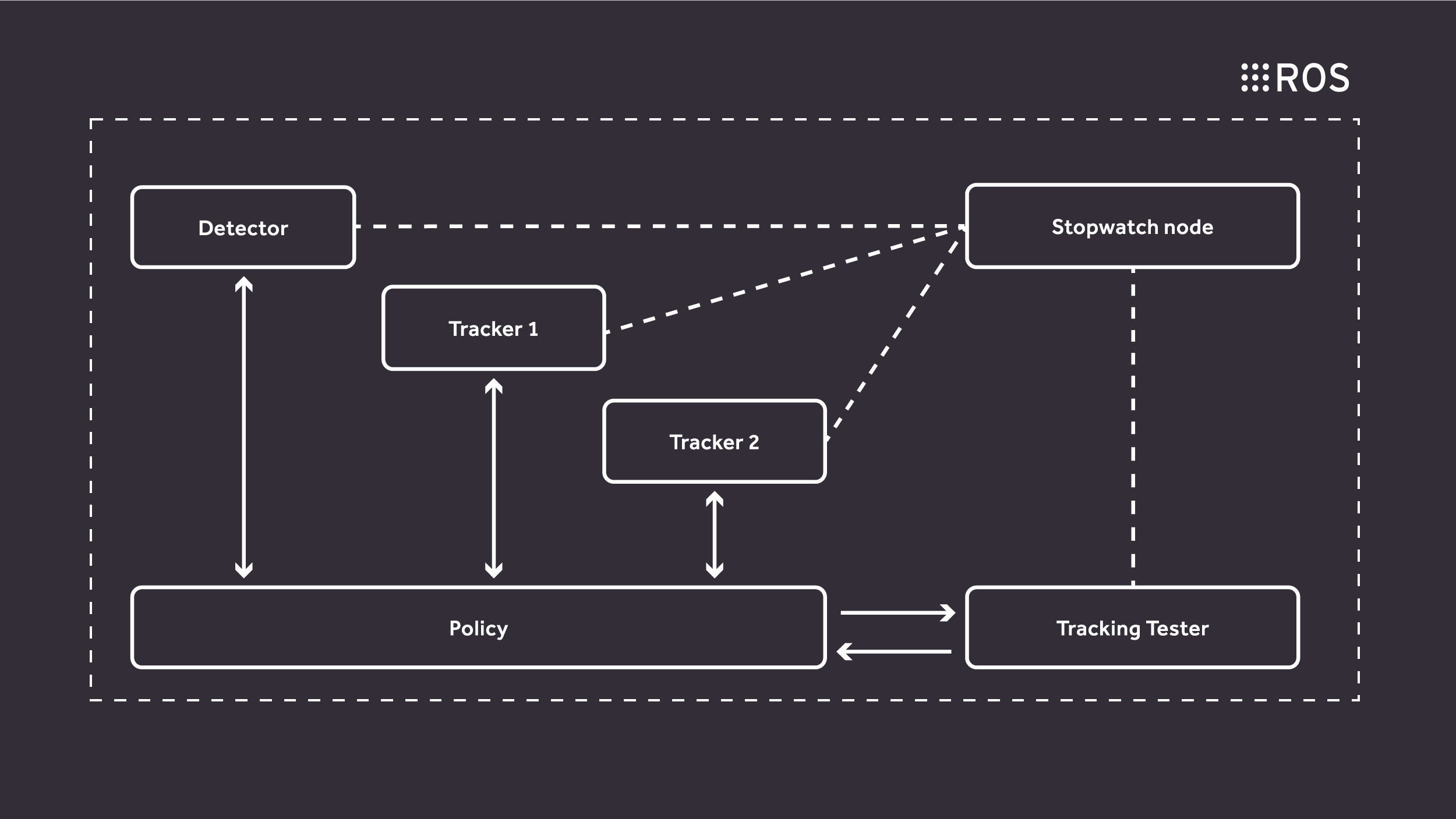

Object detection and tracking in video feeds is a popular computer vision problem, especially in robotics, surveillance, defense and industrial automation. In our edge AI projects we typically approach it with a modular composition, which enables testing individual parts of the system during the design phase. Real use scenarios require a combination of algorithms with varying strengths and weaknesses, so it is extremely important to be able to measure, quantify and compare them so that issues can be analyzed and eliminated early.

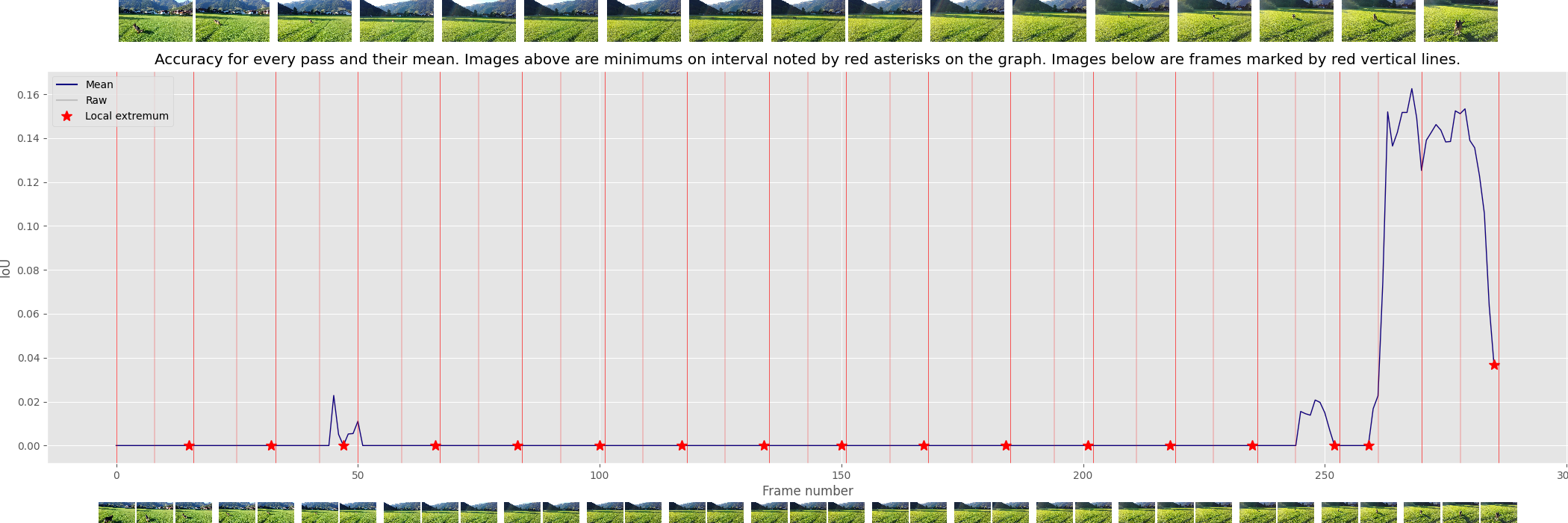

The tester enables benchmarking individual compute nodes and reliably verifying the quality of a detection and tracking system after changes to the tracking/detection algorithms, trained deep learning models, policy parameters, or the hardware. It can report the overall tracking performance, the prediction quality for a single frame, and the speed of the policy used for a given video sequence. The project also includes a report generator which creates a well-structured HTML report that correlates the algorithms’ results with ground truth and overlays them on specific interesting frames in the video, helping establish which approach is best for a given scenario.
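This post does not name the metric behind the per-frame prediction quality. A common choice for comparing a predicted bounding box with ground truth is intersection over union (IoU), so the minimal sketch below assumes it. The `Box` type and function names are hypothetical and are not taken from the tester's code.

```python
from typing import NamedTuple


class Box(NamedTuple):
    """Axis-aligned bounding box in pixels (hypothetical helper type)."""
    x_min: float
    y_min: float
    x_max: float
    y_max: float


def area(box: Box) -> float:
    # Clamp each side to zero so an "inverted" box has no area.
    width = max(0.0, box.x_max - box.x_min)
    height = max(0.0, box.y_max - box.y_min)
    return width * height


def iou(predicted: Box, truth: Box) -> float:
    """Intersection over union: 1.0 for a perfect match, 0.0 for no overlap."""
    overlap = Box(
        max(predicted.x_min, truth.x_min),
        max(predicted.y_min, truth.y_min),
        min(predicted.x_max, truth.x_max),
        min(predicted.y_max, truth.y_max),
    )
    intersection = area(overlap)
    union = area(predicted) + area(truth) - intersection
    return intersection / union if union > 0 else 0.0
```

Averaging such a score over all annotated frames of a sequence would give one possible overall tracking figure, while the per-frame values point to the frames worth inspecting.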

How the system works

The tester can operate in two modes. In the first one, nicknamed fps mode, frames are sent at a preset rate (usually 60 FPS) irrespective of whether the algorithms have responded with a bounding box around the tracked object. This mode verifies how the tested algorithm performs in a real-life scenario: in real time and with possible hardware limitations that may affect the prediction’s quality. In the other mode, called wait mode, the testing node sends a frame and waits for a response from the tested node before sending the next one. This mode measures the algorithms’ peak performance, and the response times recorded by the tester suggest what hardware is needed to achieve that performance.
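The difference between the two modes can be illustrated with a small simulation. This is a simplified sketch on a simulated clock, not the tester's implementation: it uses no ROS APIs, all names are hypothetical, and it assumes that a tracker which is still busy when a frame arrives simply produces no prediction for that frame.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class FrameResult:
    frame_id: int
    sent_at: float            # simulated time in seconds
    latency: Optional[float]  # None means no prediction came back


def run_wait_mode(num_frames: int, tracker_latency: float) -> List[FrameResult]:
    """Send a frame, wait for the prediction, then send the next frame."""
    results = []
    now = 0.0
    for frame_id in range(num_frames):
        results.append(FrameResult(frame_id, now, tracker_latency))
        now += tracker_latency  # the next frame goes out only after the response
    return results


def run_fps_mode(num_frames: int, fps: float,
                 tracker_latency: float) -> List[FrameResult]:
    """Send frames on a fixed schedule, whether or not a response arrived."""
    results = []
    busy_until = 0.0
    for frame_id in range(num_frames):
        now = frame_id / fps
        if now >= busy_until:
            # Tracker is idle: it accepts the frame and answers after its latency.
            busy_until = now + tracker_latency
            results.append(FrameResult(frame_id, now, tracker_latency))
        else:
            # Assumption: a busy tracker skips the frame entirely.
            results.append(FrameResult(frame_id, now, None))
    return results


def answered(results: List[FrameResult]) -> int:
    """Count the frames for which a prediction was returned."""
    return sum(1 for r in results if r.latency is not None)
```

With a hypothetical tracker that needs 25 ms per frame, `run_wait_mode(6, 0.025)` answers every frame and implies a peak rate of 1 / 0.025 = 40 FPS, while `run_fps_mode(6, 60, 0.025)` answers only every other frame because the tracker cannot keep up with 60 FPS.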

The input video sequences and annotations are expected to be in the Amsterdam Library of Ordinary Videos (ALOV) format. A while back we developed an automatic detection and tracking dataset generator that greatly simplifies the process of making ALOV datasets.
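As an illustration of reading such annotations, the sketch below assumes the layout commonly used for ALOV annotation files: one line per annotated frame, holding the frame number followed by the x and y coordinates of the four corners of the box. That layout is an assumption here and should be checked against your dataset. The function name and return type are hypothetical.

```python
from typing import Dict, Tuple

# (x_min, y_min, x_max, y_max) in pixels
BBox = Tuple[float, float, float, float]


def parse_alov_annotations(text: str) -> Dict[int, BBox]:
    """Map frame number to an axis-aligned box.

    Assumed line layout: frame x1 y1 x2 y2 x3 y3 x4 y4
    """
    boxes: Dict[int, BBox] = {}
    for line in text.splitlines():
        fields = line.split()
        if len(fields) != 9:
            continue  # skip blank or malformed lines
        frame = int(fields[0])
        coords = [float(value) for value in fields[1:]]
        xs, ys = coords[0::2], coords[1::2]
        # Reduce the four corners to the enclosing axis-aligned rectangle.
        boxes[frame] = (min(xs), min(ys), max(xs), max(ys))
    return boxes
```

Frames without an annotation line are simply absent from the result, so a comparison against ground truth would only be made on the annotated frames.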

Besides measuring quality, the tester node also uses the ROS stopwatch node to compute the time between sending a frame and receiving the prediction. The tester can also be used in a CI pipeline to ensure that the quality of predictions improves over time and that any issues are detected as early as possible. In addition, to demonstrate the usage of the tester, we have created a policy examples repository that provides a step-by-step description of how to run policy testing on a sample sequence.
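The timing idea can be sketched without ROS: record a timestamp when a frame goes out and subtract it when the matching prediction comes back. The class below is a hypothetical illustration of that bookkeeping and does not reproduce the API of the ROS stopwatch node. The clock is injectable so that the logic can be checked deterministically.

```python
import time
from typing import Callable, Dict


class LatencyRecorder:
    """Tracks the time between sending a frame and receiving its prediction."""

    def __init__(self, clock: Callable[[], float] = time.perf_counter):
        self._clock = clock
        self._sent: Dict[int, float] = {}
        self.latencies: Dict[int, float] = {}

    def frame_sent(self, frame_id: int) -> None:
        self._sent[frame_id] = self._clock()

    def prediction_received(self, frame_id: int) -> float:
        # Raises KeyError if a prediction arrives for a frame never sent.
        latency = self._clock() - self._sent.pop(frame_id)
        self.latencies[frame_id] = latency
        return latency

    def mean_latency(self) -> float:
        if not self.latencies:
            raise ValueError("no predictions recorded yet")
        return sum(self.latencies.values()) / len(self.latencies)
```

In a CI job, a check such as comparing `mean_latency()` against an agreed time budget could fail the build when a change slows the policy down. The budget itself would be project-specific.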

Easy scaling and testing

Our tester is based on ROS, a flexible robotics framework originally built for Linux that is also available for real-time operating systems such as Zephyr RTOS. This opens up the possibility of scaling the compute resources involved, from small and power-efficient MCUs to large and complex Linux devices. As members of both the Linux Foundation and the Zephyr Project, we help companies build robotics systems with a modular architecture that gives developers a lot of freedom in adjusting them to specific uses or hardware. With ROS we can design an infrastructure consisting of multiple algorithm nodes, which can be easily replaced with bleeding-edge solutions or whatever is most suitable for the task at hand. ROS allows distributing nodes across multiple machines, so the whole system is not limited to one device. For example, heavy computations or policy logic can be executed on one device, frame fetching can be performed on another, and control of effectors can run on yet another.

Antmicro offers flexible hardware, software and AI engineering services and develops tools to help businesses move faster in the edge AI space. If you are building an AI-capable device to automate operations in your business, or to offer new capabilities to your customers and users, don’t hesitate to reach out to us at contact@antmicro.com to find out how we can help you achieve your goals.