Based on internal R&D efforts, research projects like VEDLIoT and practical applications in a variety of customer cases, Antmicro is constantly working on improving Kenning, its open source framework for creating deployment flows and runtimes for Deep Neural Network applications on embedded hardware platforms. The AI toolkit is now gaining additional flexibility through highly customizable, modular blocks for developing real-time runtimes and automated search for best parameters for the optimization and compilation process for DNN models.

This note describes two improvements to Kenning: KenningFlow, which makes prototyping and development of applications utilizing DNNs significantly easier, and GridOptimizer, which facilitates finding best-fitting sets of frameworks and optimizations by evaluating diverse scenarios in an automated manner.

New Kenning runtimes

The original implementation of runtimes in Kenning, described in a previous blog note, was limited to a single input source called DataProvider (wrapping e.g. camera handling, TCP connection for input data, or readouts from other sensors), a single optimized model, and allowed to connect multiple outputs called OutputCollectors (display on screen, save to file, send predictions via TCP, etc.). Typical AI-based application flows, however, involve multiple input sources as well as multiple processing blocks and models in order to be able to, e.g. perform text detection and recognition, object detection and tracking, and more. With the recent advances in block support validation, JSON-based compilation description, and improved IO specification management, we were able to address these shortcomings by introducing the KenningFlow class.

KenningFlow uses the same mechanisms as compilers and optimizers, i.e. JSON-based definition of ModelWrappers, Runtimes and other blocks. A KenningFlow consists of Runners, which are wrappers for specific actions in the runtime. It can wrap existing Kenning components and run their methods, or implement an entirely new processing flow that is specific to a given scenario, e.g. thresholding and extraction of interesting objects using classic computer vision algorithms before passing them to the model.

Each Runner uses a specified format of inputs and outputs for validating a runtime specification prior to execution. In most cases, the specification of inputs and outputs is a matter of specifying the shape of a tensor (size of dimensions, type of values), but it can also specify that a Runner expects a list of objects holding Object Detection data (for example for visualization purposes).

Such specification of inputs and outputs is later used for validation of the defined runtime to check if it works correctly.

The currently available Runners can be used for:

- obtaining data from sources -

DataProvider, e.g. iterating files in the filesystem, grabbing frames from a camera or downloading data from a remote source. - processing and delivering data -

OutputCollector, e.g. sending results to the client application, visualizing model results in GUI, or storing results in a summary file. - running and processing various models -

ModelRuntimeRunnerwhich takes the ModelWrapper and Runtime specifications to create an optimized runtime for the model and uses its specification and processing of inputs and outputs to process incoming data and return predictions. - applying other actions, such as additional data analysis, preprocessing, packing, and more.

Here, you can see an example of a flow definition:

[

{

"type": "kenning.dataproviders.camera_dataprovider.CameraDataProvider",

"parameters": {

"video_file_path": "/dev/video0",

"input_memory_layout": "NCHW",

"input_width": 608,

"input_height": 608

},

"outputs": {

"frame": "cam_frame"

}

},

{

"type": "kenning.runners.modelruntime_runner.ModelRuntimeRunner",

"parameters": {

"model_wrapper": {

"type": "kenning.modelwrappers.detectors.yolov4.ONNXYOLOV4",

"parameters": {

"model_path": "./kenning/resources/models/detection/yolov4.onnx"

}

},

"runtime": {

"type": "kenning.runtimes.onnx.ONNXRuntime",

"parameters": {

"save_model_path": "./kenning/resources/models/detection/yolov4.onnx",

"execution_providers": ["CUDAExecutionProvider"]

}

}

},

"inputs": {

"input": "cam_frame"

},

"outputs": {

"detection_output": "predictions"

}

},

{

"type": "kenning.outputcollectors.real_time_visualizers.RealTimeDetectionVisualizer",

"parameters": {

"viewer_width": 512,

"viewer_height": 512,

"input_memory_layout": "NCHW",

"input_color_format": "BGR"

},

"inputs": {

"frame": "cam_frame",

"detection_data": "predictions"

}

}

]It is a list of the following Runner blocks:

CameraDataProvideris aDataProviderthat provides frames from a camera or a video source. Here, we configure the image data layout and resolution, and set the name of the published frames tocam_frameModelRuntimeRunnertakes theModelWrapperand theRuntimeobjects defining data processing for YOLOv4 as well as inference runtime for YOLOv4 in ONNXRuntime. The Runtime usesCUDAExecutionProviderto run the model entirely on GPU. TheModelRuntimeRunnertakes the input frames fromcam_framedelivered byCameraDataProvider. It also returns predictions under the rather self-explanatorypredictionsname.RealTimeDetectionVisualizeris a GUI application based on dearpygui which visualizes object detection results. It takes inputs from two sources -cam_framefor frames checked by the model, andpredictionsfor detected bounding boxes.

Save the above JSON file as runtime.json.

With this, you can run (assuming Kenning is installed or that we are located in the cloned repository):

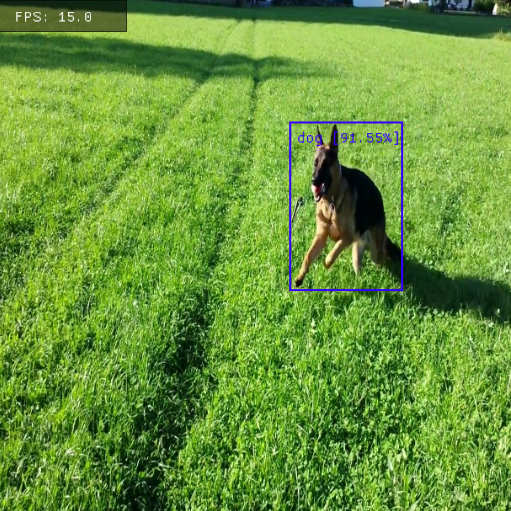

python -m kenning.scenarios.json_flow_runner runtime.jsonIt should start the flow and display the results on screen, as shown below:

KenningFlow is a great prototyping tool for advanced applications utilizing deep neural networks, which can be used in deployment on some more powerful, Linux-based platforms, such as the NVIDIA Jetson family, including NVIDIA Jetson AGX Orin discussed in an introductory note on Antmicro’s blog and in a blog note announcing Antmicro’s Jetson Orin Baseboard. Thanks to its flexibility, it can act as a base for highly customizable applications, where the chain of operations changes dynamically per use case.

Automatic search for best parameters and optimizations

Kenning already does a lot to automate and simplify the model optimization process:

- Provides a formalized, serialized way to store a definition of an optimization pipeline along with measurements used to sum up a model both in terms of quality and performance

- Provides ready-to-use blocks for optimizing a model and runs it using the optimized runtimes, even remotely, using the measurements mentioned above

- Provides methods to generate a textual and graphical summary of models that can be easily embedded in Sphinx-based documentations, and Markdown-based documents

- Provides methods to visually compare models to facilitate the process of selecting optimal solutions, as described in Antmicro’s recent blog note.

Some of the largest hurdles that remained, though, are the length of the neural network optimization process and the necessity to tweak parameters and available blocks manually. In order to address these, we equipped Kenning with a GridOptimizer class, which you can use to customize the list of optimization blocks used and the list of parameter values to be tested. GridOptimizer runs the optimization process by checking combinations of provided optimizations and parameters, which it then uses to produce evaluation results which the user can then use to create a comparison report.

Here, you can see a sample grid specification:

{

"optimization_parameters":

{

"strategy": "grid_search",

"optimizable": ["optimizers", "runtime"],

"metric": "inferencetime_mean",

"policy": "min"

},

"model_wrapper":

{

"type": "kenning.modelwrappers.classification.tensorflow_pet_dataset.TensorFlowPetDatasetMobileNetV2",

"parameters":

{

"model_path": "./kenning/resources/models/classification/tensorflow_pet_dataset_mobilenetv2.h5"

}

},

"dataset":

{

"type": "kenning.datasets.pet_dataset.PetDataset",

"parameters":

{

"dataset_root": "./build/pet-dataset"

}

},

"optimizers":

[

{

"type": "kenning.compilers.tflite.TFLiteCompiler",

"parameters":

{

"target": ["default"],

"compiled_model_path": ["./build/compiled_model.tflite"]

}

},

{

"type": "kenning.compilers.tvm.TVMCompiler",

"parameters":

{

"target": ["llvm", “llvm -mcpu=avx2”],

"compiled_model_path": ["./build/compiled_model.tar"],

"opt_level": [3,4],

"conv2d_data_layout": ["NHWC", "NCHW"]

}

}

],

"runtime":

[

{

"type": "kenning.runtimes.tvm.TVMRuntime",

"parameters":

{

"save_model_path": ["./build/compiled_model.tar"]

}

},

{

"type": "kenning.runtimes.tflite.TFLiteRuntime",

"parameters":

{

"save_model_path": ["./build/compiled_model.tflite"]

}

}

]

}Firstly, we specify the optimization parameters:

"optimization_parameters":

{

"strategy": "grid_search",

"optimizable": ["optimizers", "runtime"],

"metric": "inferencetime_mean",

"policy": "min"

},The specification includes the following:

strategy- strategy for searching,grid_searchmeans a full search of all available combinations in the flowoptimizable- determines which components of the optimization pipeline can be disabled and enabled during a combination check. In this case, listingoptimizersandruntimemeans that we will check combinations of all components in their respective listsmetric- determines which metric is the main factor considered during model comparison, in this case it is the mean model inference timepolicy- determines the aim of the optimization regarding themetricprovided, in this case we minimize the mean inference time

Then, there are blocks that are common for any optimization flow described in our previous blog notes:

model_wrapper- describing the model used for the taskdataset- for evaluation purposesoptimizers- defining possible steps for model optimizationruntime- defining possible runtimes to use

What is different in these blocks now is that:

- for

optimizersandruntime, we specify a list of possible blocks that can be used for optimizing and running the model. Due to the fact that we describedoptimizersandruntimeas optimizable inoptimization_parameters,GridOptimizerchecks all possible combinations of given optimizers and runtimes to determine which combination yields the best results. - for some of the parameters, instead of providing an actual value, we provide a list of values. E.g., for

kenning.compilers.tvm.TVMCompiler, in theconv2d_data_layout, we specify NHWC and NCHW data layouts, inopt_levelwe specify levels 3 and 4, and providellvmandllvm-mcpu=avx2as targets.GridOptimizerwill check all combinations of given parameters in order to find the best possible combination from the inference time perspective.

To start GridOptimizer, save the above configuration in a grid-optimizer-example.json file and run (assuming you have Kenning installed or cloned in the current directory):

python3 -m kenning.scenarios.optimization_runner

grid-optimizer-example.json

build/tvm-tflite-pipeline-optimization-output.json

--verbosity INFOThis will run all combinations of the above blocks and their parameters and sum it up in the JSON file.

Automate pipeline optimization and create advanced edge AI applications with Antmicro

As illustrated in the examples above, Antmicro’s latest additions to Kenning allow you to fine-tune your DNN workflows as well as create pipeline-based state-of-the-art edge AI applications and use them on advanced platforms such as NVIDIA’s Jetson Orin Nano.

If you are interested in using Kenning for your particular use case, or you would like to discuss the DNN and edge AI software and hardware possibilities Antmicro offers, do not hesitate to reach out at contact@antmicro.com.