Evaluating and deploying ROS 2 nodes for real-time AI computer vision with Kenning

Published:

Topics: Edge AI, Open machine vision, Open software libraries, Open source tools

For complex ML systems involving many sensors (e.g. cameras, depth sensors, accelerometers), interconnected algorithms and a sophisticated control flow, system performance monitoring is a challenging problem. To architect these kinds of complex systems in a modular and scalable way, we usually use ROS 2, as it offers asynchronous and parallel execution of independent tasks by a distributed system of nodes. This article presents two new ROS 2 nodes developed by Antmicro to simplify the deployment and performance evaluation of complex ML systems using our open source Kenning AI framework.

ROS 2 lets you instantiate dedicated nodes for managing sensors, control flow, individual AI models, effectors and displays. These nodes can be easily distributed across many devices and communicate with each other through services (server-client), topics (publisher-subscriber) and actions.

Production scenarios often involve multiple models working in parallel, along with data collection and transfer, each with its own time and compute constraints. These constraints can hinder performance in real-life setups, e.g. when tracking objects moving at higher speeds. In such complex cases, we must ensure that the data fetching, processing, displaying, etc. of one model does not affect another, whether by limiting the available computational resources and memory or by obstructing I/O management.

Below we introduce two new ROS 2 nodes, CVNode and CVNode Manager, which let developers of ROS-based systems reliably evaluate models and manage vision pipelines in the context of entire, dynamically operating AI applications. We describe their functionality in detail and provide a demo of real-time instance segmentation in two separate runtimes, with metrics for performance comparison.

Real-time ROS 2 application evaluation infrastructure with CVNode and CVNode Manager

Last year, we open-sourced ros2-camera-node, a tool that enables control over cameras and reading frames using our grabthecam library, and the ros2-gui-node library for creating fast and GPU-enabled graphical interfaces with Dear ImGui, containing ready-to-use, hackable widgets that can easily be attached to existing ROS 2 topics, services and actions. Building on top of those tools and the Kenning framework, we are now able to provide real-time performance evaluation of ROS 2 applications.

Our first new addition to the ROS 2 ecosystem is the CVNode (Computer Vision Node) template which introduces a base class for implementing ROS 2 nodes encapsulating computer vision models, e.g. for classification, object detection or instance segmentation. CVNode comes with examples of instance segmentation nodes utilizing YOLACT and MaskRCNN models. CVNode provides basic methods for the following processes and requires them to be implemented in the application under test:

- Loading / releasing models (prepare / cleanup services)

- Input and output processing (process service)

- Running inference (process service).

Other than using ROS 2, CVNode is technology-agnostic.
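The division of responsibilities listed above can be sketched in plain Python. This is a minimal illustration, not the actual CVNode API: the names `VisionNodeSketch`, `ThresholdModel`, `preprocess`, `run_inference` and `postprocess` are hypothetical, `prepare`, `process` and `cleanup` only mirror the service names mentioned above, and the ROS 2 plumbing is left out so that the example runs standalone.

```python
from abc import ABC, abstractmethod


class VisionNodeSketch(ABC):
    """Hypothetical stand-in for a CVNode-like base class (no ROS 2 here)."""

    @abstractmethod
    def prepare(self) -> bool:
        """Load the model; return True on success."""

    @abstractmethod
    def preprocess(self, frames):
        """Convert raw frames into model inputs."""

    @abstractmethod
    def run_inference(self, inputs):
        """Run the model on preprocessed inputs."""

    @abstractmethod
    def postprocess(self, outputs):
        """Convert raw model outputs into predictions."""

    @abstractmethod
    def cleanup(self) -> None:
        """Release the model and any other resources."""

    def process(self, frames):
        """Full pipeline: input processing, inference, output processing."""
        return self.postprocess(self.run_inference(self.preprocess(frames)))


class ThresholdModel(VisionNodeSketch):
    """Toy 'model' that counts pixels brighter than a threshold."""

    def __init__(self, threshold: int = 128):
        self.threshold = threshold
        self.loaded = False

    def prepare(self) -> bool:
        self.loaded = True
        return True

    def preprocess(self, frames):
        # Scale 8-bit pixel values to the [0, 1] range.
        return [[pixel / 255.0 for pixel in frame] for frame in frames]

    def run_inference(self, inputs):
        if not self.loaded:
            raise RuntimeError("prepare() must be called before process()")
        limit = self.threshold / 255.0
        return [[value > limit for value in frame] for frame in inputs]

    def postprocess(self, outputs):
        # One number per frame: how many pixels passed the threshold.
        return [sum(mask) for mask in outputs]

    def cleanup(self) -> None:
        self.loaded = False
```

A concrete node only fills in the five abstract methods; the caller drives it through `prepare()`, any number of `process()` calls, and a final `cleanup()`.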

For managing instances of CVNode and other nodes in a computer vision algorithm, we are also introducing CVNode Manager. This node enables and disables other nodes, acts as a middleman for communication with the rest of the system, and allows for evaluation of vision nodes using Kenning, which collects all necessary performance and quality metrics and provides test data at CVNode Manager’s request. In general, provided that a node (not necessarily CVNode Manager) is compliant with the types of messages supported by Kenning, the adoption of the benchmarking/evaluation flow is just a matter of listening to the right topics/services.

These additions provide a means for evaluating the quality of models, as well as complete ROS 2 systems with Kenning, against regular and time-constrained testing flows described further down.

By default, Kenning integrates with ROS 2 applications through a ROS 2 action that passes:

- Input data from Kenning to CVNode Manager

- Results from a tested node/application to CVNode Manager and Kenning

- Execution statistics (inference time) from CVNode Manager to Kenning.
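The exchange listed above boils down to forwarding a sample to the tested node, collecting its predictions and measuring how long the call took. The sketch below illustrates only that idea in plain Python; `EvaluationResult` and `evaluate_sample` are hypothetical names, and the real flow runs over a ROS 2 action rather than a direct function call.

```python
import time
from dataclasses import dataclass
from typing import Any, Callable


@dataclass
class EvaluationResult:
    """Hypothetical container for what is reported back for one sample."""

    predictions: Any
    inference_time_s: float


def evaluate_sample(process: Callable[[Any], Any], sample: Any) -> EvaluationResult:
    """Run `process` on one sample and record the wall-clock time it took."""
    start = time.perf_counter()
    predictions = process(sample)
    elapsed = time.perf_counter() - start
    return EvaluationResult(predictions=predictions, inference_time_s=elapsed)
```

Here `process` stands in for whatever the tested node does with its input, for example a call into a vision node's processing routine.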

We can also attach the GUI Node described earlier to visualize predictions in the loop.

Measuring real-life model performance and quality

When it comes to testing models or entire applications for deployment purposes, we can come up with two basic scenarios:

“Synthetic” environment

This is a typical scenario where data is delivered only when the application is ready to process it. This scenario depicts how our application or model would behave in the “perfect” environment with the model able to process every single request (e.g. when it runs on a high-performance machine). It can be considered a determinant of quality provided that our hardware is capable of processing any incoming requests.

“Real-world” environment

In a “real-world” environment, each input sample is delivered at a certain rate, independent of model processing; this scenario is closer to what usually happens in production. If the input data rate is high, and the application fails to provide responses within the assigned time window, then we either skip the response for a given input sample, provide an earlier response or an approximation of the potential response based on past responses. In cases where changes in incoming inputs are slow-paced (fast delivery of samples with a slowly or rarely changing environment), reusing past responses may be sufficient and will provide credible results. However, if the contents of incoming samples are highly dynamic, then even approximated responses may not be able to make up for the sluggishness of the model. In such situations, using a faster model, even with noticeably lower quality compared to a slower model, may result in better performance.
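To make the trade-off more concrete, the following sketch simulates frames arriving at a fixed period while a model with a fixed inference time processes them. It is a simplified illustration under stated assumptions, not Kenning's implementation: the model handles one frame at a time, frames that arrive while it is busy are dropped, and the response for each frame is the most recent prediction finished before the next frame arrives. The function names are hypothetical.

```python
from typing import List, Optional


def simulate_real_world(
    num_frames: int, period_ms: int, inference_ms: int
) -> List[Optional[int]]:
    """For each frame, return the index of the frame whose prediction is
    used as its response, or None if no prediction is available yet."""
    busy_until = 0  # time at which the model becomes idle again
    completed = []  # (finish_time, frame_index) of processed frames
    answers: List[Optional[int]] = []
    for i in range(num_frames):
        arrival = i * period_ms
        deadline = arrival + period_ms  # response is due when the next frame arrives
        if arrival >= busy_until:
            # The model is idle, so it starts processing this frame.
            busy_until = arrival + inference_ms
            completed.append((busy_until, i))
        # Use the most recent prediction that is finished by the deadline.
        ready = [idx for finish, idx in completed if finish <= deadline]
        answers.append(ready[-1] if ready else None)
    return answers


def fresh_ratio(answers: List[Optional[int]]) -> float:
    """Fraction of frames answered with a prediction made for that same frame."""
    return sum(1 for i, a in enumerate(answers) if a == i) / len(answers)
```

With a 100 ms period, a model that needs 50 ms answers every frame with its own prediction, while a model that needs 250 ms gives `[None, None, 0, 0, 0, 3]` for six frames: every response is either missing or based on an older frame.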

Demo: Real-time ROS 2 processing and evaluation with Kenning

Using the tutorial below, you will be able to run inference testing of the YOLACT instance segmentation model executed on the Lindenthal dataset on a CPU in a “real-world” scenario using two runtimes: ONNX Runtime and TVM.

At the end of the demo, an inference testing report is constructed comparing the models for performance and accuracy.

Environment preparation

Note that this particular demo requires an NVIDIA GPU and driver as it uses the NVIDIA Container Toolkit.

To prepare the environment, you need to create a workspace directory, download the demo-helper script and run workspace preparation:

mkdir workspace && cd workspace

wget https://raw.githubusercontent.com/antmicro/ros2-vision-node-base/main/examples/demo-helper

chmod +x ./demo-helper

./demo-helper prepare-workspace

From this point, start the Docker image with the GUI enabled using:

./demo-helper enter-docker

And in the container, source the environment:

source ./demo-helper source-workspace

Running the ONNX model

The easiest runtime to use for the model is ONNX Runtime, since the model does not require any modifications:

ros2 launch cvnode_base yolact_kenning_launch.py \
    backend:=onnxruntime \
    model_path:=./models/yolact-lindenthal.onnx \
    measurements:=onnxruntime.json \
    report_path:=onnxruntime/report.md

To check the quality of a model in a scenario with time constraints, we can use the scenario and inference_timeout_ms arguments. The former argument specifies whether we want to pick a real_world_first or real_world_last scenario, and the latter argument determines the period between consecutive frames.

An example call looks as follows:

ros2 launch cvnode_base yolact_kenning_launch.py \
    backend:=onnxruntime \
    model_path:=./models/yolact-lindenthal.onnx \
    measurements:=onnxruntime-rt-300.json \
    report_path:=onnxruntime-rt-300/report.md \
    scenario:=real_world_last \
    inference_timeout_ms:=300
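When comparing several time constraints, it can be convenient to generate such calls instead of typing them by hand. The helper below is a small sketch that only assembles the command line from the launch arguments shown above; the function name `build_launch_command` and the timeout values 100 and 500 are illustrative assumptions, and the output file naming simply follows the `onnxruntime-rt-300` pattern from the example.

```python
import shlex
from typing import List


def build_launch_command(
    backend: str, model_path: str, scenario: str, timeout_ms: int
) -> List[str]:
    """Assemble a `ros2 launch` call for one time-constrained run."""
    tag = f"{backend}-rt-{timeout_ms}"  # e.g. onnxruntime-rt-300
    return [
        "ros2", "launch", "cvnode_base", "yolact_kenning_launch.py",
        f"backend:={backend}",
        f"model_path:={model_path}",
        f"measurements:={tag}.json",
        f"report_path:={tag}/report.md",
        f"scenario:={scenario}",
        f"inference_timeout_ms:={timeout_ms}",
    ]


if __name__ == "__main__":
    # Print one command per timeout; the values other than 300 are examples.
    for timeout_ms in (100, 300, 500):
        cmd = build_launch_command(
            "onnxruntime",
            "./models/yolact-lindenthal.onnx",
            "real_world_last",
            timeout_ms,
        )
        print(shlex.join(cmd))
```

The returned list can be passed directly to `subprocess.run` inside the prepared container, or printed and pasted into the shell.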

Compiling the model for TVM

Models can also be compiled ahead of time for a specific runtime.

For example, the model can be compiled for TVM by running:

kenning optimize --json-cfg src/vision_node_base/examples/config/yolact-tvm-lindenthal.json

The built model will be placed in the build/yolact.so path.

It can then be tested with:

ros2 launch cvnode_base yolact_kenning_launch.py \
    backend:=tvm \
    model_path:=./build/yolact.so \
    measurements:=tvm.json \
    report_path:=tvm/report.md

Just as above, we can introduce the scenario and inference_timeout_ms arguments to this call.

Comparison of execution

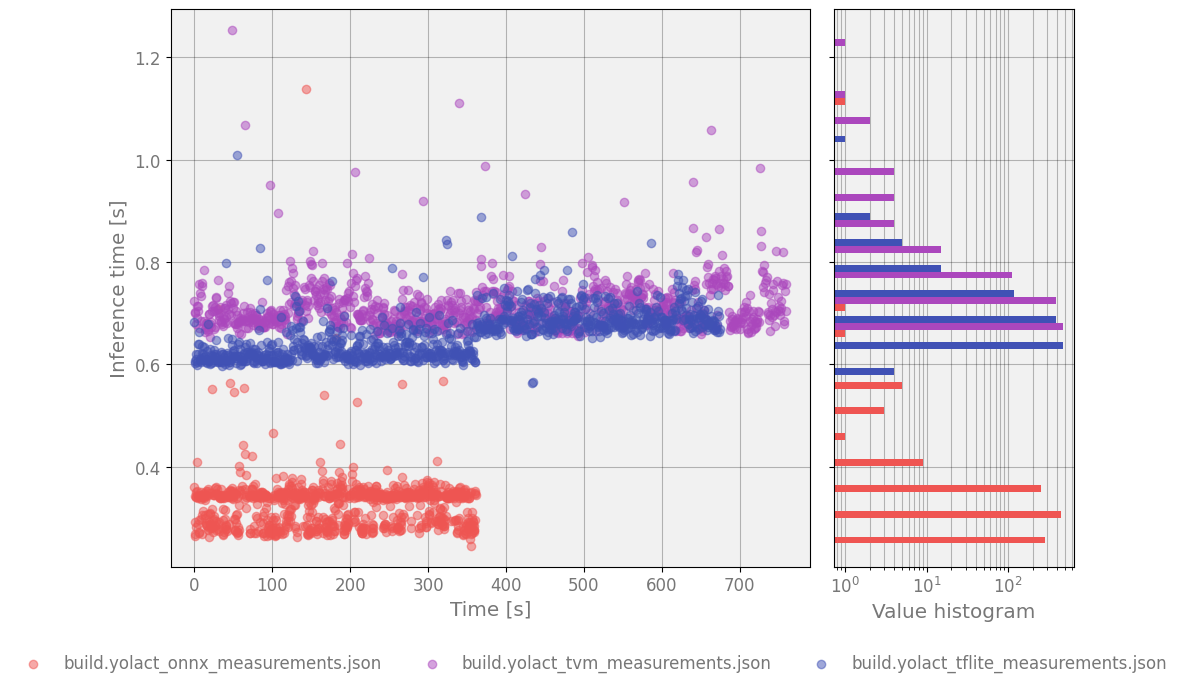

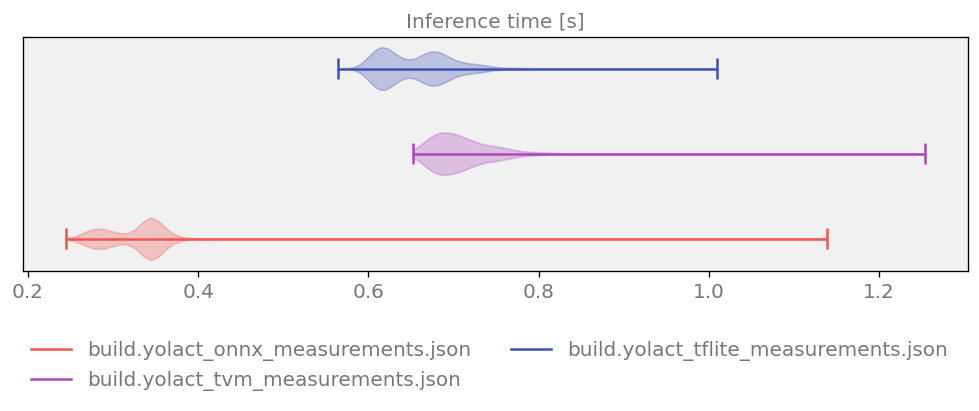

You can compare results for different runtimes using:

kenning report --measurements \
    onnxruntime.json \
    tvm.json \
    --report-name "YOLACT comparison report" \
    --report-path comparison-result/yolact/report.md \
    --report-types performance detection \
    --to-html comparison-result-html

This will render a comparison report under comparison-result-html, giving access to metrics such as inference time, inference time over time and recall-precision curves.

Adapting Kenning for existing projects

To connect Kenning to existing projects, in many cases you can simply attach it using ROS 2 actions implemented in Kenning’s ROS2Protocol, as mentioned above. To adapt the messages received from the existing project to your needs, use Kenning’s DataConverter class, which maps predictions from external tools to Kenning’s representation of data. In the demonstration above, we used ROS2SegmentationDataConverter, which converts ROS 2 instance segmentation messages to Kenning’s SegmObject objects and vice versa.
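The idea of a two-way converter can be illustrated with a short, self-contained sketch. It does not use Kenning's actual DataConverter interface or the real ROS 2 message definitions: `InternalObject`, `SegmentationConverterSketch`, the method names and the dictionary layout of the external message are all hypothetical and serve only to show the mapping in both directions.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass
class InternalObject:
    """Hypothetical internal representation (not Kenning's SegmObject)."""

    class_name: str
    score: float
    box: Tuple[float, float, float, float]  # (xmin, ymin, xmax, ymax)


class SegmentationConverterSketch:
    """Maps a hypothetical external message to internal objects and back."""

    def __init__(self, class_names: List[str]):
        self.class_names = class_names

    def to_internal(self, message: Dict) -> List[InternalObject]:
        # The external message stores parallel lists, one entry per object.
        return [
            InternalObject(self.class_names[class_id], score, tuple(box))
            for class_id, score, box in zip(
                message["class_ids"], message["scores"], message["boxes"]
            )
        ]

    def to_external(self, objects: List[InternalObject]) -> Dict:
        # Rebuild the parallel-list layout expected by the external tool.
        return {
            "class_ids": [self.class_names.index(o.class_name) for o in objects],
            "scores": [o.score for o in objects],
            "boxes": [list(o.box) for o in objects],
        }
```

Keeping both directions in one class makes it easy to check that a message survives a round trip unchanged, which is a useful sanity test when adapting an existing project.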

Other models of communication (services, topics) can be introduced at the RuntimeProtocol level, which makes it fairly easy to add tests for time-constrained image processing pipelines to existing applications.

End-to-end evaluation of ROS 2 nodes and solutions

ROS 2 is the go-to open source solution for controlling and monitoring distributed, multi-node systems, and Antmicro is continuing the work to further integrate the framework with its open source edge AI toolkit.

In the future, we plan on improving CVNode Manager to e.g. load multiple models to solve a single problem and then produce a final prediction using aggregated results based on a certain policy (e.g. weighted boxes fusion). We also want to further simplify the process of attaching Kenning to existing applications for benchmarking/evaluation purposes, e.g. by supporting more communication models.

If you are interested in expanding your existing computer vision AI setups or building transparent, thoroughly tested, scalable solutions with full control over the entire stack, reach out to Antmicro at contact@antmicro.com to discuss your project’s needs.