Capturing and processing signals in machine vision systems inevitably involves video latency, which is a natural consequence of the multi-step data acquisition and transformation process. In real-time applications, minimizing latency is a critical issue that must be addressed to ensure proper system operation, good user experience, and, in some cases, even safety.

In the case of user-operated systems, such as HUD or AR/XR, this latency can be defined as the time between the moment a real-life image is projected onto the camera sensor and the moment its representation is shown to the user on a display, the so-called photon-to-photon latency. For autonomous machine vision systems, e.g. those involving environment mapping, the equivalent is camera-to-decision latency, i.e. the time between the capture of a real-life image and obtaining a logical representation of it that can be acted upon.

In mobile vision systems, employed e.g. for object detection, spatial awareness, navigation or monitoring, the latency of the image processing in relation to camera movement also comes into play. This factor, called motion-to-photon latency, can also be measured and minimized.

In this article we present an open source-driven methodology for evaluating video latency in machine vision systems such as UAVs, autonomous cars, industrial robots or HMI systems such as AR/XR headsets. Using our recently released open hardware Video Latency Testing Probe and LED Panel Board, we will show how this approach can be applied in various training and assessment scenarios.

Open hardware video latency testing setup

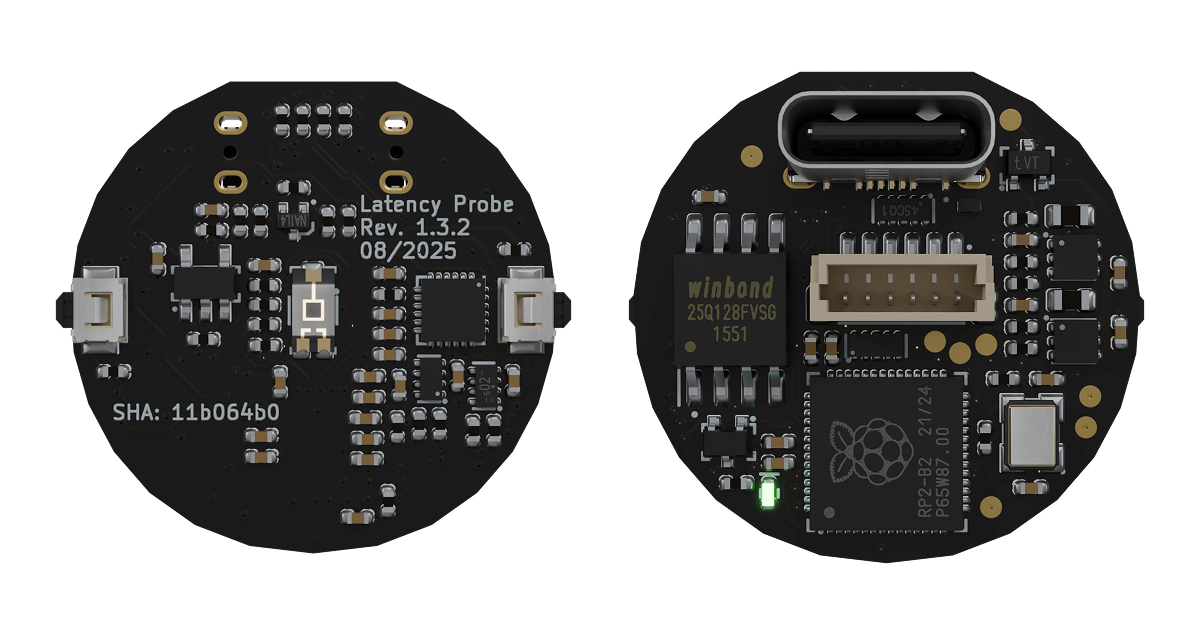

The Video Latency Testing Probe was designed for latency testing of video systems that combine cameras, displays and light sources. Its main components are an MCU, light sensors and an inertial measurement unit.

The MCU can be programmed with firmware that reacts to the light pulses detected by the sensors, measures the time elapsed between pulses, collects the readouts and passes them to a PC. By also using the IMU (inertial measurement unit) readouts, the probe can be mounted on a platform that moves relative to the light sources, making it possible to measure motion-to-photon latency. Additionally, thanks to its small size and optimized mechanical outline, the probe can be installed directly in a DUT (device under test).
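To illustrate the kind of timing logic such firmware performs, the sketch below reimplements it on the host side in Python. It assumes, hypothetically, that the raw readouts of two light sensors are available as lists sampled at a fixed rate, one sensor facing a reference light source and one facing the DUT. The function names, thresholds and sample rate are illustrative only and are not part of the probe's actual firmware.

```python
from typing import List, Sequence


def rising_edges(samples: Sequence[float], high: float, low: float) -> List[int]:
    """Return indices where the signal rises to `high` after having dropped to `low`.

    Two thresholds (hysteresis) keep noise around a single threshold from being
    reported as several pulses. The signal must be seen at or below `low` first,
    so a pulse already in progress when sampling starts is ignored.
    """
    edges: List[int] = []
    armed = False
    for i, value in enumerate(samples):
        if armed and value >= high:
            edges.append(i)
            armed = False
        elif not armed and value <= low:
            armed = True
    return edges


def pulse_delays_us(reference: Sequence[float], measured: Sequence[float],
                    sample_rate_hz: float, high: float, low: float) -> List[float]:
    """Pair each reference pulse with the next pulse on the measured channel
    and return the elapsed time between them in microseconds."""
    ref_edges = rising_edges(reference, high, low)
    dut_edges = rising_edges(measured, high, low)
    delays: List[float] = []
    j = 0
    for r in ref_edges:
        # Skip measured pulses that happened before this reference pulse.
        while j < len(dut_edges) and dut_edges[j] < r:
            j += 1
        if j == len(dut_edges):
            break  # no matching pulse left on the measured channel
        delays.append((dut_edges[j] - r) * 1e6 / sample_rate_hz)
        j += 1
    return delays
```

For example, at a 10 kHz sample rate, two traces whose pulses start at samples 2 and 10 (reference) and 5 and 14 (measured) yield `[300.0, 400.0]` microseconds. The simple pairing assumes the latency is shorter than the interval between reference pulses.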

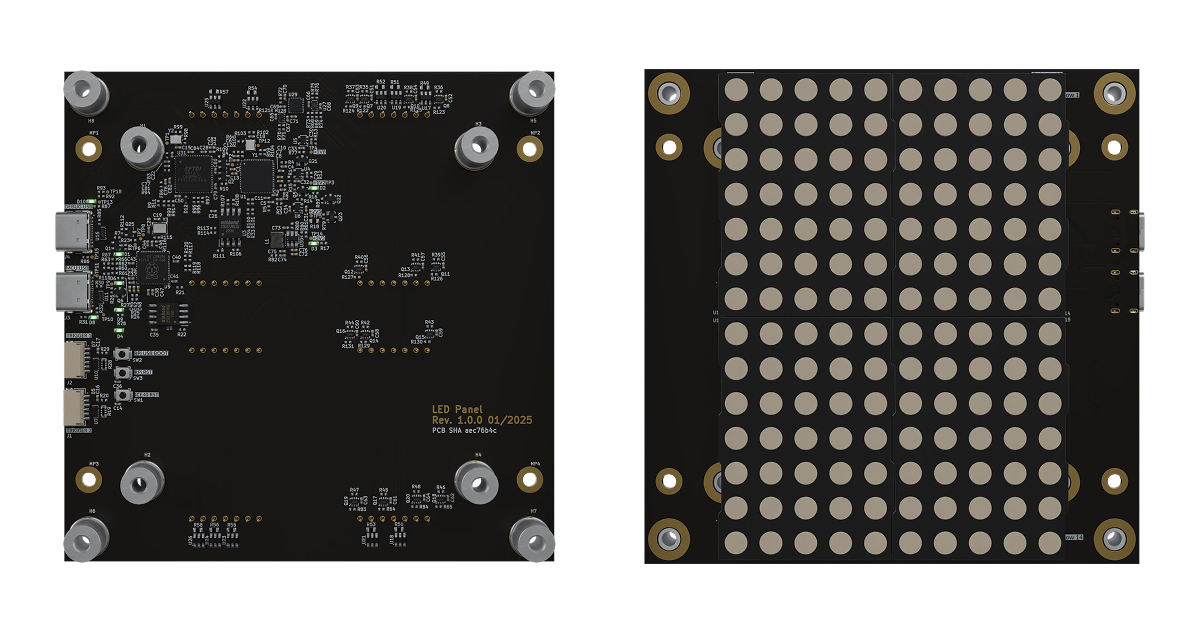

The second platform in Antmicro’s video latency testing setup is the Programmable LED Panel Board. Designed for photon-to-photon testing scenarios, it features a 10x14 matrix of individually controllable LEDs that present test patterns with precise timing. The board offers two options for LED control: a Raspberry Pi RP2040 MCU for simple scenarios, or a Lattice iCE40UP5K FPGA for scenarios requiring fast sweeping of the LED matrix. The custom patterns displayed by the LED Panel Board can also be used for training and evaluating devices that use machine vision for environment mapping, such as XR goggles or mobile robots.

Both the Programmable LED Panel and the Video Latency Testing Probe are available on Antmicro’s System Designer portal, along with detailed component lists and HBOMs.

Video latency measurement and estimation become key when dealing with complex systems and devices that combine video input with on-board video processing. In typical integration scenarios, latency measurement makes it possible to optimize the video processing pipeline, while Hardware-In-the-Loop testing enables tracking of potential regressions. Gathering enough data on how latency changes under different conditions (exposure time, ambient light intensity, ISO sensitivity) makes it possible to introduce predictive algorithms that further reduce the processing latency and minimize the reaction time of the system as a whole.
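As a hypothetical illustration of the simplest form such a predictive model could take, the sketch below fits a straight line to latency measurements taken at different exposure times using ordinary least squares, so that latency at an untested exposure setting can be estimated. The linear relationship and the sample values are assumptions made for illustration; a real pipeline may need more inputs (ambient light, ISO sensitivity) or a non-linear model.

```python
from statistics import fmean
from typing import Sequence, Tuple


def fit_latency_model(exposure_ms: Sequence[float],
                      latency_ms: Sequence[float]) -> Tuple[float, float]:
    """Ordinary least-squares fit of latency = slope * exposure + intercept."""
    if len(exposure_ms) != len(latency_ms) or len(exposure_ms) < 2:
        raise ValueError("need at least two paired measurements")
    mean_x, mean_y = fmean(exposure_ms), fmean(latency_ms)
    sxx = sum((x - mean_x) ** 2 for x in exposure_ms)
    if sxx == 0:
        raise ValueError("exposure values must not all be equal")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(exposure_ms, latency_ms))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def predict_latency_ms(model: Tuple[float, float], exposure_ms: float) -> float:
    """Predicted latency for a given exposure time under the fitted model."""
    slope, intercept = model
    return slope * exposure_ms + intercept
```

With made-up measurements of 30.5, 31.0, 32.0 and 34.0 ms at exposures of 1, 2, 4 and 8 ms, the fit gives a slope of 0.5 and an intercept of 30 ms, predicting 33 ms at a 6 ms exposure.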

Combining video latency measurement with audio latency measurement (which we described in more detail in a recent article) makes it possible to develop and benchmark complete devices that integrate both audio and video for advanced HMI applications, such as XR/AR headsets and personal assistants.

Photon-to-photon testing

The idea behind photon-to-photon measurement is to display a simple yet precisely timed pattern on the LED panel, for example an LED-chasing sequence that turns on subsequent LEDs on the panel one after another, each after a defined time interval. The DUT captures an image of the LED panel with its camera, processes it and displays it on its output screen. The measurement setup also includes a second camera (the measurement camera), preferably with a very short exposure time, which faces the DUT screen and is triggered synchronously with the LED panel pattern changes.
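The timing of such an LED-chasing pattern can be sketched as follows. The sketch assumes that the 10x14 matrix is addressed row by row as 10 rows of 14 LEDs, that the chase starts at t = 0 and wraps around after the last LED, and that time is kept in integer microseconds. All names are hypothetical and do not describe the LED Panel Board's actual firmware.

```python
from typing import Tuple

ROWS, COLS = 10, 14          # assumed orientation of the 10x14 LED matrix
NUM_LEDS = ROWS * COLS       # 140 LEDs in one chase cycle


def lit_led(t_us: int, interval_us: int) -> int:
    """Index of the LED lit at time t_us since the chase started."""
    return (t_us // interval_us) % NUM_LEDS


def led_position(index: int) -> Tuple[int, int]:
    """(row, column) of an LED index, assuming row-major ordering."""
    return divmod(index % NUM_LEDS, COLS)


def switch_on_time_us(index: int, cycle: int, interval_us: int) -> int:
    """Time at which LED `index` is switched on during chase cycle `cycle`."""
    return (cycle * NUM_LEDS + index) * interval_us
```

With a 1 ms interval, `lit_led(25_500, 1_000)` returns 25, which sits at row 1, column 11. One full cycle then lasts 140 ms, and this is a design constraint: a latency longer than one cycle cannot be distinguished from a shorter one. The interval should therefore be chosen so that `NUM_LEDS * interval_us` exceeds the longest expected latency, while shorter intervals give finer resolution.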

The frames captured by the measurement camera can then be analyzed with image processing software (such as OpenCV) to detect which parts of the LED matrix image were lit. By comparing the frame numbers, the framerate of the measurement camera and the interval between switching subsequent LEDs, it is possible to construct a repeatable, scriptable and autonomous algorithm for measuring the DUT’s video latency. Antmicro also develops such algorithms for its customers, tailored to their use cases.
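A minimal sketch of the last two steps of such an algorithm is shown below. It assumes that an earlier step (for example using OpenCV) has already reduced each measurement frame to a grid of mean brightness values, one per LED position as seen on the DUT screen. It further assumes that the LED chase and frame 0 of the measurement camera both start at t = 0, and that the latency is shorter than one chase cycle. The function names are hypothetical.

```python
from typing import Optional, Sequence

ROWS, COLS = 10, 14      # assumed LED matrix layout, row-major
NUM_LEDS = ROWS * COLS


def detect_lit_led(cells: Sequence[Sequence[float]], threshold: float) -> Optional[int]:
    """Row-major index of the brightest cell above `threshold`, or None if all are dark."""
    best_index: Optional[int] = None
    best_value = threshold
    for r, row in enumerate(cells):
        for c, value in enumerate(row):
            if value > best_value:
                best_index, best_value = r * len(row) + c, value
    return best_index


def latency_us(frame_number: int, frame_period_us: int,
               led_index: int, interval_us: int) -> int:
    """Time between an LED switching on and the frame showing it on the DUT screen.

    The modulo unwraps the chase cycle; the result is only valid if the true
    latency is shorter than one full cycle (NUM_LEDS * interval_us).
    """
    cycle_us = NUM_LEDS * interval_us
    frame_time_us = frame_number * frame_period_us
    led_on_us = led_index * interval_us
    return (frame_time_us - led_on_us) % cycle_us
```

For instance, with a 1 ms LED interval and a measurement camera running at an assumed 1000 fps, frame 190 showing LED 2 gives 48 000 µs: LED 2 of the second cycle was switched on at 142 ms and appeared on the DUT screen at 190 ms. A single reading is only as precise as the LED interval and frame period, so results from many frames would typically be aggregated.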

This approach makes the latency measurement setup easy to adopt in Hardware-In-the-Loop testing scenarios, where the DUT can be automatically tested for regressions in a flexible CI environment.
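In such a CI environment, the latencies measured in each run could be compared against a stored baseline, failing the job when they drift too far. The sketch below assumes a hypothetical per-device baseline and tolerance in milliseconds. It uses the median so that a single outlier frame does not fail the check; the thresholds are placeholders to be tuned per device.

```python
from statistics import median
from typing import Sequence, Tuple


def check_latency_regression(samples_ms: Sequence[float], baseline_ms: float,
                             tolerance_ms: float) -> Tuple[bool, float]:
    """Return (passed, median latency) for one Hardware-In-the-Loop test run.

    The run passes if the median latency does not exceed the baseline by more
    than the allowed tolerance.
    """
    if not samples_ms:
        raise ValueError("no latency samples collected")
    observed = median(samples_ms)
    return observed <= baseline_ms + tolerance_ms, observed
```

A CI script could call this after each measurement run and exit with a non-zero status (e.g. `sys.exit(1)`) when `passed` is false, which CI systems generally treat as a failed job.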

Motion-to-photon testing

Motion-to-photon latency measurement scenarios require the DUT to be placed on a moving platform whose instantaneous position can be read precisely and with low latency. In Antmicro’s setup, this is done with the IMU on the Video Latency Testing Probe. The DUT estimates its own position, typically with an algorithm that fuses the video input with readings from its own inertial sensors, and shows a representation of the estimated position on its display, encoded as screen brightness. The Video Latency Testing Probe features a low-latency photodiode which can read this information. By comparing the phase shift between the readings of the probe’s IMU and photodiode, we can estimate the DUT’s motion-to-photon latency.
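One common way to estimate such a phase shift is cross-correlation: the photodiode signal is shifted against the IMU signal, and the shift giving the strongest match is taken as the latency. The sketch below is a generic illustration of this technique, not necessarily the one used in Antmicro's setup. It assumes that both signals have already been resampled to a common rate and start at the same instant, that brightness increases with the encoded position, and that the search range `max_lag` is shorter than one period of the platform motion, so that only one correlation peak falls inside it.

```python
from statistics import fmean
from typing import Sequence


def motion_to_photon_latency_us(imu: Sequence[float], photo: Sequence[float],
                                sample_rate_hz: float, max_lag: int) -> float:
    """Estimate how far (in microseconds) the photodiode signal lags the IMU signal.

    Both inputs must be sampled at `sample_rate_hz` and start at the same time.
    Lags 0..max_lag samples are scored over a fixed-length window, so every lag
    is compared against the same IMU samples.
    """
    n = len(imu)
    if len(photo) != n or not 0 < max_lag < n:
        raise ValueError("signals must have equal length greater than max_lag")
    imu_mean, photo_mean = fmean(imu), fmean(photo)
    a = [x - imu_mean for x in imu]      # remove DC offsets so only the
    b = [y - photo_mean for y in photo]  # shape of the signals is compared
    window = n - max_lag
    best_lag, best_score = 0, float("-inf")
    for lag in range(max_lag + 1):
        # Cross-correlation of the IMU window with the photodiode shifted by `lag`.
        score = sum(a[i] * b[i + lag] for i in range(window))
        if score > best_score:
            best_lag, best_score = lag, score
    return best_lag * 1e6 / sample_rate_hz
```

The resolution of this estimate is one sample period (1 ms at a 1 kHz sample rate). Finer accuracy would require interpolating around the correlation peak or sampling faster.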

Develop full-stack machine vision systems with Antmicro

Antmicro offers comprehensive services for building full-stack machine vision systems that typically integrate high-resolution and high-framerate imagers, high-bandwidth video transmission and FPGA + GPU + CPU driven processing. The high-speed image capture is often combined with custom AI solutions for autonomous and real-time processing. We implement such technologies for high-end medical devices, telesurgery, smart city infrastructure, mobile robots, drones, cube satellites or custom-made scientific equipment.

If you’re building a next-gen device and need help with selecting the optimal video processing solution or integrating it with your system, don’t hesitate to reach out to us at contact@antmicro.com.