TensorFlow Lite in Zephyr on LiteX/VexRiscv

Published:

Topics: Open source tools, Open OS, Edge AI, Open FPGA

While much of the focus of recent AI developments has been on cloud-centric implementations, there are many use cases where AI algorithms have to run on small, resource-constrained devices. Google’s TensorFlow Lite, a smaller sibling of one of the world’s most popular machine learning frameworks, is focused on exactly that: running neural network inference on resource-constrained devices. A more recent but very exciting effort, led by Pete Warden’s team at Google in collaboration with partners like ARM, Ambiq Micro, SparkFun and Antmicro, aims to bring TF Lite to microcontrollers, with sub-milliwatt power envelopes and sub-dollar unit costs for an AI-enabled node.

Antmicro’s Renode and Google’s TF Lite teams have been collaborating to use Renode for demonstration and testing of the ML framework, and to bring it to new frontiers with real industrial use cases. Last year, we helped port the framework to RISC-V, the open ISA which encourages collaborative, software-driven hardware innovation. For this year’s RISC-V Summit, we are announcing the next step in making TF Lite play well with existing standards: TF Lite Micro for the Zephyr RTOS on LiteX/VexRiscv.

Why are LiteX and FPGAs a good platform for TF Lite?

The recently released TensorFlow Lite port to Zephyr for LiteX/VexRiscv is a proof-of-concept implementation of TF Lite running on a small soft CPU-based system in an FPGA.

FPGA devices are often used to accelerate parallel data processing, including deep neural network inference. Their reconfigurability is a good match for the changing needs of ML, where advances in the software space happen too fast for traditional hardware approaches to adapt: new hardware configurations can be deployed to existing devices to keep up with ever-evolving ML applications. With the availability of small FPGAs that are freely programmable with open source tooling, even extremely low-power applications, like the ones encouraged by TF Lite for MCUs, can be targeted.

On the other hand, real neural network inference applications do require some sequential processing of data and top-level code controlling the flow. In the FPGA world, the usual way to solve this problem is to implement a soft CPU core, and that’s what we have done for dozens of applications, using a standardized soft SoC solution called LiteX together with VexRiscv, an excellent RISC-V CPU implementation that plays well with it. LiteX comes with wide FPGA platform support that we actively help develop, and a variety of I/O options, starting with UART, SPI and I2C, and extending to Ethernet, PCIe, USB and SATA for larger systems. It has been our soft SoC of choice, and we have implemented support for it in our open source simulation framework, Renode, as well as in both Zephyr and Linux.

In the TF Lite context, LiteX combines the best of both worlds: it enables a system designer to easily create a soft CPU-based SoC in an FPGA and then focus on extending it with custom, ML-oriented blocks.

Why Zephyr?

Zephyr is a small but powerful RTOS capable of running on resource-constrained devices. POSIX compatibility allows easy integration of existing code bases, and baked-in vendor neutrality ensures the RTOS will work well with RISC-V’s open and collaborative nature. Throw in clear licensing, powerful tooling and the most dynamic growth in the RTOS space, and you have a natural winner.

Antmicro is actively developing Zephyr support for RISC-V in general and LiteX/VexRiscv in particular, so porting TensorFlow Lite to Zephyr and running it on a LiteX/VexRiscv system was a natural choice.

Demos

Two demos have been prepared to present the functionality of the system:

- A simple “Hello World” TensorFlow Lite application which prints sine function values on the serial terminal,

- A “Magic Wand” application which recognizes different shapes “drawn” in the air with the board; the demo collects data from the accelerometer and feeds it to a neural network performing gesture recognition.
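To give a rough idea of what the “Hello World” demo does, the sketch below sweeps an input value over one period and prints the corresponding sine value for each step. It is only an illustration under stated assumptions: `stand_in_model` replaces the trained network with `math.sin`, and the names `X_RANGE`, `INFERENCES_PER_CYCLE` and `run_cycle`, as well as the output format, are hypothetical and not taken from the demo sources.

```python
import math

# Assumed values for illustration only; the real demo's constants may differ.
X_RANGE = 2.0 * math.pi      # sweep one full sine period
INFERENCES_PER_CYCLE = 20    # hypothetical number of steps per period


def stand_in_model(x):
    """Placeholder for the neural network; the demo runs a trained model here."""
    return math.sin(x)


def run_cycle(steps=INFERENCES_PER_CYCLE):
    """Return a list of (x, y) pairs for one sweep over [0, X_RANGE)."""
    results = []
    for i in range(steps):
        x = (i / steps) * X_RANGE
        results.append((x, stand_in_model(x)))
    return results


if __name__ == "__main__":
    # Print the values the way a serial console might show them (assumed format).
    for x, y in run_cycle():
        print(f"x_value: {x:.6f}, y_value: {y:.6f}")
```

On the actual device, the placeholder function would be replaced by an inference call into the TF Lite interpreter, and the output would go to the serial terminal.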

The system is composed of three main components, all of which have been released to public repositories on Antmicro’s GitHub.

To make the build process easier, a master repository has been created linking the other repositories as submodules.

To set up the build environment and build the demos, follow the instructions found in the README file located in the master repository.

Running the demos

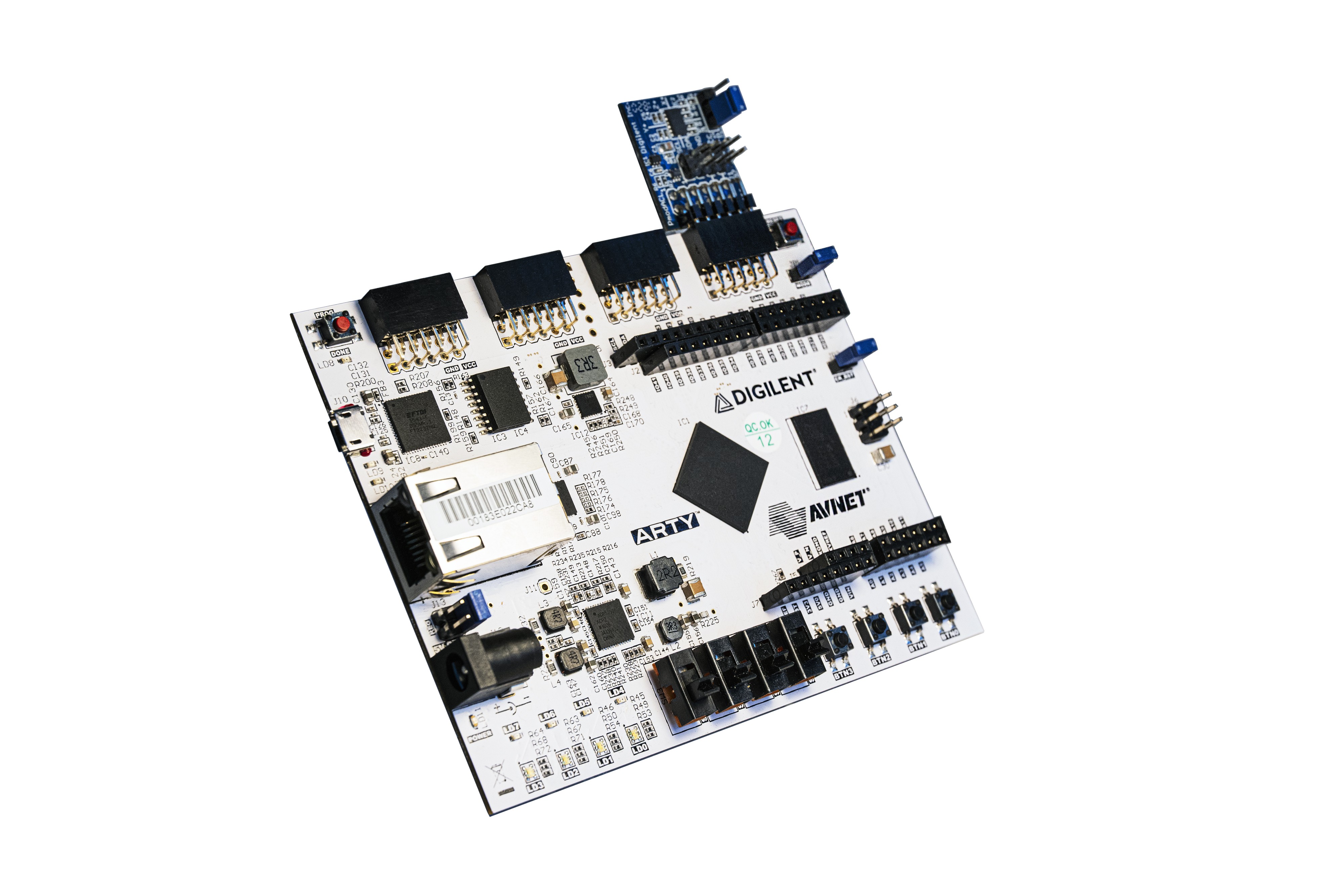

The demos can be run either on hardware or in the Renode simulator. Running on hardware requires a Digilent Arty A7-35T board with a PmodACL accelerometer module connected to the JD port.

The onboard FPGA chip has to be programmed with the gateware bitstream. After the board has been programmed, the LiteX system will boot, and you should see a LiteX BIOS prompt on the serial console. To run the demo, upload the compiled application binary to the device over the serial connection using the litex_term tool.
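As a rough illustration of the upload step, the sketch below assembles a litex_term command line. It rests on several assumptions: that the installed litex_term accepts a `--kernel` option and takes the serial port as a positional argument, that some older versions additionally need `--serial-boot`, and that the binary path and `/dev/ttyUSB1` match your setup. The helper name `litex_term_command` is hypothetical. Check `litex_term --help` and the master repository README for the exact invocation.

```python
import shlex


def litex_term_command(kernel_path, serial_port, legacy_serial_boot=False):
    """Build an argument list for litex_term (assumed CLI layout, see above)."""
    cmd = ["litex_term"]
    if legacy_serial_boot:
        # Assumption: only some older litex_term versions need this flag.
        cmd.append("--serial-boot")
    cmd += ["--kernel", kernel_path, serial_port]
    return cmd


if __name__ == "__main__":
    # Both the binary path and the serial port are examples, not fixed values.
    cmd = litex_term_command("build/zephyr/zephyr.bin", "/dev/ttyUSB1")
    print(shlex.join(cmd))
    # To actually run it: subprocess.run(cmd, check=True)
```

Building the command as a list rather than a single string avoids shell quoting problems if the path contains spaces.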

The application starts as soon as it is uploaded. The “Magic Wand” application will print a welcome message while waiting for the accelerometer data. As soon as a gesture is detected, the application will print the results. See the listing below for an example of the output of the application:

    Got id: 0xe5
    ***** Booting Zephyr OS build v1.7.99-22021-ga6d97078a3e2 *****
    Got accelerometer
    RING:
              *
           *     *
         *         *
        *           *
         *         *
           *     *
              *
    SLOPE:
            *
           *
          *
         *
        *
       *
      *
     * * * * * * * *
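The labels in the listing above are the result of feeding a window of accelerometer samples to the network and picking the most likely gesture. A minimal sketch of that flow follows. Everything in it is an assumption made for illustration: the window length, the score threshold, the label order, the catch-all `NONE` class, and the names `GestureWindow` and `pick_gesture` are hypothetical, and the network itself is left out.

```python
from collections import deque

# All constants below are hypothetical, chosen for illustration only.
WINDOW_SAMPLES = 128                # assumed samples per inference window
LABELS = ("RING", "SLOPE", "NONE")  # assumed class order; "NONE" = no gesture
SCORE_THRESHOLD = 0.8               # assumed minimum score to report a gesture


class GestureWindow:
    """Fixed-length sliding window of (x, y, z) accelerometer samples."""

    def __init__(self, size=WINDOW_SAMPLES):
        self._samples = deque(maxlen=size)

    def push(self, x, y, z):
        # Once the window is full, the oldest sample is dropped automatically.
        self._samples.append((x, y, z))

    def is_full(self):
        return len(self._samples) == self._samples.maxlen

    def flatten(self):
        """Return the samples as one flat list [x0, y0, z0, x1, ...]."""
        return [value for sample in self._samples for value in sample]


def pick_gesture(scores, labels=LABELS, threshold=SCORE_THRESHOLD):
    """Return the best-scoring label, or None if no gesture is confident enough."""
    best = max(range(len(scores)), key=scores.__getitem__)
    if scores[best] < threshold or labels[best] == "NONE":
        return None
    return labels[best]
```

In use, the application would push each new accelerometer reading into the window, run the network on `flatten()` once `is_full()` returns true, and print the shape for whatever label `pick_gesture` returns.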

Running the demo in Renode does not require the physical board or fiddling with gateware. Detailed instructions on how to run the demo applications in Renode can be found in the master repository README file.

Near future plans

The demos present the functionality of the system and prove that TensorFlow Lite can be successfully run in Zephyr on a LiteX system with a VexRiscv CPU. However, additional work needs to follow for the code to be merged upstream. While the FPGA platform definition code, done as part of our earlier efforts, was merged some time ago, Zephyr support for TensorFlow Lite and the Zephyr driver for the accelerometer used in the demo have not yet been added upstream. If you have an application which would benefit from running TensorFlow Lite with Zephyr, make sure you visit our booth at the RISC-V Summit or contact us at contact@antmicro.com.