At Antmicro, building advanced machine vision systems is our daily bread. While customer projects are always case-specific, we invariably strive to build solutions that employ leading-edge computing platforms and, most importantly, are also scalable. This way we make sure that they can be migrated or expanded to next-gen technologies in a software-driven manner, while promoting modularity through the use of open source wherever possible.

Hence, over the years we have built entire families of well-thought-out, flexible camera platforms that can be easily adapted to specific projects and integrated into larger or existing systems by adding extra processing platforms, AI boosters, additional cameras, etc.

One such example is our 3D stereovision camera system, a Linux-driven high-speed hybrid FPGA+GPGPU computing platform for real-time machine vision in industrial environments, which we’re going to take a closer look at in this blog note.

High-speed processing at the very edge

Antmicro’s industrial stereovision platform, sometimes referred to as a 3D scanner, was originally developed as part of the X-MINE project, an innovative mobile mining system for real-time mineral analysis and sorting. The project started in 2017 and, following intensive development, is currently in a pilot phase at several mines across the EU (Sweden, Greece, Bulgaria and Cyprus), where the system is being integrated with on-site mining systems as the final stage of the project, which will be concluded in 2021.

The scanner’s role in the X-MINE system is to determine the size of ore samples and to track their position as they quickly travel on a conveyor belt. The data regarding the objects’ dimensions and location on the belt, combined with an X-ray density map produced by a smart drill core analyzer developed by our Swedish partners at Orexplore, allows the system to recognize chunks of valuable minerals on the fly and sort them out at a pace defined by the mine plant.

Real-time 3D vision processing and endurance in harsh industrial environments were important aspects to keep in mind while developing the device. Contained in an Antmicro-designed IP43-class aluminum enclosure providing both mechanical protection and passive cooling, the platform can be used in challenging environments, of which mining sites are just an example.

Modular design for extensibility and endurance

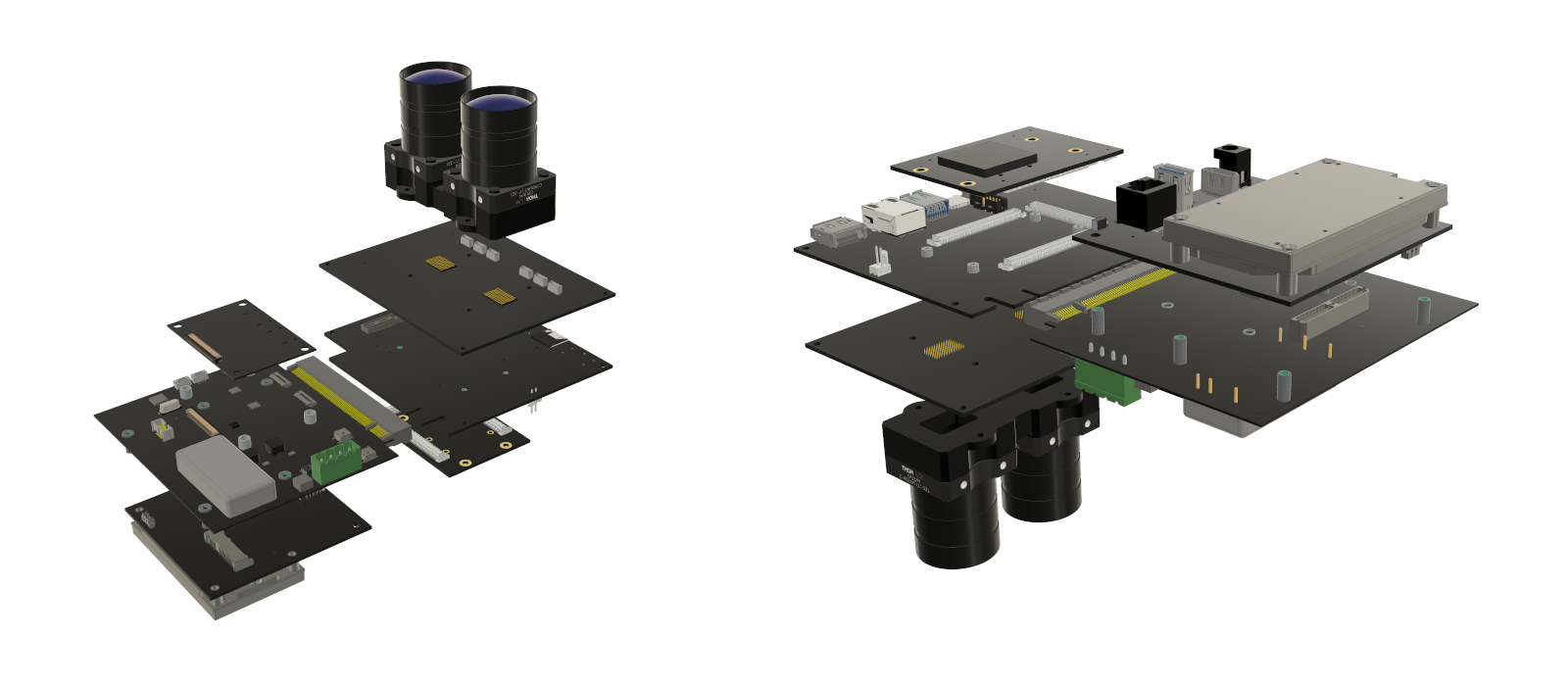

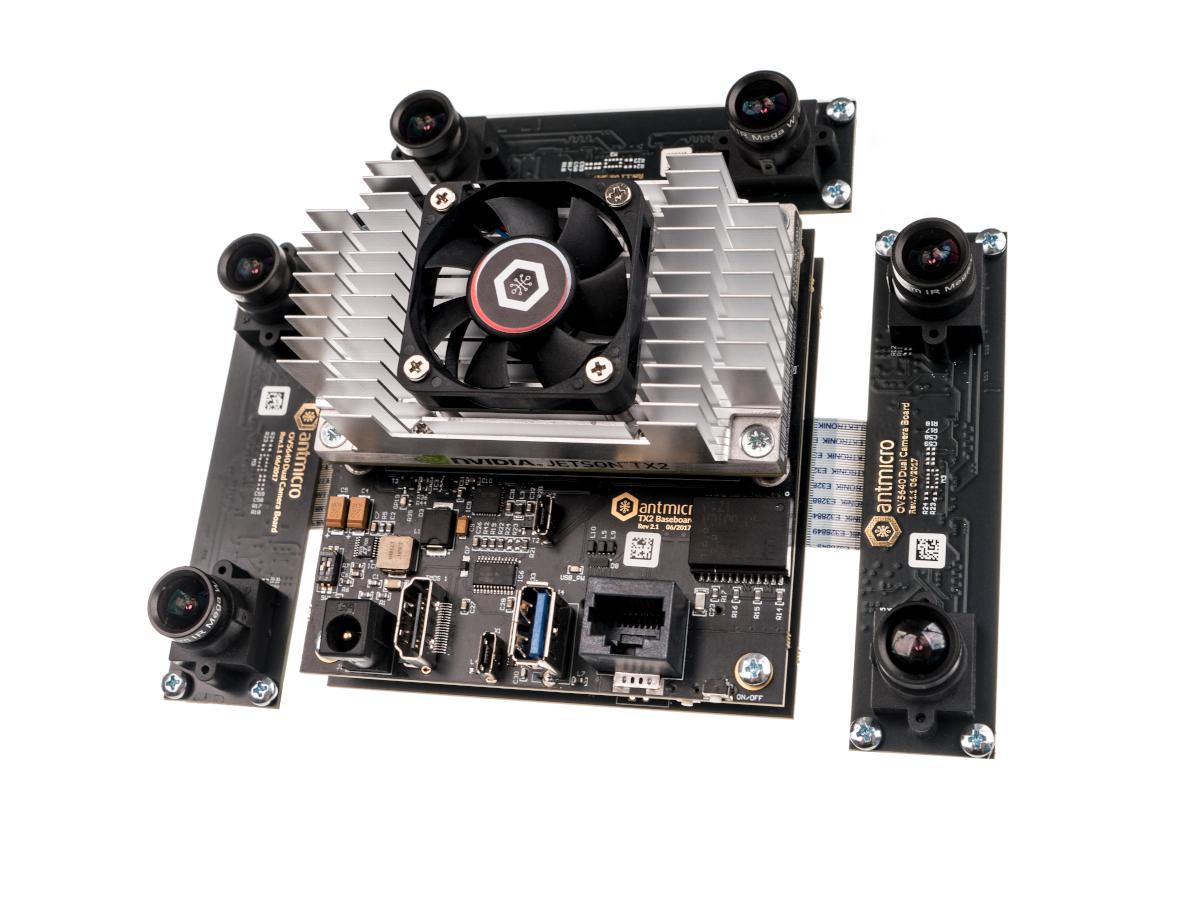

The smart 3D scanner comprises two main parts: a stereovision camera module based on Antmicro’s UltraScale+ Processing Module (an FPGA with a quad-core Cortex-A53 CPU) for high-speed image processing, and our own TX2 Deep Learning baseboard, which handles the AI-supported tracking feature.

The tracking module, originally based on the highly popular NVIDIA Jetson TX2 (featuring a quad-core Cortex-A57 and an NVIDIA GPGPU with 256 CUDA cores), can be upgraded to the later Jetson Xavier or a different Jetson platform to gain extra computing power for on-site AI-assisted tasks. What is more, both computing units can be used simultaneously for pipelined video processing.

The camera features twin 16 mm adjustable liquid lenses. The liquid lens technology involves encapsulating liquid in a flexible cell shaped by what is called the electrowetting effect. This allows precise electronic adjustment of focus without moving any mechanical parts. Just as importantly, liquid lenses are highly resistant to shock and vibration.

From stereovision & AI-assisted tracking to full-blown data fusion

The images from both cameras are pre-processed in real time by our UltraScale+ MPSoC Processing Module, whereas the bulk of the video processing is done on the CUDA-enabled NVIDIA GPGPU. At the very beginning of operation, the UltraScale+ MPSoC module trains the camera sensors by adjusting the timing of the data flow on each data lane, so that the signals arrive at the FPGA in the UltraScale+ MPSoC module simultaneously. Next, the FPGA merges the images from both cameras into one frame. The LVDS signal from the UltraScale+ MPSoC module is then converted into a MIPI CSI signal and passed on to the TX2 module, where the subsequent stages of image processing take place.

The 3D scanner then divides the merged frame back into the two simultaneously captured images, which constitute the stereo input for further processing. The GPGPU on the TX2 module performs image rectification to ensure geometrical alignment of the images, and calculates a depth map of the observed area based on stereovision disparity, which is, in principle, the difference in the position of corresponding pixels between synchronously captured frames from each of the two cameras.
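To make the split-and-disparity idea more tangible, here is a minimal, CPU-only Python sketch. It is not the scanner's CUDA implementation: the side-by-side layout of the merged frame, the simple sum-of-absolute-differences matcher, the function names and the focal length and baseline values are all assumptions made for illustration.

```python
from typing import List, Tuple

Image = List[List[int]]  # grayscale image as rows of pixel intensities


def split_merged_frame(frame: Image) -> Tuple[Image, Image]:
    """Split a merged frame into (left, right).

    Assumption: the two camera images sit side by side in the merged frame.
    """
    width = len(frame[0])
    if width % 2:
        raise ValueError("merged frame width must be even")
    half = width // 2
    return [row[:half] for row in frame], [row[half:] for row in frame]


def row_disparity(left_row: List[int], right_row: List[int], x: int,
                  window: int = 1, max_disp: int = 4) -> int:
    """Disparity of left pixel x, found by minimising the sum of absolute
    differences (SAD) over a small window along the same rectified row."""
    best_disp, best_cost = 0, float("inf")
    lo, hi = x - window, x + window
    for disp in range(max_disp + 1):
        # Skip candidates whose window would fall outside either row.
        if lo - disp < 0 or hi >= len(left_row) or hi - disp >= len(right_row):
            continue
        cost = sum(abs(left_row[i] - right_row[i - disp])
                   for i in range(lo, hi + 1))
        if cost < best_cost:
            best_disp, best_cost = disp, cost
    return best_disp


def disparity_to_depth(disparity_px: float, focal_px: float,
                       baseline_m: float) -> float:
    """Pinhole stereo relation: depth = focal length * baseline / disparity."""
    if disparity_px <= 0:
        return float("inf")  # zero disparity corresponds to a point at infinity
    return focal_px * baseline_m / disparity_px


# One-row toy frame: the bright pixel is at x=5 in the left half, x=3 in the right.
merged = [[0, 0, 0, 0, 0, 9, 0, 0, 0, 0, 0, 9, 0, 0, 0, 0]]
left, right = split_merged_frame(merged)
disp = row_disparity(left[0], right[0], x=5)
# Hypothetical optics: 1000 px focal length, 0.1 m baseline.
depth_m = disparity_to_depth(disp, focal_px=1000.0, baseline_m=0.1)
```

In the toy frame the bright pixel shifts by two pixels between the halves, so the matcher returns a disparity of 2. A production pipeline would run a far more robust matcher on rectified images on the GPU, but the relation between disparity and depth stays the same.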

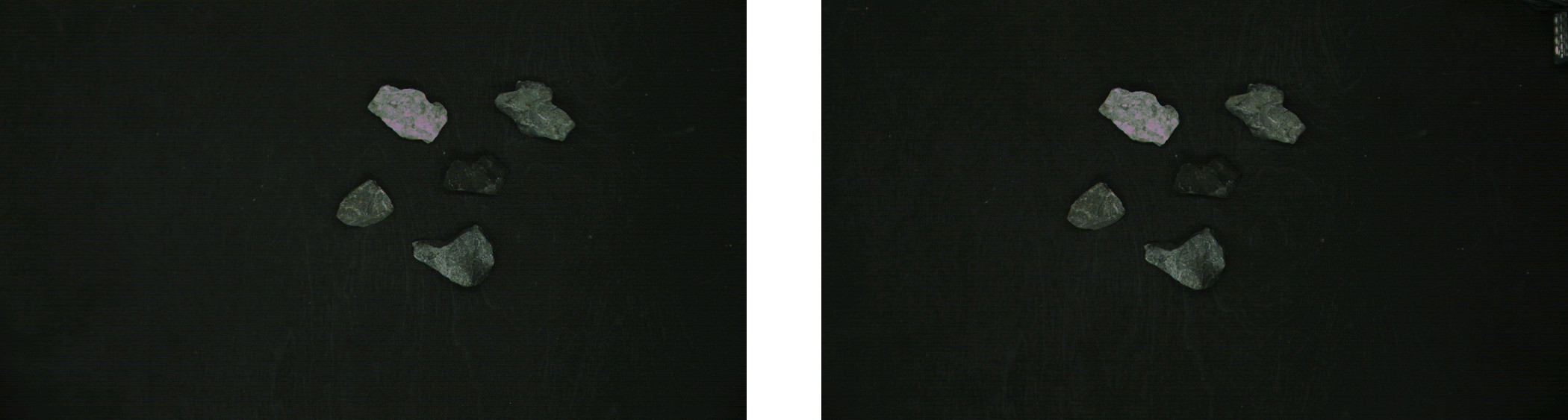

Stereo input from two cameras:

Rectified and overlaid images:

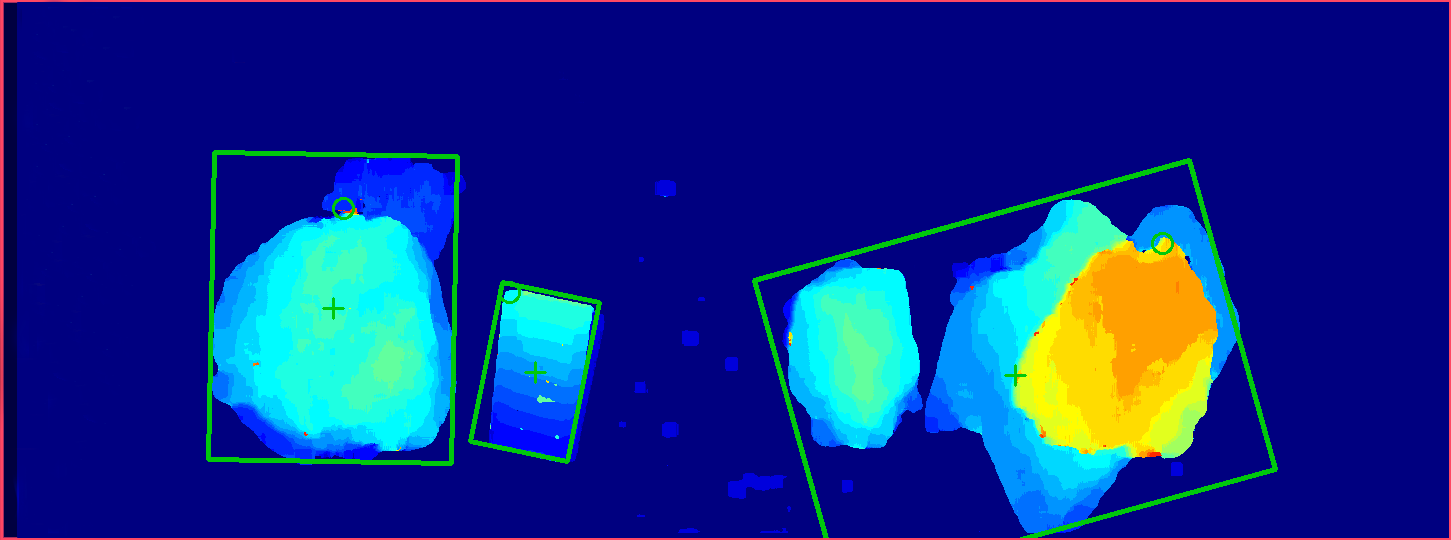

Disparity:

A dedicated algorithm then determines the relative height of objects by comparing the obtained depth map against a pre-captured 3D plane that represents the belt’s surface. Object size and position are estimated by calculating a reprojection of the image through the optics of the cameras.
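The geometry behind these two steps can be sketched in a few lines of Python. This is an illustrative approximation rather than the scanner's actual algorithm: it assumes the belt plane is stored as coefficients of `a*x + b*y + c*z + d = 0` in the camera frame and that a simple pinhole model is good enough for reprojection. The function names and all numeric values are hypothetical.

```python
import math
from typing import Tuple

Plane = Tuple[float, float, float, float]  # (a, b, c, d) in a*x + b*y + c*z + d = 0
Point = Tuple[float, float, float]         # (x, y, z) in metres, camera frame


def height_above_plane(point: Point, plane: Plane) -> float:
    """Signed distance from a 3D point to the belt plane, in metres.

    The sign is positive on the side the plane normal (a, b, c) points to.
    """
    a, b, c, d = plane
    x, y, z = point
    norm = math.sqrt(a * a + b * b + c * c)
    if norm == 0.0:
        raise ValueError("plane normal must be non-zero")
    return (a * x + b * y + c * z + d) / norm


def pixel_extent_to_metres(extent_px: float, depth_m: float,
                           focal_px: float) -> float:
    """Reproject an image-space extent to metric size with the pinhole model:
    size = extent in pixels * depth / focal length in pixels."""
    return extent_px * depth_m / focal_px


# Hypothetical setup: belt 2.0 m below the camera, normal pointing at the camera.
belt_plane = (0.0, 0.0, -1.0, 2.0)
top_of_rock = (0.1, 0.0, 1.8)  # a depth-map point 1.8 m from the camera
rock_height_m = height_above_plane(top_of_rock, belt_plane)
rock_width_m = pixel_extent_to_metres(extent_px=100, depth_m=1.8, focal_px=1000.0)
```

With these made-up numbers the rock stands about 0.2 m above the belt and a 100-pixel extent at 1.8 m corresponds to roughly 0.18 m.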

The scanner provides a live stream of the 3D depth maps as well as the position and size of objects tracked on the conveyor belt. The output data is provided as an XML-encoded TCP/IP stream to other components of the industrial system, such as X-MINE’s data fusion computer, which combines the 3D data with X-ray images and other information from the rock sorting unit. Besides the position and dimensions of the samples, the XML output stream also carries the parameters of the camera (such as focus, exposure and gain), the disparity algorithm parameters (which determine the accuracy of the depth map calculations) and the detection parameters. This information helps with analytics and with adjusting the settings to achieve the best tracking performance.
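A consumer of such a stream could decode each message with a standard XML parser. The sketch below uses Python's `xml.etree.ElementTree`; note that the element and attribute names (`frame`, `camera`, `object`, `focus` and so on) are invented for this example, as the real schema and the way messages are delimited on the TCP connection are not described here.

```python
import xml.etree.ElementTree as ET
from typing import Any, Dict

# Hypothetical message layout; the scanner's actual XML schema may differ.
SAMPLE_MESSAGE = """
<frame id="42">
  <camera focus="1.5" exposure="200" gain="2.0"/>
  <object id="7" x="0.12" y="0.80" width="0.05" length="0.07" height="0.03"/>
  <object id="8" x="0.31" y="0.42" width="0.09" length="0.11" height="0.06"/>
</frame>
"""

OBJECT_FIELDS = ("x", "y", "width", "length", "height")


def parse_frame(xml_text: str) -> Dict[str, Any]:
    """Turn one XML message into plain Python dictionaries."""
    root = ET.fromstring(xml_text.strip())
    camera_node = root.find("camera")
    # Camera parameters are optional in this sketch.
    camera = ({k: float(v) for k, v in camera_node.attrib.items()}
              if camera_node is not None else {})
    objects = []
    for node in root.findall("object"):
        obj: Dict[str, Any] = {"id": int(node.get("id"))}
        for field in OBJECT_FIELDS:
            obj[field] = float(node.get(field))
        objects.append(obj)
    return {"frame_id": int(root.get("id")), "camera": camera, "objects": objects}


frame = parse_frame(SAMPLE_MESSAGE)
tallest = max(frame["objects"], key=lambda o: o["height"])
```

Keeping the parsed result as plain dictionaries makes it straightforward to hand the data over to whatever fusion or logging component sits downstream.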

Our 3D scanner can capture stereo images on demand, which is an important feature for operation in an environment with multiple devices. Synchronous operation with external system components is ensured by the shutter control triggering stereovision image capture.

Fast, autonomous and ready to integrate

Antmicro’s 3D scanner generates 20 independent depth maps per second, which makes it capable of visualizing a conveyor belt moving at speeds of up to 3 meters per second, while the rugged design and industrial-grade casing allow 24/7 operation in demanding conditions. Most importantly, however, it can serve as a stand-alone platform capable of autonomous operation, or provide high-class machine vision for larger, already existing industrial systems through data fusion.
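As a quick back-of-the-envelope check of what these figures mean in practice, the snippet below computes how far the belt moves between two consecutive depth maps. The function name is made up for this example; the 20 depth maps per second and 3 meters per second are the figures quoted above.

```python
def belt_travel_per_depth_map(belt_speed_m_s: float,
                              depth_maps_per_s: float) -> float:
    """Distance the belt covers between two consecutive depth maps, in metres."""
    if depth_maps_per_s <= 0:
        raise ValueError("depth map rate must be positive")
    return belt_speed_m_s / depth_maps_per_s


# At the maximum quoted belt speed and the quoted depth map rate.
travel_m = belt_travel_per_depth_map(belt_speed_m_s=3.0, depth_maps_per_s=20.0)
```

At full speed the belt advances about 0.15 m between depth maps, so any object that stays in the field of view for longer than that distance is observed in more than one depth map, which is what makes frame-to-frame tracking possible.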

Hence, the stereovision platform is frequently used in our customer projects as a great starting point for more complex or case-specific designs. Thanks to Antmicro’s comprehensive expertise in FPGA SoC, software, AI and hardware, we create smart computer vision systems that take advantage of our early access to edge AI platforms and leverage Antmicro’s established position as a preferred design service provider for high-end edge computing devices. Reach out to us at contact@antmicro.com to find out how we can help you with your next innovative device.