Introducing Kenning, TVM and micro-ROS to the meta-antmicro Yocto layer


Topics: Edge AI, Open source tools

When building edge AI solutions for its customers, Antmicro usually creates dedicated operating system images, tailored for a customer’s particular product to ensure better security and control. Since such systems are often deployed on large fleets of devices, and in many cases updated Over-The-Air (OTA), it is crucial to have the following aspects in mind:

- Reproducibility - building from the same versions of the system's repositories always yields the same system, which keeps the development and deployment processes sane and makes issues easier to debug and profile.

- Performance and quality testing - when deploying AI applications on the edge, we face limitations such as scarce memory or low compute power. To work within them, models need to be optimized, and these optimizations affect both their performance and their quality. It is crucial to test the optimized models to make sure they actually run as fast as expected and do not stray too far from the original model in terms of quality.

- Automation - system deployment and OTA updates should be driven by automated Continuous Integration pipelines to reduce human error and make it possible to scale to large device fleets.
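One practical way to check the reproducibility property is to build the same revisions twice (for example on two independent CI runners) and compare checksums of the outputs. The following is a minimal sketch of such a comparison. It is not part of meta-antmicro or Yocto's own tooling. It hashes every file under two output directories and reports the relative paths whose SHA-256 digests differ or that exist in only one of them:

```python
import hashlib
from pathlib import Path
from typing import Dict, List


def digest_tree(root: Path) -> Dict[str, str]:
    """Map each file's path (relative to root) to its SHA-256 hex digest."""
    digests = {}
    for path in sorted(root.rglob("*")):
        if path.is_file():
            h = hashlib.sha256()
            with path.open("rb") as f:
                # Read in 1 MiB chunks so large image files do not fill memory.
                for chunk in iter(lambda: f.read(1 << 20), b""):
                    h.update(chunk)
            digests[path.relative_to(root).as_posix()] = h.hexdigest()
    return digests


def compare_builds(first: Path, second: Path) -> List[str]:
    """Return relative paths that differ between two builds (empty list = identical)."""
    a, b = digest_tree(first), digest_tree(second)
    return sorted(p for p in a.keys() | b.keys() if a.get(p) != b.get(p))
```

Package output directories are good candidates for such a comparison. Image files in the deploy directory typically carry build timestamps in their names, so comparing those directories directly would flag them even for identical contents.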

To fulfill the above requirements, we use the Yocto build system, which treats system files, system configuration and application build scripts as code. With Yocto, it is easy to implement CI pipelines that build the whole operating system from scratch and deploy the system image in the cloud.

As mentioned in a previous blog note, we released our own Yocto layer called meta-antmicro, which contains configuration files for building reproducible embedded Linux system images (often called board support packages, or BSPs), along with various recipes for software and libraries, including machine learning frameworks and runtimes that we commonly use in our AI-based solutions.

Since that blog note, we have made several major updates to the meta-antmicro layer, introducing new libraries for edge AI and robotic solutions, which we present here together with an example use case.

What is meta-antmicro?

Yocto-based systems have a treelike structure composed of elements called recipes, which describe how individual software components are fetched, configured, built and packaged. Recipes are grouped into layers, whose primary purpose is to add new recipes, enhance existing ones, provide target-specific settings and definitions, or group additional sublayers. This hierarchical way of defining Yocto build flows makes it scalable, modular and reusable for any hardware platform.
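BitBake derives a build order from the dependencies that recipes declare (for instance through the DEPENDS variable). The sketch below illustrates only that idea, using Python's standard `graphlib` module on a hypothetical, heavily simplified dependency map. The recipe names and edges are illustrative and do not reflect the actual contents of meta-antmicro. BitBake itself schedules individual tasks (do_fetch, do_compile, ...) in parallel rather than whole recipes:

```python
from graphlib import TopologicalSorter

# Hypothetical, simplified map: recipe -> set of recipes it depends on.
RECIPES = {
    "glibc": set(),
    "python3": {"glibc"},
    "python3-numpy": {"python3"},
    "llvm": {"glibc"},
    "tvm": {"llvm", "python3-numpy"},
    "kenning": {"tvm", "python3-numpy"},
    "kenning-tvm-image": {"kenning"},
}


def build_order(recipes):
    """Return one valid order in which every recipe comes after its dependencies.

    Raises graphlib.CycleError if the dependencies form a cycle.
    """
    return list(TopologicalSorter(recipes).static_order())


if __name__ == "__main__":
    print(build_order(RECIPES))
```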

The meta-antmicro Yocto layer started as a collection of edge AI applications developed for demonstration purposes, but now serves as a base layer for the software, utilities, libraries and system configurations Antmicro commonly uses in its commercial projects, spanning many hardware platforms and applications.

The meta-antmicro layer provides:

- recipes for system utilities, such as pyvidctrl for camera manipulation,

- recipes for libraries and tools used for machine learning purposes (especially deep learning), like darknet,

- enhancements of various recipes for NVIDIA Jetson platforms,

- configuration files for building BSPs, such as a Darknet object detection demo with an ImGui-based interface, which can be deployed on Jetson platforms.

meta-antmicro
├── meta-antmicro-common
│   ├── classes
│   ├── conf
│   ├── README.md
│   ├── recipes-devtools
│   ├── recipes-python
│   ├── recipes-support
│   └── recipes-xfce
├── meta-jetson
│   ├── classes
│   ├── conf
│   ├── README.md
│   ├── recipes-bsp
│   └── recipes-software
├── meta-jetson-alvium
│   ├── conf
│   ├── README.md
│   └── recipes-bsp
├── meta-microros
│   ├── conf
│   ├── README.md
│   ├── recipes-agent
│   └── recipes-support
├── meta-ml
│   ├── conf
│   ├── README.md
│   ├── recipes-core
│   └── recipes-python
├── meta-ml-tegra
│   ├── conf
│   ├── README.md
│   ├── recipes-core
│   ├── recipes-libraries
│   ├── recipes-python
│   └── recipes-software
├── meta-rdfm
│   ├── classes
│   ├── conf
│   ├── README.md
│   └── recipes-rdfm
└── meta-rdfm-tegra
    ├── classes
    ├── conf
    ├── README.md
    ├── recipes-bsp
    └── recipes-rdfm

While developing such systems, we create and enhance recipes for various open source libraries, tools and software, many of which are available in the Antmicro Open Source Portal.

Support for Yocto’s Kirkstone release

In April 2022, the Yocto Project released version 4.0, codenamed Kirkstone. This Long Term Support release is a sensible base for products that will be deployed in the field and operate over a long period of time.

This release upgrades many software components to their latest versions, including glibc 2.35, Linux kernel 5.15 and Python 3.10, along with roughly 300 other recipe upgrades. But there is more: it also fixes reproducibility issues in golang and rust-llvm, thanks to which the recipes in OpenEmbedded-Core are now fully reproducible.

In addition to security and stability improvements, the release brings updates to BitBake, the Yocto Project's build tool, including changes to the recipe syntax and variable naming conventions that make recipes more intuitive.

meta-antmicro has been updated to Kirkstone as well. This allowed us to upgrade some of the recipes to newer versions, adopt the new, more intuitive syntax and keep the recipes supported for the foreseeable future.
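For layers coming from a release older than Honister (3.4), the most visible syntax change in Kirkstone is the colon-based override syntax: `RDEPENDS_${PN}` becomes `RDEPENDS:${PN}`, and `_append` becomes `:append`. Kirkstone also renamed several variables to more descriptive names, for example `LICENSE_FLAGS_WHITELIST` became `LICENSE_FLAGS_ACCEPTED`. Poky ships a thorough converter in scripts/contrib/convert-overrides.py. The sketch below is only a naive, regex-based illustration of the kind of rewrite involved, and it covers a handful of common patterns:

```python
import re

# A few of the variable renames from the Kirkstone (4.0) migration notes.
RENAMES = {
    "BB_ENV_WHITELIST": "BB_ENV_PASSTHROUGH",
    "BB_ENV_EXTRAWHITE": "BB_ENV_PASSTHROUGH_ADDITIONS",
    "LICENSE_FLAGS_WHITELIST": "LICENSE_FLAGS_ACCEPTED",
}

# VAR_${PN} / VAR_${PN}-dev  ->  VAR:${PN} / VAR:${PN}-dev
_PACKAGE_OVERRIDE = re.compile(r"\b([A-Z][A-Z0-9_]*?)_(\$\{PN\}(?:-[a-z0-9-]+)?)")
# VAR_append, do_install_append, VAR:${PN}_append  ->  ...:append (also prepend/remove)
_OPERATOR = re.compile(r"([A-Za-z0-9}])_(append|prepend|remove)\b")


def convert_line(line: str) -> str:
    """Naively rewrite one line of a pre-Honister recipe to the new syntax."""
    for old, new in RENAMES.items():
        line = re.sub(rf"\b{old}\b", new, line)
    line = _PACKAGE_OVERRIDE.sub(r"\1:\2", line)
    return _OPERATOR.sub(r"\1:\2", line)


if __name__ == "__main__":
    print(convert_line('RDEPENDS_${PN}_append = " bash"'))
```

This sketch gets some inputs wrong. It does not convert combined overrides such as `SRC_URI_append_class-target`, and it would also rewrite values that happen to contain `_append`. For real layers, a dedicated tool followed by a manual review is preferable.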

Introduction of Kenning recipe to meta-antmicro

To support a large variety of edge AI platforms, we also added a recipe for Kenning to the meta-antmicro layer, making it possible to deploy Kenning on different platforms in a reproducible way.

Kenning is Antmicro's framework for creating deployment flows and runtimes for deep neural network applications on various target hardware. It provides modular execution blocks for:

- dataset management,

- model training,

- model compilation,

- model evaluation and performance reports,

- model runtime on target device,

- model input and output processing (e.g. fetching frames from a camera and computing final predictions from model outputs).

These blocks can be used seamlessly regardless of the underlying frameworks that perform the above-mentioned steps. More information on Kenning's fundamentals can be found in our blog note on deploying deep learning models on edge with Kenning.

With Kenning available in meta-antmicro, it is possible to reliably test various models on multiple platforms and benchmark them to determine the most suitable solution for a particular use case. Kenning also makes it easy to swap runtime blocks depending on the target hardware.

In addition to benchmarking models and swapping runtime blocks, we can also deploy simple applications using the Kenning Runtime API.
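The following minimal Python sketch illustrates interchangeable runtime blocks behind a common interface, evaluated by a single runtime-agnostic benchmark. The class and function names (`Runtime`, `PythonRuntime`, `QuantizedRuntime`, `evaluate`) are hypothetical and are not Kenning's actual API. The "quantized" runtime only rounds weights to a coarse grid, to mimic an optimized backend whose outputs may drift from the reference model:

```python
import time
from abc import ABC, abstractmethod
from typing import Dict, List, Sequence

Vector = Sequence[float]


class Runtime(ABC):
    """Hypothetical runtime block: turns one input vector into class scores."""

    @abstractmethod
    def run(self, inputs: Vector) -> List[float]:
        ...


class PythonRuntime(Runtime):
    """Reference runtime: a single linear layer computed in plain Python."""

    def __init__(self, weights: List[List[float]]):
        self.weights = weights

    def run(self, inputs: Vector) -> List[float]:
        return [sum(w * x for w, x in zip(row, inputs)) for row in self.weights]


class QuantizedRuntime(Runtime):
    """Stand-in for an optimized backend: weights stored as integer multiples of `scale`."""

    def __init__(self, weights: List[List[float]], scale: float = 0.1):
        self.scale = scale
        self.qweights = [[round(w / scale) for w in row] for row in weights]

    def run(self, inputs: Vector) -> List[float]:
        return [self.scale * sum(q * x for q, x in zip(row, inputs)) for row in self.qweights]


def evaluate(runtime: Runtime, samples: List[Vector], labels: List[int]) -> Dict[str, float]:
    """Runtime-agnostic benchmark: top-1 accuracy and mean latency per sample."""
    correct = 0
    start = time.perf_counter()
    for sample, label in zip(samples, labels):
        scores = runtime.run(sample)
        predicted = max(range(len(scores)), key=scores.__getitem__)
        correct += int(predicted == label)
    elapsed = time.perf_counter() - start
    return {"accuracy": correct / len(samples), "mean_latency_s": elapsed / len(samples)}


if __name__ == "__main__":
    weights = [[1.0, -0.5], [-0.3, 0.8]]
    samples = [[1.0, 0.0], [0.0, 1.0], [0.6, 0.4]]
    labels = [0, 1, 0]
    # The same evaluation code works for any Runtime implementation.
    for rt in (PythonRuntime(weights), QuantizedRuntime(weights)):
        print(type(rt).__name__, evaluate(rt, samples, labels))
```

Because `evaluate` depends only on the abstract interface, adding a new backend means adding one class, and the benchmark code does not change.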

Introduction of new machine learning frameworks to meta-antmicro

Along with the update, we also added a recipe for Apache TVM, a deep learning compiler supported by Kenning, which can create optimized model runtimes for a large number of deployment environments:

- CPUs supported by LLVM

- CUDA targets, with support for the cuBLAS, cuDNN and TensorRT libraries

- Hexagon accelerator

- Apache VTA accelerator

- RISC-V CPUs

- OpenCL-enabled targets

- etc…
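In TVM, the deployment environment is selected with a target description string. The sketch below composes a few such strings for environments from the list above. The specific flags are illustrative assumptions, for example `sm_72` for the Jetson AGX Xavier GPU and the RISC-V `-mattr` extension set. Check them against the TVM version in use and the actual device. TensorRT and accelerators such as Hexagon or VTA are not covered here:

```python
from typing import Iterable, Optional

# Example TVM target strings for CPU hosts ("<kind> -<attr>=<value>" syntax).
CPU_TARGETS = {
    "x86_64": "llvm",
    "aarch64": "llvm -mtriple=aarch64-linux-gnu",
    "riscv64": "llvm -mtriple=riscv64-unknown-linux-gnu -mattr=+m,+a,+f,+d,+c",
}


def cuda_target(arch: Optional[str] = None, libs: Iterable[str] = ()) -> str:
    """Compose a CUDA target string, optionally offloading ops to vendor libraries."""
    parts = ["cuda"]
    if arch:
        parts.append(f"-arch={arch}")  # e.g. sm_72 for Jetson AGX Xavier (Volta)
    libs = list(libs)
    if libs:
        parts.append("-libs=" + ",".join(libs))  # e.g. cudnn, cublas
    return " ".join(parts)


# With TVM installed, the strings could then be passed on, e.g.:
#   import tvm
#   target = tvm.target.Target(cuda_target("sm_72"), host=CPU_TARGETS["aarch64"])
```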

meta-antmicro includes a default Apache TVM recipe in the meta-ml sublayer, which builds the library with a CPU runtime, as well as a CUDA-enabled variant in the meta-ml-tegra sublayer.

We have also added a recipe for the ONNX Python library. Most existing machine learning frameworks can export their models to the Open Neural Network Exchange format (.onnx), so having ONNX in the Yocto system lets us avoid adding heavy frameworks meant for training to embedded systems, and instead run ONNX models with lean, target-optimized runtimes.

Introduction of micro-ROS to meta-antmicro

ROS 2 (Robot Operating System 2) is a framework for implementing large modular applications in the form of intercommunicating nodes. Each node acts as a separate program that can be implemented in C++ or Python. Nodes can communicate in a one-to-one (service-client) or one-to-many (publisher-subscriber) manner, and can be distributed across multiple devices.
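The following plain-Python analogy contrasts the two communication patterns inside a single process. It is a toy for illustration and not the ROS 2 API. In rclpy, the corresponding calls are `Node.create_publisher`, `create_subscription`, `create_service` and `create_client`, with messages carried over DDS between processes or devices. The `ToyGraph` class and its methods are hypothetical:

```python
from collections import defaultdict
from typing import Any, Callable, DefaultDict, Dict, List


class ToyGraph:
    """In-process stand-in for a ROS 2 graph; NOT the rclpy API."""

    def __init__(self) -> None:
        self._subscribers: DefaultDict[str, List[Callable[[Any], None]]] = defaultdict(list)
        self._services: Dict[str, Callable[[Any], Any]] = {}

    def subscribe(self, topic: str, callback: Callable[[Any], None]) -> None:
        self._subscribers[topic].append(callback)

    def publish(self, topic: str, message: Any) -> None:
        # One-to-many: every subscriber of the topic receives the message.
        for callback in self._subscribers[topic]:
            callback(message)

    def serve(self, name: str, handler: Callable[[Any], Any]) -> None:
        # A service has exactly one server.
        if name in self._services:
            raise ValueError(f"service {name!r} already has a server")
        self._services[name] = handler

    def call(self, name: str, request: Any) -> Any:
        # One-to-one: a single server handles the request and returns a response.
        return self._services[name](request)


if __name__ == "__main__":
    graph = ToyGraph()
    graph.subscribe("/detections", lambda m: print("logger got", m))
    graph.subscribe("/detections", lambda m: print("tracker got", m))
    graph.publish("/detections", "person")
    graph.serve("/add_two_ints", lambda req: req[0] + req[1])
    print(graph.call("/add_two_ints", (2, 3)))
```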

In a previous blog note, we mentioned the additions to the meta-antmicro layer supporting micro-ROS. micro-ROS is a set of libraries and software that lets us implement and run a node on a microcontroller, which then communicates with the rest of the ROS 2 system over UART or another interface. These additions include recipes for:

- micro-ROS-Agent,

- Micro-XRCE-DDS-Agent,

- micro_ros_msgs,

- their dependencies.

While ROS 2 and its dependencies are provided by the meta-ros layer, micro-ROS support can be added with the meta-microros sublayer of meta-antmicro. This allows us to create even more sophisticated edge AI solutions, which can also communicate with microcontrollers collecting data from sensors or performing other kinds of simple processing.
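On the Linux side, the microcontroller's node reaches the ROS 2 system through the micro-ROS Agent. The sketch below only assembles the command line for starting it, for either a serial (UART) link or UDP. It assumes that the ROS 2 environment is sourced on the target and that the agent built from the meta-microros recipes is available as the usual `micro_ros_agent` executable. The device path and default port are example values, and `agent_command` is a hypothetical helper:

```python
import shlex
from typing import List, Optional


def agent_command(transport: str, device: Optional[str] = None,
                  port: Optional[int] = None) -> List[str]:
    """Build the argv for starting the micro-ROS Agent through `ros2 run`.

    transport: "serial" (e.g. UART to a microcontroller) or "udp4".
    """
    cmd = ["ros2", "run", "micro_ros_agent", "micro_ros_agent", transport]
    if transport == "serial":
        if not device:
            raise ValueError("serial transport needs a device, e.g. /dev/ttyUSB0")
        cmd += ["--dev", device]
    elif transport == "udp4":
        cmd += ["--port", str(port if port is not None else 8888)]  # example port
    else:
        raise ValueError(f"unsupported transport: {transport}")
    return cmd


if __name__ == "__main__":
    print(shlex.join(agent_command("serial", device="/dev/ttyUSB0")))
    # To actually start the agent: subprocess.run(agent_command(...), check=True)
```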

For a Renode-based demonstration of ROS 2 and micro-ROS, please refer to the dedicated blog note.

Other additions to meta-antmicro

Apart from the above-mentioned major frameworks, we have also added their various dependencies including libraries such as:

- scipy,

- scikit-learn,

- threadpoolctl,

- beniget,

- joblib.

We have also introduced a sublayer called meta-jetson-alvium, which adds support for Allied Vision Alvium cameras on Jetson modules. It further builds upon Antmicro's portfolio of open source Alvium projects, which already includes Jetson drivers and a flexible CSI adapter.

For more information on the newly introduced sublayers, their features and dependencies, check the README of the meta-antmicro layer, as well as the READMEs of the individual sublayers.

Using Kenning and meta-antmicro for edge AI inference benchmarking - example

The Kenning recipe in the meta-antmicro layer makes it possible to perform inference benchmarking using the edge AI scripts available in Kenning's repository. To illustrate the usefulness of the framework, this section walks you through the process on a Jetson AGX Xavier Developer Kit. We will soon follow up with examples for the new and exciting Jetson AGX Orin as well, so stay tuned!

Running inference testing scripts with Kenning requires the following dependencies to be installed:

As mentioned in a previous blog note, the system-releases directory of the meta-antmicro layer contains the manifest files needed to set up the entire project. You can fetch all the necessary code with:

repo init -u https://github.com/antmicro/meta-antmicro.git -m system-releases/darknet-edgeai-demo/manifest.xml

repo sync -j`nproc`
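Reproducibility here relies on the manifest pinning each repository to a fixed revision. The following small sketch lists the projects in a repo manifest whose effective revision is not a full commit hash (for example, a branch name). It uses the `<default>` element's revision as the fallback when a project has none. The helper name and the sample manifest used to exercise it are made up for illustration:

```python
import re
import xml.etree.ElementTree as ET
from typing import List

SHA1 = re.compile(r"[0-9a-f]{40}")


def unpinned_projects(manifest_xml: str) -> List[str]:
    """List projects (by path, else name) whose revision is not a full commit hash."""
    root = ET.fromstring(manifest_xml)
    default = root.find("default")
    default_rev = default.get("revision") if default is not None else None
    unpinned = []
    for project in root.iter("project"):
        revision = project.get("revision", default_rev)
        if revision is None or not SHA1.fullmatch(revision):
            unpinned.append(project.get("path", project.get("name")))
    return unpinned
```

Pass it the text of a manifest file. An empty result means every project is pinned to an exact commit.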

To start building the BSP, run the following commands:

source sources/poky/oe-init-build-env

PARALLEL_MAKE="-j $(nproc)" BB_NUMBER_THREADS="$(nproc)" MACHINE="jetson-agx-xavier-devkit" bitbake kenning-tvm-image
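BitBake only imports environment variables that are explicitly allowed through. In Kirkstone, oe-init-build-env adds variables such as MACHINE, BB_NUMBER_THREADS and PARALLEL_MAKE to BB_ENV_PASSTHROUGH_ADDITIONS, which is why the command above works. The same settings can instead be made persistent in the build directory's conf/local.conf. A minimal sketch that appends them, assuming it is pointed at the build directory created by oe-init-build-env (both helper names are hypothetical):

```python
import os
from pathlib import Path


def local_conf_lines(machine: str, jobs: int) -> str:
    """Render local.conf assignments equivalent to the environment variables."""
    return (
        f'MACHINE = "{machine}"\n'
        f'BB_NUMBER_THREADS = "{jobs}"\n'
        f'PARALLEL_MAKE = "-j {jobs}"\n'
    )


def append_to_local_conf(build_dir: Path, machine: str) -> None:
    """Append the settings to build_dir/conf/local.conf, using all CPU cores."""
    jobs = os.cpu_count() or 1
    conf = build_dir / "conf" / "local.conf"
    with conf.open("a") as f:
        f.write("\n# Added by a helper script\n" + local_conf_lines(machine, jobs))
```

After calling `append_to_local_conf(Path("."), "jetson-agx-xavier-devkit")` from the build directory, `bitbake kenning-tvm-image` can be run without the extra variables. A plain `=` assignment appended at the end takes precedence over a `MACHINE ??= ...` default earlier in the file.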

When the build completes, the resulting image is stored in the build/tmp/deploy/images/jetson-agx-xavier-devkit directory. Unpack the tegraflash package, put the board into recovery mode, and flash it by running the following command from the unpacked directory:

sudo ./doflash.sh
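The unpacking step can also be scripted. The following minimal sketch assumes that the tegraflash package is a gzip-compressed tar archive named *.tegraflash.tar.gz in the deploy directory. The actual name and format depend on the meta-tegra version and configuration; the package may be a .zip file, for example. The sketch extracts the most recent archive and checks that doflash.sh is present (the helper name is hypothetical):

```python
import tarfile
from pathlib import Path


def unpack_tegraflash(deploy_dir: Path, dest: Path) -> Path:
    """Extract the newest *.tegraflash.tar.gz from deploy_dir into dest."""
    archives = sorted(deploy_dir.glob("*.tegraflash.tar.gz"), key=lambda p: p.stat().st_mtime)
    if not archives:
        raise FileNotFoundError(f"no tegraflash archive in {deploy_dir}")
    dest.mkdir(parents=True, exist_ok=True)
    with tarfile.open(archives[-1], "r:gz") as tar:
        # Use the safer 'data' extraction filter where this Python version supports it.
        if hasattr(tarfile, "data_filter"):
            tar.extractall(dest, filter="data")
        else:
            tar.extractall(dest)
    if not (dest / "doflash.sh").exists():
        raise FileNotFoundError("doflash.sh not found in the archive root")
    return dest
```

The flashing command can then be run from the returned directory, e.g. with `subprocess.run(["sudo", "./doflash.sh"], cwd=dest, check=True)` while the board is in recovery mode.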

After flashing and booting the board, connect it to the host device over Ethernet and run the following script on the target to start the inference server:

#!/bin/bash
python3 -m kenning.scenarios.inference_server \
    kenning.runtimeprotocols.network.NetworkProtocol \
    kenning.runtimes.tvm.TVMRuntime \
    --host 0.0.0.0 \
    --port 12345 \
    --packet-size 32768 \
    --save-model-path /home/root/Desktop/compiled-model.tar \
    --target-device-context cuda \
    --input-dtype float32 \
    --verbosity INFO

On the host device, clone Kenning's repository and check out the recommended revision:

git clone https://github.com/antmicro/kenning.git

cd kenning

git checkout 1195c75547dfe8f4df48437ea6029e8ef9f22dfc

Make sure the target device is reachable over Ethernet, then run one of the edge AI scripts available in Kenning's repository, passing the target's IP address. Don't forget to add the --download-dataset flag to the script for the first run:

./scripts/edge-runtimes/jetson-agx-xavier-tvm-pytorch/client.sh <ip-addr-for-target>

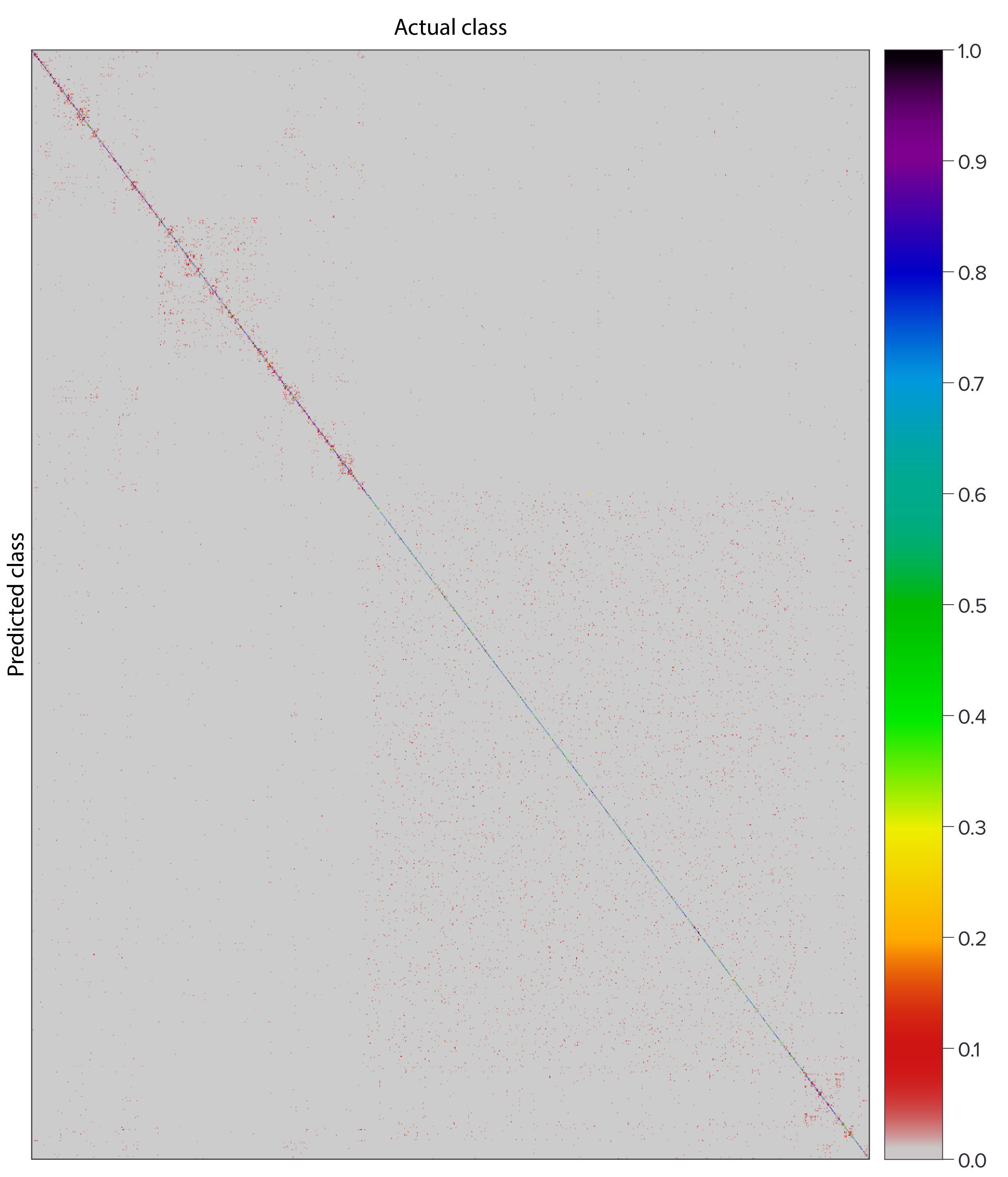

Once inference testing is finished, the generated JSON file with performance and quality measurements can be found under build/jetson-agx-xavier-tvm-pytorch.json. You can use this file to generate a report of the inference run with:

python3 -m kenning.scenarios.render_report \
    build/jetson-agx-xavier-tvm-pytorch.json \
    "Jetson AGX Xavier classification using TVM-compiled Pytorch model" \
    docs/source/generated/jetson-agx-xavier-cpu-tvm-pytorch-classification.rst \
    --img-dir docs/source/generated/img/ \
    --root-dir docs/source/ \
    --report-types \
        performance \
        classification

The generated report will be located at docs/source/generated/jetson-agx-xavier-cpu-tvm-pytorch-classification.rst and can later be rendered into HTML, e.g. to include it in your project's documentation.
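Besides rendering the report, the measurements can be inspected directly. The structure of Kenning's JSON output is not described here, so the sketch below makes no assumptions about key names. It walks the file and computes basic statistics for every flat list of numbers it finds. Nested lists, such as matrices, and lists of booleans are skipped. The helper names are hypothetical:

```python
import json
import statistics
from pathlib import Path
from typing import Any, Dict, Iterator, List, Tuple


def numeric_series(data: Any, prefix: str = "") -> Iterator[Tuple[str, List[float]]]:
    """Yield (dotted.key, values) for every flat list of numbers nested in dicts."""
    if isinstance(data, dict):
        for key, value in data.items():
            yield from numeric_series(value, f"{prefix}{key}.")
    elif isinstance(data, list) and data and all(
        isinstance(v, (int, float)) and not isinstance(v, bool) for v in data
    ):
        yield prefix.rstrip("."), data


def summarize(path: Path) -> Dict[str, Dict[str, float]]:
    """Count, mean, min and max for each numeric series in a JSON measurements file."""
    with path.open() as f:
        data = json.load(f)
    return {
        key: {"count": len(vals), "mean": statistics.fmean(vals),
              "min": min(vals), "max": max(vals)}
        for key, vals in numeric_series(data)
    }


if __name__ == "__main__":
    for key, stats in summarize(Path("build/jetson-agx-xavier-tvm-pytorch.json")).items():
        print(key, stats)
```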

Building reproducible edge AI solutions

The recent changes in the meta-antmicro layer, along with its update to the Kirkstone LTS Yocto release, allow Antmicro to use a wider range of tools for creating reproducible and comprehensive edge AI solutions. The support for micro-ROS extends the capabilities of the meta-antmicro layer, allowing the Yocto-based system to easily interface with microcontrollers in ROS 2-based applications.

The Kenning recipe, along with the other recipes for ML frameworks and libraries in meta-antmicro, enables consistent benchmarking of ML models optimized with various techniques on a wide range of target platforms, to find the best-fitting combination for a given use case.

If you are looking to build your own reproducible Yocto-based edge AI system with quality benchmarking and CI support, reach out to us at contact@antmicro.com and find out how Antmicro can help.