With a rapid increase in interest in adding edge Machine Learning capabilities to consumer-facing as well as industrial devices, brought about by rapid advances in deep learning (and now also LLMs) and by the increasing compute capabilities of embedded SoCs, efficiency in designing, prototyping and testing such solutions has become more important than ever. Leading the charge, Antmicro has been developing Kenning, an open source tool that seamlessly interconnects different DNN optimization and deployment frameworks and provides developers with robust reports on model performance and quality.

When developing open source frameworks like Kenning, Antmicro always looks at the bigger picture through integration with other tools. Kenning already works in tandem with Pipeline Manager, which lets users visualize, edit, validate and run ML pipelines, and with Renode, Antmicro’s open source embedded system simulation framework, used for prototyping, CI testing and HW/SW co-development of entire ML pipelines.

In this note, we dive into the most recent updates to Kenning aimed at simplifying and optimizing the workflows in edge AI scenarios and, at the same time, lowering the overall barrier to entry to using Kenning on its own and along with other tools.

Kenning CLI and HTML reports

To significantly lower the barrier to entry for Kenning, we have implemented the kenning command, which grants access to all of Kenning’s features via the command line. The kenning command features autocompletion, which greatly simplifies its usage (autocompletion has to be configured first using kenning completion).

In addition to the previously available Markdown reports, HTML reports are now available as well, letting users quickly look up model comparisons and model performance metrics.

The kenning command can be used with the following subcommands when working with models:

- kenning flow - run Kenning runtimes

- kenning optimize - optimize a model

- kenning test - test/evaluate/benchmark a model

- kenning report - generate Markdown and HTML reports

- kenning fine-tune-optimizers - run a grid-based search for the best optimizations for a given model and device

- kenning train - train a given model

- kenning visual-editor - run Pipeline Manager

What is more, the kenning optimize, kenning test, and kenning report subcommands can be combined, e.g. into kenning test report, kenning optimize test, or kenning optimize test report.
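The note does not spell out exactly which chains are accepted. As a hypothetical sketch, assuming each of the three chainable subcommands may appear at most once and in the optimize, test, report order used in the examples, a chain could be checked like this (is_valid_chain and CHAINABLE are illustrative names, not part of Kenning):

```python
# Hypothetical sketch, not Kenning's argument parser. ASSUMPTION: the chainable
# subcommands appear at most once each, in the optimize -> test -> report order.
CHAINABLE = ("optimize", "test", "report")


def is_valid_chain(subcommands: list[str]) -> bool:
    """Return True if `subcommands` is a non-empty, ordered subset of CHAINABLE."""
    if not subcommands:
        return False
    positions = []
    for name in subcommands:
        if name not in CHAINABLE:
            return False
        positions.append(CHAINABLE.index(name))
    # Strictly increasing positions mean no repeats and the expected order
    return all(a < b for a, b in zip(positions, positions[1:]))


print(is_valid_chain(["optimize", "test", "report"]))  # True
print(is_valid_chain(["report", "test"]))  # False under the stated assumption
```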

Kenning optimizations and runtime blocks check

Kenning also features the following, more general commands, granting users easy access to information about their available resources and the Kenning framework itself:

- kenning list - list available (and runnable) models, runtimes, and optimizers in Kenning

- kenning completion - configure autocompletion for the tool

- kenning search - search for classes using fuzzy search

- kenning info - display details of a given Kenning-based class (optimizer, model, runtime, etc.):

  - Documentation

  - Parameters

  - Dependencies

  - Availability and necessary prerequisites of a block

- kenning cache - manage resources downloaded by Kenning
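To illustrate the idea behind kenning search, here is a minimal fuzzy-matching sketch built on Python's standard difflib module. Kenning's actual matching algorithm may differ, and the class names below are only an illustrative sample, not the output of kenning list:

```python
import difflib

# Illustrative sample of class names; not an authoritative list of Kenning blocks
CLASS_NAMES = [
    "TensorFlowPetDatasetMobileNetV2",
    "TFLiteCompiler",
    "TVMCompiler",
    "ONNXRuntime",
    "TensorFlowPruningOptimizer",
]


def fuzzy_search(query: str, names: list[str], limit: int = 3) -> list[str]:
    """Return up to `limit` names ranked by case-insensitive similarity to `query`."""
    # Map lowercase names back to their original spelling
    lowered = {name.lower(): name for name in names}
    matches = difflib.get_close_matches(query.lower(), list(lowered), n=limit, cutoff=0.3)
    return [lowered[match] for match in matches]


print(fuzzy_search("tflite", CLASS_NAMES))
```

The best match comes first; a query that resembles none of the names returns an empty list.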

Downloadable resources and custom URI schemes

With the latest updates to Kenning, it is also possible to use HTTPS, HTTP, custom URI schemes and more to download resources (models, compilers, utilities, datasets, etc.) necessary to run a particular scenario in Kenning.

This allows you to download a model directly from a given link, or import it from other remote sources, without the need to prepare it locally beforehand. What is more, scenarios involving multiple files like Renode scripts, compilers, binaries etc. can now be boiled down to a single JSON file and easily reproduced anywhere you need them.
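Because a scenario is plain JSON, the remote resources it depends on can be discovered by walking the parsed document. The helper below is a hypothetical sketch (not a Kenning API) that collects every string value starting with one of the URI schemes described in this note. The model_path entry is modeled on this section's JSON scenario example, while the hypothetical_platform block is invented for illustration:

```python
import json

# Schemes treated as resource references in this sketch (assumption based on the
# schemes listed in this note; user-defined schemes could be appended)
RESOURCE_SCHEMES = ("http://", "https://", "file://", "kenning://", "gh://")


def collect_resource_uris(node) -> list[str]:
    """Recursively collect string values that start with a known URI scheme."""
    found = []
    if isinstance(node, dict):
        for value in node.values():
            found.extend(collect_resource_uris(value))
    elif isinstance(node, list):
        for item in node:
            found.extend(collect_resource_uris(item))
    elif isinstance(node, str) and node.startswith(RESOURCE_SCHEMES):
        found.append(node)
    return found


scenario = json.loads("""
{
  "model_wrapper": {
    "type": "kenning.modelwrappers.classification.tensorflow_pet_dataset.TensorFlowPetDatasetMobileNetV2",
    "parameters": {
      "model_path": "kenning:///models/classification/tensorflow_pet_dataset_mobilenetv2.h5"
    }
  },
  "hypothetical_platform": {
    "repl_file": "gh://antmicro:kenning-bare-metal-iree-runtime/sim/config/platforms/springbok.repl;branch=main"
  }
}
""")

for uri in collect_resource_uris(scenario):
    print(uri)
```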

As an example, you can download a model using the ResourceManager.get_resource method, which takes the URI of the resource and an optional output path where the file should be saved:

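```python
import pathlib

from kenning.utils.resource_manager import ResourceManager

# Local path where the downloaded model will be saved
model_path = pathlib.Path('./model.h5')

# kenning:// resources are fetched from dl.antmicro.com
ResourceManager().get_resource('kenning:///models/classification/magic_wand.h5', model_path)
```

ResourceManager supports the non-standard kenning:// scheme, custom schemes defined by the user, and several default ones: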

- http://, https://

- file:// - provides the absolute path to the file in the filesystem

- kenning:// - a scheme for downloading Kenning-specific resources from https://dl.antmicro.com, where e.g. kenning:///models/classification/magic_wand.h5 resolves to https://dl.antmicro.com/kenning/models/classification/magic_wand.h5

- gh:// - used for downloading files from GitHub repositories, e.g. gh://antmicro:kenning-bare-metal-iree-runtime/sim/config/platforms/springbok.repl;branch=main resolves to https://raw.githubusercontent.com/antmicro/kenning-bare-metal-iree-runtime/main/sim/config/platforms/springbok.repl
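The scheme mappings above can be summarized in a short resolver. This is a minimal sketch that reproduces the documented examples only: resolve_uri is a hypothetical helper, not ResourceManager's implementation, and falling back to a main branch when a gh:// URI has no ;branch= option is an assumption:

```python
from pathlib import Path

KENNING_BASE_URL = "https://dl.antmicro.com/kenning"


def resolve_uri(uri: str) -> str:
    """Map a resource URI to the location it would be fetched from (sketch)."""
    if uri.startswith(("http://", "https://")):
        return uri  # already a downloadable URL
    if uri.startswith("file://"):
        # file:// refers to a local file; return its absolute path
        return str(Path(uri[len("file://"):]).absolute())
    if uri.startswith("kenning://"):
        # kenning:///models/... -> https://dl.antmicro.com/kenning/models/...
        return KENNING_BASE_URL + uri[len("kenning://"):]
    if uri.startswith("gh://"):
        # gh://owner:repo/path;branch=name -> raw.githubusercontent.com URL
        body, _, params = uri[len("gh://"):].partition(";")
        options = dict(p.split("=", 1) for p in params.split(";") if p)
        repo_spec, _, path = body.partition("/")
        owner, _, repo = repo_spec.partition(":")
        branch = options.get("branch", "main")  # assumed default branch
        return f"https://raw.githubusercontent.com/{owner}/{repo}/{branch}/{path}"
    raise ValueError(f"Unsupported URI scheme: {uri}")


print(resolve_uri("kenning:///models/classification/magic_wand.h5"))
# https://dl.antmicro.com/kenning/models/classification/magic_wand.h5
```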

Here is an example from a JSON scenario:

```json
{
  "model_wrapper":
  {
    "type": "kenning.modelwrappers.classification.tensorflow_pet_dataset.TensorFlowPetDatasetMobileNetV2",
    "parameters":
    {
      "model_path": "kenning:///models/classification/tensorflow_pet_dataset_mobilenetv2.h5"
    }
  },
  ...
}
```

Delegating optimization

The recent updates also let you delegate certain optimizations to the tested devices. This is useful for optimizations that require a more powerful device, either because of their memory usage or because fine-tuning requires a large dataset: delegation within a scenario lets users run such additional, hardware-specific optimizations on the actual target hardware.
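Conceptually, delegation means that a scenario still runs its optimization steps in order, but some of them are dispatched to the target device instead of the host. The sketch below models that with a hypothetical delegate flag and two stand-in runner callbacks; it does not reflect Kenning's scenario format or how Kenning communicates with the device:

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class OptimizationStep:
    name: str
    delegate: bool = False  # hypothetical flag: run this step on the target device


def run_steps(
    steps: list[OptimizationStep],
    run_on_host: Callable[[str], None],
    run_on_target: Callable[[str], None],
) -> None:
    """Run steps in scenario order, dispatching delegated ones to the target."""
    for step in steps:
        runner = run_on_target if step.delegate else run_on_host
        runner(step.name)


log: list[str] = []
steps = [
    OptimizationStep("fine-tuning with a large dataset", delegate=True),
    OptimizationStep("quantization"),
    OptimizationStep("compilation"),
]
# Stand-in runners that only record where each step would execute
run_steps(
    steps,
    run_on_host=lambda name: log.append(f"host: {name}"),
    run_on_target=lambda name: log.append(f"target: {name}"),
)
print(log)
```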

This feature can also be used for optimizations that can only be executed on the target (e.g. embedded) device, such as using performance benchmarks to empirically find the best-fitting implementations of certain operations in the model for a particular platform.
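The benchmark-driven selection described above is essentially autotuning: time several equivalent implementations on the device itself and keep the fastest. Below is a pure-Python stand-in for that idea; the three dot-product variants are hypothetical candidates, whereas real on-target tuning would compare compiled kernels rather than Python functions:

```python
import operator
import timeit


def dot_loop(a, b):
    total = 0.0
    for x, y in zip(a, b):
        total += x * y
    return total


def dot_generator(a, b):
    return sum(x * y for x, y in zip(a, b))


def dot_map(a, b):
    return sum(map(operator.mul, a, b))


CANDIDATES = {"loop": dot_loop, "generator": dot_generator, "map": dot_map}


def pick_fastest(candidates, args, repeat=5, number=100):
    """Check that candidates agree, then return the fastest name and all timings."""
    # Exact comparison is fine here: every variant adds in the same order
    reference = next(iter(candidates.values()))(*args)
    for name, fn in candidates.items():
        if fn(*args) != reference:
            raise ValueError(f"candidate {name} gives a different result")
    timings = {}
    for name, fn in candidates.items():
        # The minimum over repeats reduces noise from other processes
        timings[name] = min(
            timeit.repeat(lambda fn=fn: fn(*args), repeat=repeat, number=number)
        )
    return min(timings, key=timings.get), timings


vector = [float(i) for i in range(1000)]
best, timings = pick_fastest(CANDIDATES, (vector, vector))
print(best, timings)
```

Which variant wins depends on the machine it runs on, which is exactly why such measurements have to be taken on the target platform.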

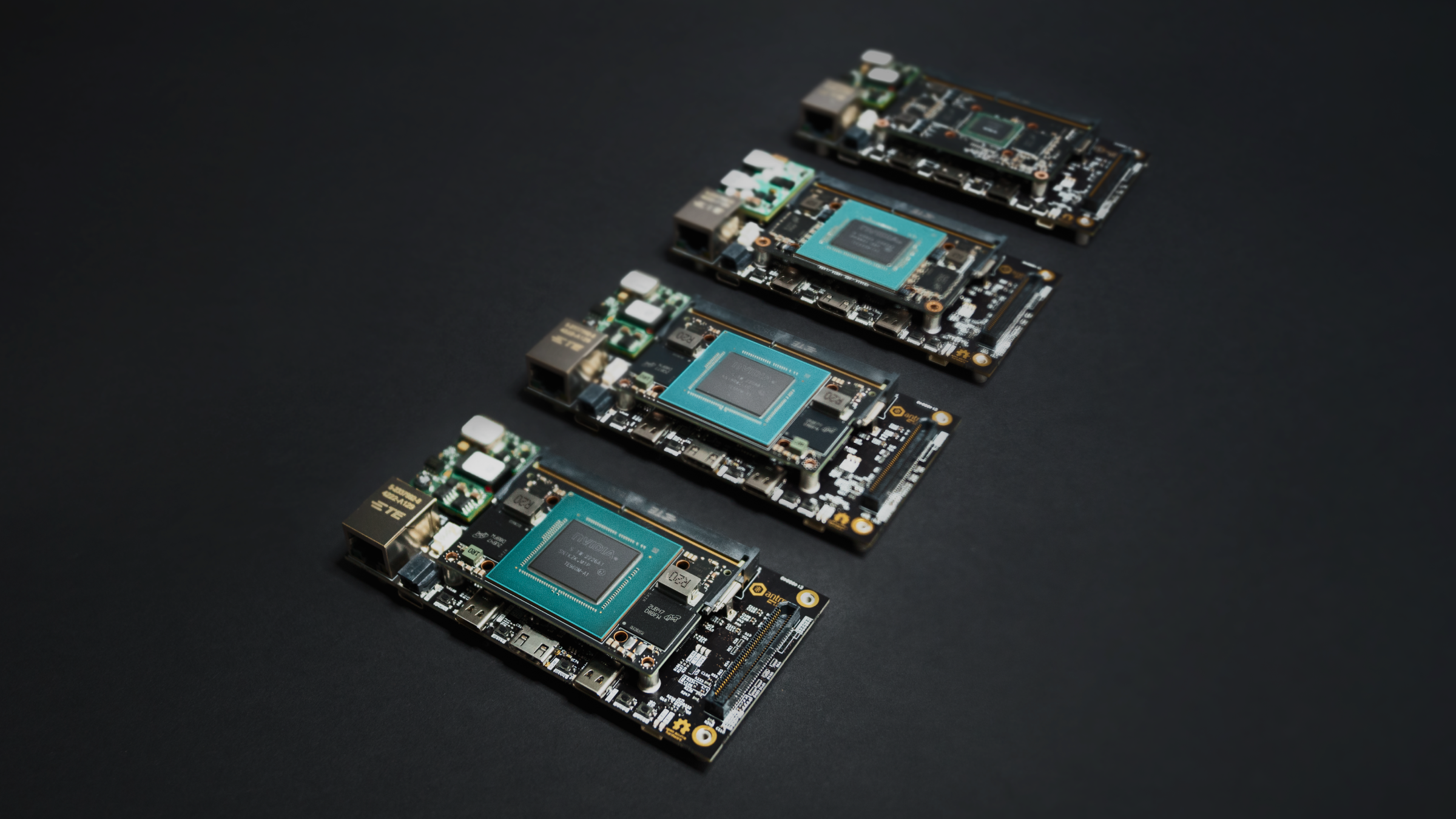

These optimization capabilities can be especially useful on higher-end edge AI devices like the NVIDIA Jetson AGX Orin in industrial machine vision scenarios like the one described in a blog note published earlier this year.

Example usage gallery

In order to guide users through some of the most common scenarios for using Kenning, we have prepared a gallery of step-by-step breakdowns including setup for each scenario, possible commands and arguments, as well as a discussion of available metrics and results.

So far, the available scenarios are:

- Displaying information about classes available in Kenning

- Visualizing Kenning data flows with Pipeline Manager

- Structured pruning for PyTorch models

- Model quantization and compilation of the model using TFLite and TVM

- Unstructured pruning for TensorFlow models

Add a GUI to your workflows with Pipeline Manager

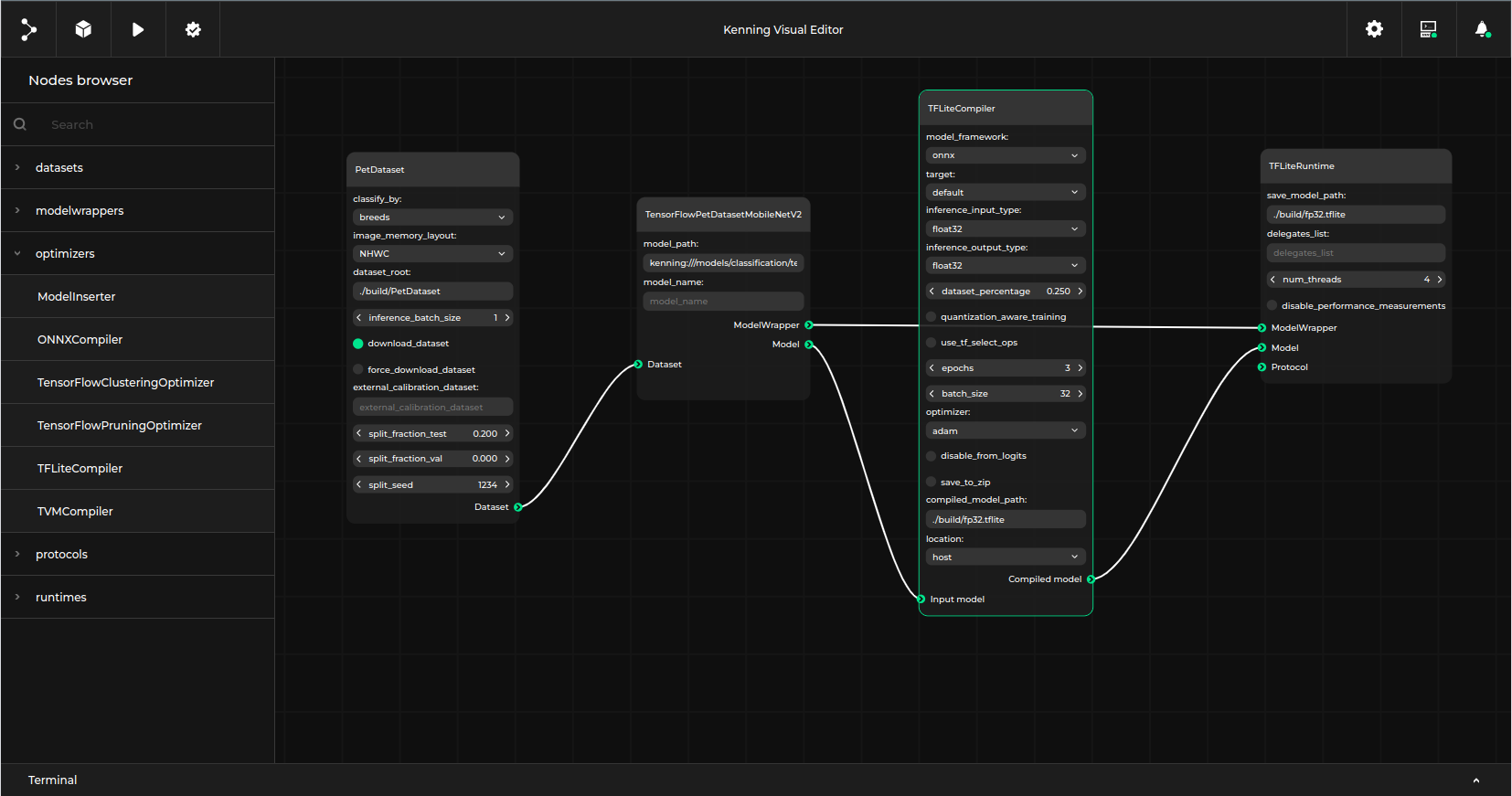

Parallel to the development of Kenning, Antmicro is constantly improving Pipeline Manager, a GUI tool for visualizing, editing and validating graph-like workflows, as well as running them using Kenning or other integrated applications as a back end. With the recent updates, the tool can be launched from Kenning with a single command (kenning visual-editor). The image above shows a sample Kenning workflow in the Pipeline Manager editor.

While Pipeline Manager was initially developed for working with Kenning dataflows, it can be integrated and used with any application that uses graph-like or flow-like data, e.g. the Visual System Designer, Antmicro’s open source embedded system design tool. You can read about the latest improvements in Pipeline Manager in one of Antmicro’s recent blog notes.
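One common way to validate a graph-like workflow is to check that every connection points to an existing node and that the graph has no cycles, so that a valid execution order exists. The sketch below does both with Python's standard graphlib module; the node names are hypothetical, and Pipeline Manager's actual validation rules are not reproduced here:

```python
from graphlib import CycleError, TopologicalSorter

# Hypothetical dataflow: each node maps to the set of nodes it consumes data from
flow = {
    "dataset": set(),
    "model_wrapper": {"dataset"},
    "optimizer": {"model_wrapper"},
    "runtime": {"optimizer"},
    "report": {"runtime"},
}


def validate_flow(graph: dict[str, set[str]]) -> list[str]:
    """Return a valid execution order or raise ValueError describing the problem."""
    defined = set(graph)
    for node, inputs in graph.items():
        missing = inputs - defined
        if missing:
            raise ValueError(f"{node!r} is connected to undefined nodes {sorted(missing)}")
    try:
        return list(TopologicalSorter(graph).static_order())
    except CycleError as err:
        # CycleError stores the offending cycle as the second element of args
        raise ValueError(f"cycle detected: {err.args[1]}") from err


print(validate_flow(flow))
```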

Make Kenning a part of your open source toolkit

With the UX optimizations and new capabilities described above, as well as the latest additions to Pipeline Manager described in another one of Antmicro’s blog notes, deploying and optimizing DNNs, also as part of larger open source toolkits, has become more accessible than ever.

If you want to benefit from a comprehensive, open source workflow for developing, optimizing, testing, and deploying AI-enabled edge computing solutions, reach out to us at contact@antmicro.com and learn how you can combine Kenning with simulation in Renode and other parts of Antmicro’s embedded system design toolkit.