NVIDIA’s recently announced “Super” update, a software enhancement that enables additional power modes for the Jetson Orin SoMs, is great news for Antmicro’s customers who build powerful, small-footprint edge devices on top of our open source Jetson Orin Baseboard, now revised to fully support the update.

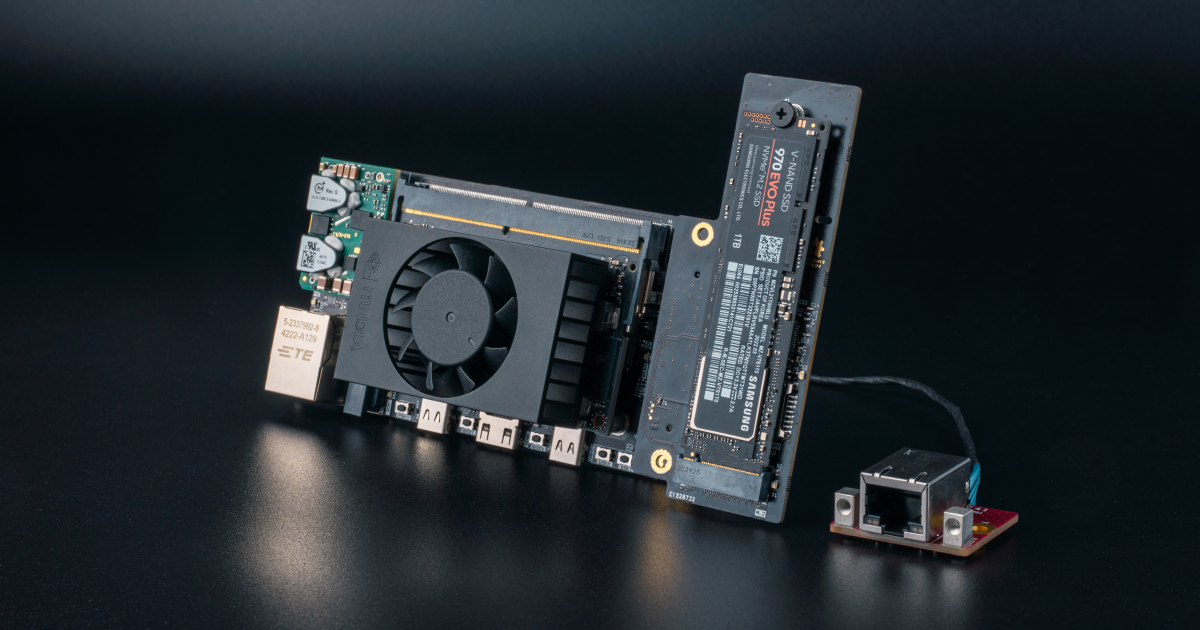

The update enables an additional power mode (25W) for the Jetson Orin Nano Super and another (40W) for the Jetson Orin NX Super by raising the operating clock frequencies, including the CPU clock. Note that the Jetson Orin NX Super requires a supply voltage of at least 8V (compared to 5V for the pre-Super variant), a requirement met by the latest revision of Antmicro’s Jetson Orin Baseboard, which we recently published on GitHub.

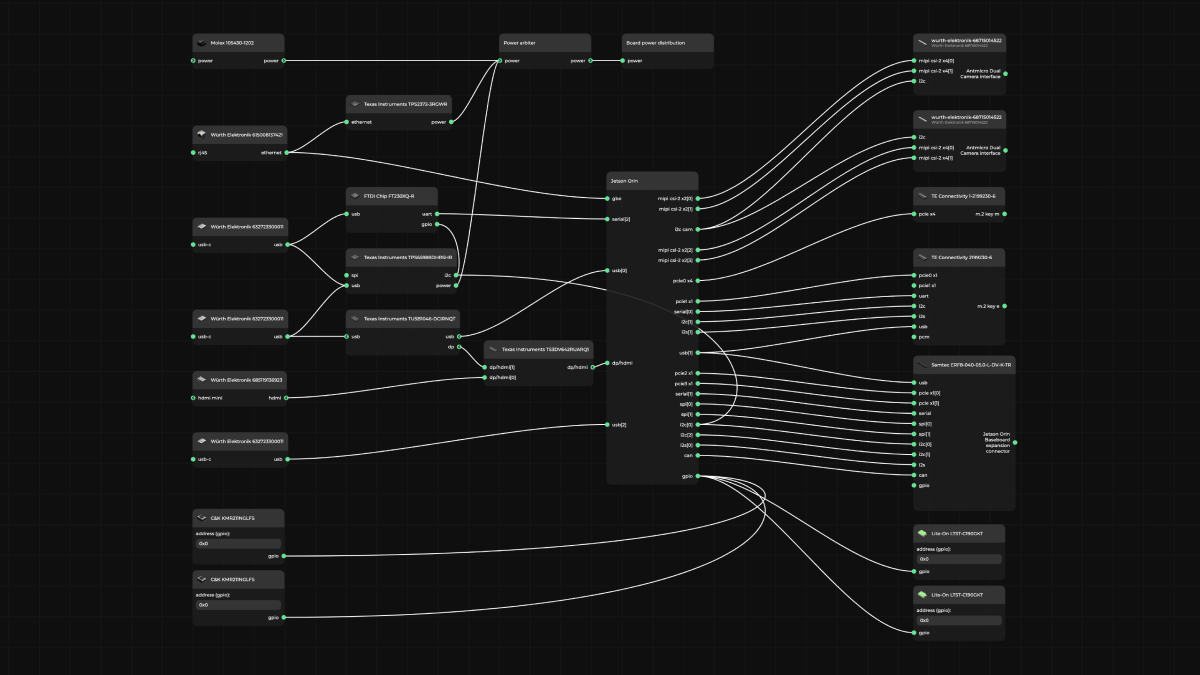


The “Super” update brings up to 1.7x higher AI model performance on the same hardware at a reduced price. Combined with sparse matrix optimizations and the model/runtime optimization and deployment capabilities of our open source Kenning framework, it makes state-of-the-art edge compute even easier to deploy in practice.

Vertically integrated open source stack for fast development of highly-optimized ML systems

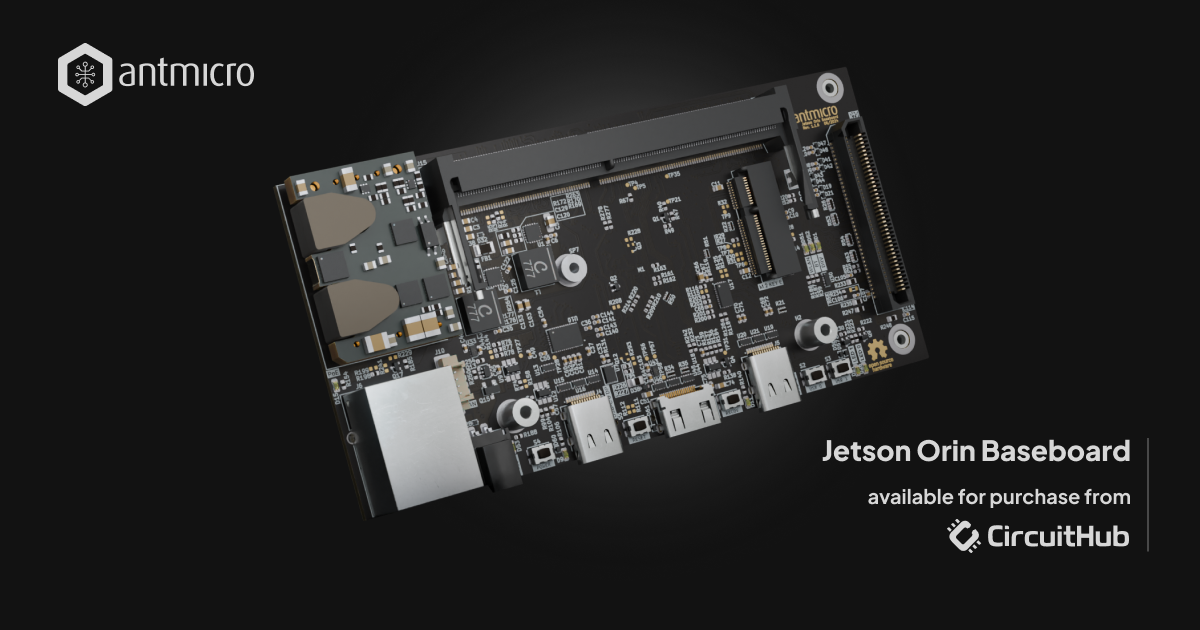

Antmicro has a proven track record of developing products based on Jetson series devices and an ever-growing portfolio of open source hardware and software for rapid development and prototyping of edge AI solutions. Revision 1.3.0 of our popular Jetson Orin Baseboard is a great starting point for developing customized, high-end edge AI devices based on the “Super” SoMs. The baseboard exposes the interfaces of the Orin SoMs in a small form factor, provides a thoroughly tested and optimized platform for further miniaturization or feature expansion, and is compatible out of the box with our open source hardware accessories, paving the way for a variety of computer vision solutions.

The Jetson-based devices we build can be paired with the meta-antmicro Yocto layer for creating reproducible AI systems, and with our open source Protoplaster tool for hardware and BSP testing. Antmicro’s toolkit also includes RDFM (Remote Device Fleet Manager), which enables automated, data-efficient OTA updates and management of edge-deployed AI models and runtimes across fleets of devices.

On the AI runtime, model optimization and deployment front, our open source Kenning framework offers LLM optimizations designed to leverage the sparse matrix operations supported by NVIDIA hardware, including the Jetson Orin Nano Super and NX Super SoMs. Kenning also provides a graphical layer for developing optimization and deployment flows, automatic pipeline optimization, and extensive resources for benchmarking on Jetson platforms.
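To give a feel for what "sparse matrix operations" means on this hardware: the Ampere-architecture GPU in Jetson Orin modules can accelerate 2:4 structured sparsity, where at most two of every four consecutive weights are non-zero. The sketch below is a generic, hypothetical illustration of magnitude-based 2:4 pruning in pure Python, not Kenning's implementation; the function names are our own, and grouping weights along each row is an assumption about the tensor layout.

```python
def prune_2_4(row):
    """Apply 2:4 structured sparsity to a flat list of weights.

    In every consecutive group of four values, the two with the smallest
    magnitude are set to zero, leaving at most two non-zeros per group.
    """
    if len(row) % 4 != 0:
        raise ValueError("row length must be a multiple of 4")
    pruned = [float(w) for w in row]
    for start in range(0, len(row), 4):
        group = range(start, start + 4)
        # Indices of the two smallest-magnitude weights in this group
        # (sorted() is stable, so ties drop the earlier index first).
        smallest = sorted(group, key=lambda i: abs(row[i]))[:2]
        for i in smallest:
            pruned[i] = 0.0
    return pruned


def is_2_4_sparse(row):
    """Check that every group of four values contains at most two non-zeros."""
    return len(row) % 4 == 0 and all(
        sum(1 for w in row[s:s + 4] if w != 0) <= 2
        for s in range(0, len(row), 4)
    )


def prune_matrix(weights):
    """Prune each row of a weight matrix (list of lists) independently."""
    return [prune_2_4(r) for r in weights]


if __name__ == "__main__":
    row = [0.1, -0.9, 0.3, 0.05, 2.0, -0.2, 0.0, 1.5]
    print(prune_2_4(row))  # [0.0, -0.9, 0.3, 0.0, 2.0, 0.0, 0.0, 1.5]
```

In practice, pruned models are usually fine-tuned to recover accuracy, and the speedup only materializes when the sparse weights are executed by a runtime or library that targets sparse Tensor Cores (such as TensorRT or cuSPARSELt).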

Additionally, the Multi-Process Service (MPS), also introduced along with the “Super” update, brings substantial performance gains for AI systems in which multiple processes run AI models on the GPU in parallel: benchmarks running matrix multiplications in 4 to 16 parallel processes showed 20-30% faster simultaneous processing with MPS than without it. This is especially useful for workloads involving parallel execution, e.g. ROS 2 nodes analyzing frames from cameras.
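A comparison like the one described above can be structured roughly as follows. This is a minimal sketch under stated assumptions, not the benchmark code behind the quoted figures: all names (`matmul`, `worker`, `run_parallel`, `relative_gain`) are hypothetical, and `matmul` is a pure-Python CPU placeholder so the script runs anywhere. Since MPS only affects CUDA work, a real measurement would replace it with a GPU matrix multiplication and run the harness twice: once with the MPS control daemon stopped and once with it started (`nvidia-cuda-mps-control -d`, on systems where MPS is available). `relative_gain` assumes that "faster" means a reduction in total wall-clock time.

```python
import multiprocessing as mp
import random
import time


def matmul(a, b):
    """Naive pure-Python matrix product; a placeholder for a GPU kernel."""
    n, k, m = len(a), len(b), len(b[0])
    return [[sum(a[i][p] * b[p][j] for p in range(k)) for j in range(m)]
            for i in range(n)]


def worker(size, repeats, seed):
    """One process: repeatedly multiply two random size x size matrices."""
    rng = random.Random(seed)
    a = [[rng.random() for _ in range(size)] for _ in range(size)]
    b = [[rng.random() for _ in range(size)] for _ in range(size)]
    for _ in range(repeats):
        matmul(a, b)


def run_parallel(num_procs, size=48, repeats=2):
    """Start num_procs workers at once; return total wall-clock seconds."""
    procs = [mp.Process(target=worker, args=(size, repeats, seed))
             for seed in range(num_procs)]
    start = time.perf_counter()
    for p in procs:
        p.start()
    for p in procs:
        p.join()
    return time.perf_counter() - start


def relative_gain(baseline_s, mps_s):
    """Percentage reduction in wall-clock time relative to the baseline run."""
    return 100.0 * (baseline_s - mps_s) / baseline_s


if __name__ == "__main__":
    for n in (4, 8, 16):
        print(f"{n:2d} processes: {run_parallel(n):.3f} s")
```

Running the script prints the wall-clock time for 4, 8 and 16 concurrent processes; feeding the timings from a run without MPS and a run with MPS into `relative_gain` yields a percentage comparable in form to the one quoted above.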

Get ahead of the curve with Nano Super and NX Super-based solutions

NVIDIA’s “Super” update (resulting in a rebranding of the Orin series to Jetson Orin Nano Super and NX Super), together with Antmicro’s rapid enablement of support for the platform, lowers the barrier to entry for powerful edge compute once again. For customers looking to be first to market with these advantages, Antmicro’s services offer fast turnaround, full ownership, customizability and transparency in building AI computer vision solutions, advanced industrial robots, systems for AI-enabled space use cases and more.

To take advantage of the compute unlocked by the “Super” update with the help of Antmicro’s open source hardware and software portfolio and our services for building commercial edge AI devices, contact us at contact@antmicro.com and describe the needs of your project.